QUIC Analysis - A UDP-Based Multiplexed and Secure Transport

Introduction

This article analyzes the QUIC protocol: how it works, what its advantages and disadvantages are, and what impact it is likely to have on the networking world.

QUIC is a new connection-oriented protocol that creates a stateful interaction between a client and a server. It substantially modernizes the transport layer by bringing a set of new features.

1. QUIC (RFC 9000)

QUIC is a new transport protocol based on UDP, published as RFC 9000 (May 2021). QUIC was originally designed by Google (around 2012) to support HTTP/3, but it became a standard, general-purpose transport protocol. It now also carries DNS and SMB, and other protocols are expected to follow.

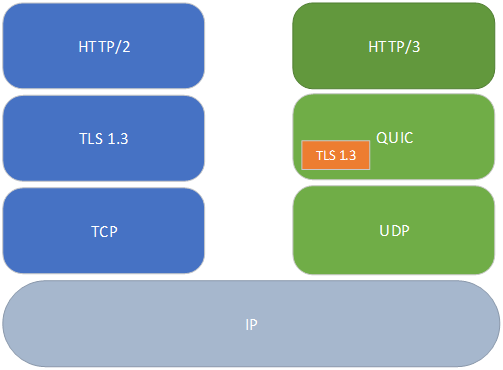

Comparing HTTP/2 (based on TCP) with HTTP/3 (based on QUIC) gives the following result:

On HTTP/2, the TCP stack manages end-to-end session establishment as well as reliability and congestion control. The TLS stack manages peer authentication, integrity, and confidentiality of the data. Then the HTTP stack manages the application data exchange.

On HTTP/3, the UDP stack only provides datagram delivery between the endpoints; unlike TCP, it is connectionless. Above it is the QUIC stack, which manages connection establishment, stream multiplexing, reliability, congestion control, and security by including the TLS stack. Then the HTTP stack manages the application data exchange.

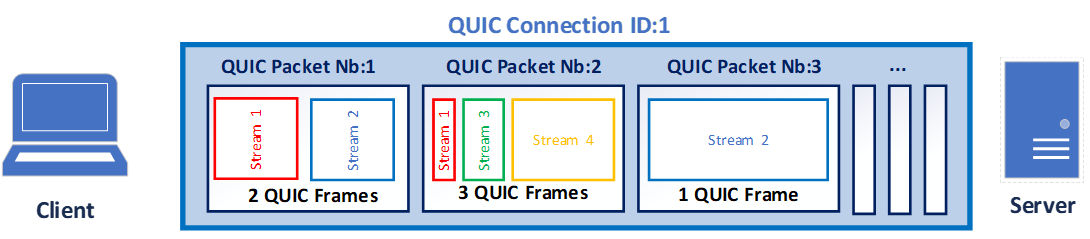

The QUIC protocol runs over UDP (a layer 4 protocol). UDP transports QUIC “packets” (one or more per datagram), and QUIC “packets” encapsulate QUIC “frames”. Compared with the usual network naming convention, this is a little mixed up: an Ethernet frame encapsulates an IP packet, which encapsulates a UDP datagram, which encapsulates one or several QUIC packets, which encapsulate one or several QUIC frames that contain the application data.

QUIC opens a connection between a server and a client. This connection carries one or multiple QUIC streams. The QUIC connection manages the transport of QUIC packets between endpoints, and the QUIC streams manage the data exchange. A QUIC packet is composed of a header and one or multiple QUIC frames (ACK, CRYPTO, STREAM...).

QUIC uses long packet headers during connection establishment. A long header packet can be an Initial, 0-RTT, Handshake, Retry or Version Negotiation packet. Once 1-RTT keys are available, a sender switches from long headers to short headers.
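The distinction between long and short headers can be sketched from the first bytes of a packet. This is a minimal illustration, assuming QUIC version 1 as defined in RFC 9000 (other versions may assign the type bits differently); the function name `classify_packet` is hypothetical, and the sketch does not parse the rest of the header.

```python
# Long header packet types for QUIC version 1 (RFC 9000, Section 17.2).
LONG_PACKET_TYPES = {0: "Initial", 1: "0-RTT", 2: "Handshake", 3: "Retry"}


def classify_packet(data: bytes) -> str:
    """Return a coarse label for a QUIC packet based on its first bytes."""
    if not data:
        raise ValueError("empty packet")
    first = data[0]
    if first & 0x80 == 0:
        # Header Form bit is 0: short header, used for 1-RTT packets.
        return "1-RTT (short header)"
    if len(data) < 5:
        raise ValueError("a long header needs at least 5 bytes")
    version = int.from_bytes(data[1:5], "big")
    if version == 0:
        # A Version field of 0 marks a Version Negotiation packet.
        return "Version Negotiation"
    # Bits 0x30 of the first byte carry the long packet type.
    return LONG_PACKET_TYPES[(first & 0x30) >> 4]
```

For instance, a first byte of `0xC0` followed by version `0x00000001` is classified as an Initial packet, while any first byte with the top bit cleared is treated as a short header packet.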

QUIC connection establishment

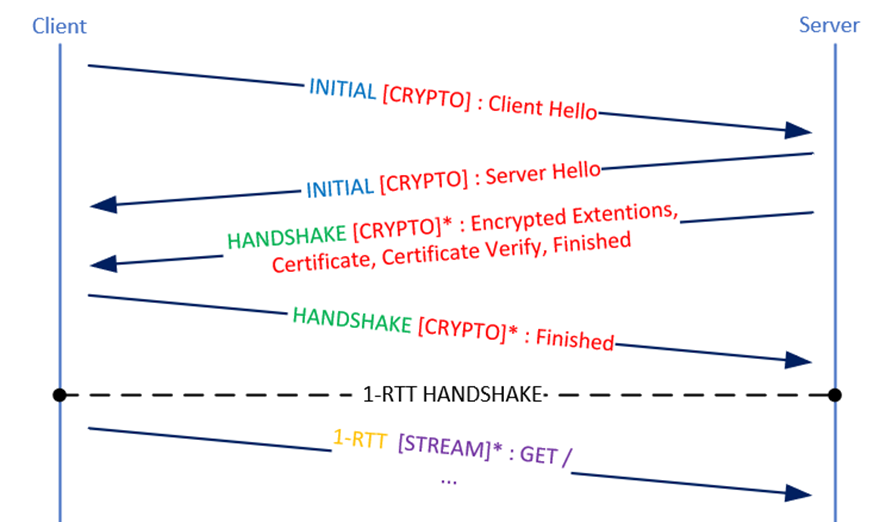

1-RTT handshake

The first handshake between a client and a server is called the 1-RTT handshake (RTT: Round Trip Time). HTTP/2 needs at least 3 RTTs for its first connection and 2 RTTs for a subsequent one. In QUIC, the initial connection takes only 1 RTT to establish communication.

The first packet contains the Client Hello (TLS 1.3). The use of TLS in QUIC is defined in RFC 9001. The server then replies with the Server Hello and all the security information (certificate…). This information is sent in several QUIC packets at the same time. At this point, only one RTT has elapsed. The client finishes the handshake and begins to send encrypted application data (represented with a *) in a 1-RTT packet.

ACK frames (acknowledgments) are not shown in the exchanges because they can either be merged into a response packet or sent in a dedicated packet. Acknowledgment is described later.

NT and DONE frames, which are exchanged in parallel after the client's TLS Finished, are also hidden. NT frames correspond to NEW_TOKEN; the client will use this token in a later 0-RTT handshake. DONE frames (HANDSHAKE_DONE), sent by the server, indicate that the handshake is done.

0-RTT handshake

When a client reconnects to a server it already knows, it can send application data immediately; this is called 0-RTT. The client sends a Client Hello (which can contain a client token) together with a 0-RTT packet that contains application data encrypted with the previous PSK (Pre-Shared Key, represented with a *). The client uses this old PSK for the first flight (“early data”) but negotiates a new PSK (represented with a **), which is used for the rest of the connection. The server can refuse this connection (for example if the PSK is revoked); in that case the client falls back to a 1-RTT handshake.
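To make the round-trip figures concrete, the sketch below converts them into a connection setup delay for a given RTT. The round-trip counts are the ones quoted in this article; the dictionary labels and the 50 ms RTT in the usage note are illustrative assumptions, and the calculation ignores server processing time and packet loss.

```python
# Round trips spent before the first application byte can be sent,
# using the figures quoted in this article.
ROUND_TRIPS = {
    "HTTP/2 first connection": 3,
    "HTTP/2 subsequent connection": 2,
    "QUIC 1-RTT handshake": 1,
    "QUIC 0-RTT handshake": 0,
}


def setup_delay_ms(rtt_ms: float) -> dict:
    """Return the connection setup delay of each case for a given RTT."""
    return {name: trips * rtt_ms for name, trips in ROUND_TRIPS.items()}
```

With a hypothetical RTT of 50 ms, `setup_delay_ms(50.0)` gives 150 ms for a first HTTP/2 connection, 50 ms for a QUIC 1-RTT handshake and 0 ms for a QUIC 0-RTT handshake.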

QUIC connection termination

An established QUIC connection can be terminated in one of three ways:

- IDLE timeout: the connection is closed if the max_idle_timeout is reached.

- Immediate close: An endpoint sends a CONNECTION_CLOSE frame. All streams are immediately closed. Violations of the protocol lead to an immediate close.

- Stateless reset: A stateless reset is provided as an option of last resort for an endpoint that does not have access to the state of a connection.

In most cases, the idle timeout is what closes the connection. This differs from a TCP session, where a FIN or an RST signals that the session is closed. In QUIC, we need to know the max_idle_timeout (present in the Client Hello and in Encrypted Extensions; peers take the minimum value) and determine whether the time elapsed since the last packet of the session has reached this limit. The defaults appear to be 2 min on the server side and 30 s on the client side (Chrome/Firefox).
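The "peers take the minimum value" rule can be sketched as follows. This is a simplified illustration with hypothetical function names: it treats a value of 0 as "no timeout advertised" and ignores the additional adjustments a real stack applies (for example, relating the timeout to the probe timeout).

```python
def effective_idle_timeout(local_ms: int, peer_ms: int) -> int:
    """Combine both advertised max_idle_timeout values (in milliseconds).

    A value of 0 means the endpoint did not advertise a timeout.
    Returns 0 when neither side enables the idle timeout.
    """
    advertised = [value for value in (local_ms, peer_ms) if value > 0]
    return min(advertised) if advertised else 0


def is_idle_expired(last_activity_ms: int, now_ms: int, timeout_ms: int) -> bool:
    """True when the connection has been silent for the whole timeout."""
    if timeout_ms == 0:
        return False  # idle timeout disabled
    return now_ms - last_activity_ms >= timeout_ms
```

With the defaults mentioned above (30 s on the client, 2 min on the server), `effective_idle_timeout(30_000, 120_000)` returns 30000, so a monitoring tool would consider the connection closed after 30 s of silence.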

Acknowledgment

An endpoint MUST acknowledge all ack-eliciting Initial and Handshake packets immediately and all ack-eliciting 0-RTT and 1-RTT packets within its advertised max_ack_delay, with the following exception:

Prior to handshake confirmation, an endpoint might not have packet protection keys for decrypting Handshake, 0-RTT, or 1-RTT packets when they are received. It might therefore buffer them and acknowledge them when the requisite keys become available.

An endpoint that is only sending non-ack-eliciting packets might choose to occasionally add an ack-eliciting frame to those packets to ensure that it receives an acknowledgment.

ACK frames SHOULD always acknowledge the most recently received packets.

TCP bases its ACK on the sequence number and length of the last contiguous segment received (Ack = Seq + Len). QUIC bases its mechanism on 3 main fields:

- Largest Acknowledged = the largest packet number being acknowledged, usually the last packet received.

- First ACK Range = number of contiguous packets acknowledged preceding the Largest Acknowledged. This value can decrease depending on the number of packets processed by the application. It restarts at 0 when a packet loss occurs.

- ACK Ranges = used when there is packet loss. Detailed in the following section.

If there is no packet loss, ACK Ranges are not used, and the endpoint can consider that all previous packets have been received correctly.

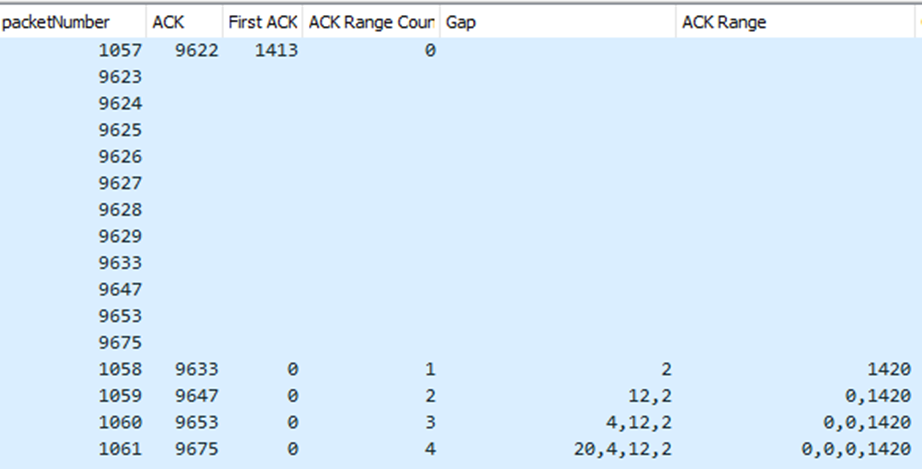

Packet loss detection

Packet loss detection is different from TCP. In QUIC, if a packet is lost, the endpoint adds to the next ACK frame an ACK range, which is composed of 2 fields:

- ACK Range Length describes the block of contiguous packets received before the gap. ACK Range Length = number of packets received since the last Largest Acknowledged + First ACK Range.

- Gap describes the run of missing packets between that block and the packets received after the loss; the encoded value is the number of missing packets minus one. Gap = New Largest Acknowledged - last packet received before the loss - 2 (when the newly received packet is the only one after the gap).
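The way a receiver of an ACK frame turns these fields back into packet numbers can be sketched as below. The arithmetic follows the ACK range rules of RFC 9000 (the smallest number of a range is its largest minus the range length, and the next range starts `gap + 2` below the previous smallest); the function name `decode_ack_ranges` and the tuple layout are illustrative assumptions.

```python
def decode_ack_ranges(largest_acked, first_ack_range, ranges=()):
    """Expand ACK frame fields into inclusive (smallest, largest) intervals.

    `ranges` is a sequence of (gap, ack_range_length) pairs in wire order.
    """
    largest = largest_acked
    smallest = largest - first_ack_range
    intervals = [(smallest, largest)]
    for gap, ack_range_length in ranges:
        # Skip the unacknowledged packets described by the gap.
        largest = smallest - gap - 2
        smallest = largest - ack_range_length
        intervals.append((smallest, largest))
    return intervals
```

For example, `decode_ack_ranges(9633, 0, [(2, 6)])` yields the intervals `(9633, 9633)` and `(9623, 9629)`, which means that packets 9630 to 9632 were not received.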

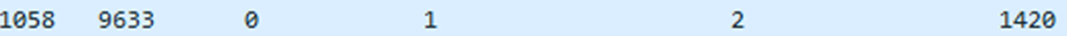

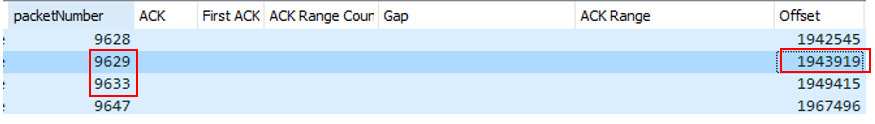

In this example, several packets are lost. The client receives packets 9623 to 9629 without issue. Packets 9630, 9631 and 9632 are missing; then it receives packet 9633. At this moment the client sends an ACK and adds an ACK range.
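The ACK fields for this example can be derived from the set of received packet numbers. The sketch below is one possible way to do it, not the method of any particular implementation; `build_ack_fields` is a hypothetical helper, and the gap and range values are the encoded ones defined in RFC 9000.

```python
def build_ack_fields(received):
    """Derive ACK frame fields from the received packet numbers.

    Returns (largest_acked, first_ack_range, [(gap, ack_range_length), ...]).
    """
    numbers = sorted(set(received), reverse=True)
    if not numbers:
        raise ValueError("nothing to acknowledge")
    # Group the packet numbers into contiguous runs, highest run first.
    runs = []  # each run is [largest, smallest]
    for pn in numbers:
        if runs and runs[-1][1] - 1 == pn:
            runs[-1][1] = pn
        else:
            runs.append([pn, pn])
    largest_acked, smallest = runs[0]
    first_ack_range = largest_acked - smallest
    ranges = []
    for largest, next_smallest in runs[1:]:
        gap = smallest - largest - 2  # missing packets minus one
        ranges.append((gap, largest - next_smallest))
        smallest = next_smallest
    return largest_acked, first_ack_range, ranges
```

Calling `build_ack_fields(list(range(9623, 9630)) + [9633])` returns `(9633, 0, [(2, 6)])`: Largest Acknowledged 9633, a First ACK Range of 0, and one ACK range with a Gap of 2 (three missing packets) and an ACK Range Length of 6 (packets 9623 to 9629).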

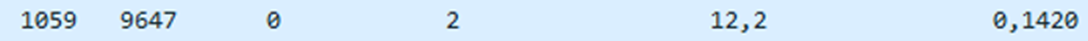

Then the client receives packet 9647, so it again detects packet loss. It sends an ACK with an ACK range count of 2 (because the first ACK range has not been resolved yet).

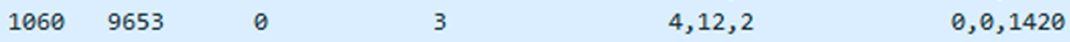

Then the client receives packet 9653, so there is new packet loss. The client sends a new ACK that also carries the two previous ACK range values.

Then the client receives packet 9675, with new packet loss. The client sends a new ACK that also carries the three previous ACK range values.

When the server sees ACKs that carry ACK ranges, which tell it that packets were lost, it retransmits the data in packets with new packet numbers.

In this example, the server sets the Offset field (in the STREAM frame) back to the offset of the missing data and resends that data.

At this moment the client acknowledges the new packet and removes one ACK range: the ACK range count changes from 8 to 7. Then there are two options, depending on the nature of the exchange:

- The server can wait for the end of the stream before resending all the missing packets detected by the client. A file download matches this case. The client can then finish the stream with an ACK range count of 40 or more.

- Alternatively, the server can retransmit some of the missing packets while sending other packets at the same time. The ACK range count then decreases progressively, but if a new packet loss occurs the counter can increase again…
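The retransmission behavior described above (same stream offset, new packet number) can be sketched with a small sender model. The class and field names are hypothetical, and the sketch leaves out everything else a real sender does, such as loss timers and congestion control.

```python
from dataclasses import dataclass, field


@dataclass
class StreamChunk:
    stream_id: int
    offset: int
    data: bytes


@dataclass
class Sender:
    next_packet_number: int = 0
    in_flight: dict = field(default_factory=dict)  # packet number -> chunk

    def send(self, chunk: StreamChunk) -> int:
        """Send a chunk in a fresh packet and remember it until acknowledged."""
        pn = self.next_packet_number
        self.next_packet_number += 1
        self.in_flight[pn] = chunk
        return pn

    def on_ack(self, acked_packet_numbers) -> None:
        """Forget the chunks carried by acknowledged packets."""
        for pn in acked_packet_numbers:
            self.in_flight.pop(pn, None)

    def on_loss(self, lost_packet_numbers) -> list:
        """Resend lost chunks: same stream offset, new packet numbers."""
        new_numbers = []
        for pn in lost_packet_numbers:
            chunk = self.in_flight.pop(pn, None)
            if chunk is not None:
                new_numbers.append(self.send(chunk))
        return new_numbers
```

A packet number is never reused in this model: the lost chunk keeps its original stream offset but travels in a packet with the next available number, which is what lets the receiver remove the corresponding ACK range once the data arrives.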

Out-of-order detection

Endpoints MUST be able to deliver stream data to an application as an ordered byte stream. Delivering an ordered byte stream requires that an endpoint buffer any data that is received out of order, up to the advertised flow control limit.
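A receive buffer that satisfies this requirement can be sketched as follows. This is a minimal illustration with hypothetical names: `max_buffered` stands in for the advertised flow control limit, and overlapping chunks are handled only in the simple case where a chunk starts at or before the next expected byte.

```python
class StreamReassembler:
    """Buffers out-of-order stream data and releases it in offset order."""

    def __init__(self, max_buffered: int = 65536):
        self.next_offset = 0              # first byte not yet delivered
        self.pending = {}                 # offset -> data waiting for earlier bytes
        self.max_buffered = max_buffered  # stand-in for the flow control limit

    def receive(self, offset: int, data: bytes) -> bytes:
        """Accept one chunk and return any bytes that are now deliverable."""
        end = offset + len(data)
        if end > self.next_offset + self.max_buffered:
            raise ValueError("data exceeds the advertised limit")
        # Keep the chunk only if it brings bytes that were not delivered yet.
        if end > self.next_offset and len(data) > len(self.pending.get(offset, b"")):
            self.pending[offset] = data
        delivered = bytearray()
        while self.pending:
            start = min(self.pending)
            if start > self.next_offset:
                break  # still missing earlier bytes
            chunk = self.pending.pop(start)
            fresh = chunk[self.next_offset - start:]  # drop already-delivered prefix
            delivered += fresh
            self.next_offset += len(fresh)
        return bytes(delivered)
```

If the chunk at offset 5 arrives before the chunk at offset 0, the first call returns nothing and the second call returns both chunks in order.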

QUIC Stream

A QUIC stream encapsulates data sent by an application. QUIC can carry multiple parallel streams in a QUIC connection. All streams are independent: each manages its own flow control and the retransmission of its lost data. A stream can be unidirectional or bidirectional, and can be opened by the client or the server.

Streams are identified by the stream ID. A stream ID is unique for all streams on a connection. A QUIC endpoint MUST NOT reuse a stream ID within a connection.
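In RFC 9000, the two least significant bits of the stream ID encode who opened the stream and whether it is bidirectional. The sketch below decodes them; the function name `describe_stream` is an illustrative choice.

```python
def describe_stream(stream_id: int) -> str:
    """Decode the initiator and direction encoded in a QUIC stream ID."""
    if stream_id < 0 or stream_id >= 2**62:
        raise ValueError("stream IDs are 62-bit unsigned integers")
    # Bit 0x1: 0 = client-initiated, 1 = server-initiated.
    initiator = "server" if stream_id & 0x1 else "client"
    # Bit 0x2: 0 = bidirectional, 1 = unidirectional.
    direction = "unidirectional" if stream_id & 0x2 else "bidirectional"
    return f"{initiator}-initiated, {direction}"
```

Stream 0 is therefore the first client-initiated bidirectional stream, and stream 3 is the first server-initiated unidirectional stream.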

Stream termination

Streams end when the QUIC connection is closed, or when an endpoint closes them. There are two ways:

- The FIN bit of a STREAM frame is set to end the stream (clean, most common).

- A RESET_STREAM frame ends the stream immediately (abrupt; it carries an Application Protocol Error Code field).

Flow Control

Flow control is a mechanism already present in TCP, and QUIC uses a very similar one.

Receivers need to limit the amount of data that they are required to buffer, in order to prevent a fast sender from overwhelming them or a malicious sender from consuming a large amount of memory.

Streams are flow controlled both individually and across a connection as a whole.

Similarly, to limit concurrency within a connection, a QUIC endpoint controls the maximum cumulative number of streams that its peer can initiate.

Flow control is defined in RFC 9000 itself. Another RFC (9002) defines the related loss detection and congestion control mechanisms.
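The two levels of flow control (per stream and per connection) can be sketched from the sender's point of view. This is a simplified model with hypothetical class and method names: the limits correspond to what the peer advertises and later raises with MAX_DATA and MAX_STREAM_DATA frames, and the limit on the number of streams is left out.

```python
class FlowControl:
    """Tracks how much a sender may still send per stream and per connection."""

    def __init__(self, max_data: int, max_stream_data: int):
        self.max_data = max_data                # connection-wide limit
        self.max_stream_data = max_stream_data  # initial per-stream limit
        self.sent_total = 0
        self.stream_limits = {}
        self.sent_per_stream = {}

    def sendable(self, stream_id: int) -> int:
        """Bytes that may be sent on this stream right now."""
        limit = self.stream_limits.get(stream_id, self.max_stream_data)
        stream_credit = limit - self.sent_per_stream.get(stream_id, 0)
        connection_credit = self.max_data - self.sent_total
        return max(0, min(stream_credit, connection_credit))

    def record_send(self, stream_id: int, length: int) -> None:
        if length > self.sendable(stream_id):
            raise ValueError("would exceed a flow control limit")
        self.sent_per_stream[stream_id] = self.sent_per_stream.get(stream_id, 0) + length
        self.sent_total += length

    def on_max_data(self, new_limit: int) -> None:
        # Limits only ever grow; a smaller value is ignored.
        self.max_data = max(self.max_data, new_limit)

    def on_max_stream_data(self, stream_id: int, new_limit: int) -> None:
        current = self.stream_limits.get(stream_id, self.max_stream_data)
        self.stream_limits[stream_id] = max(current, new_limit)
```

A stream that still has credit of its own can nevertheless be blocked when the connection-wide limit is exhausted, which is why both values are checked in `sendable`.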

2. Performances

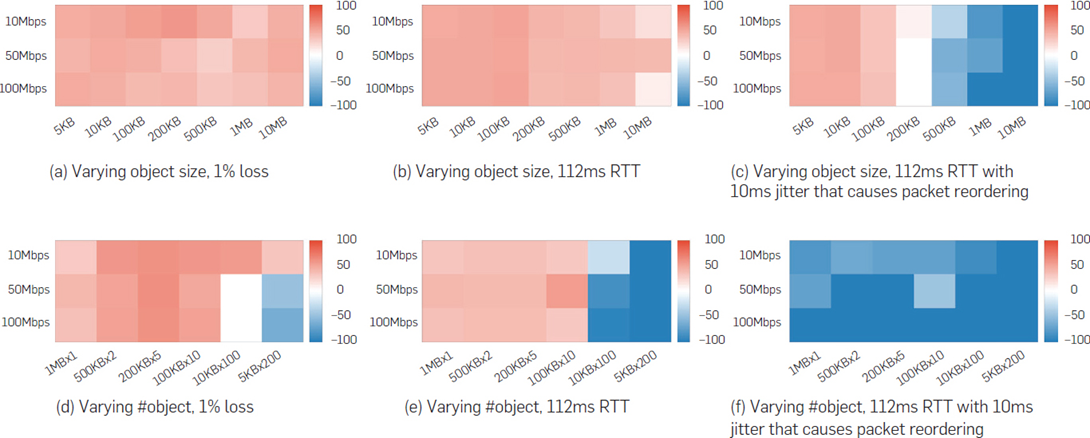

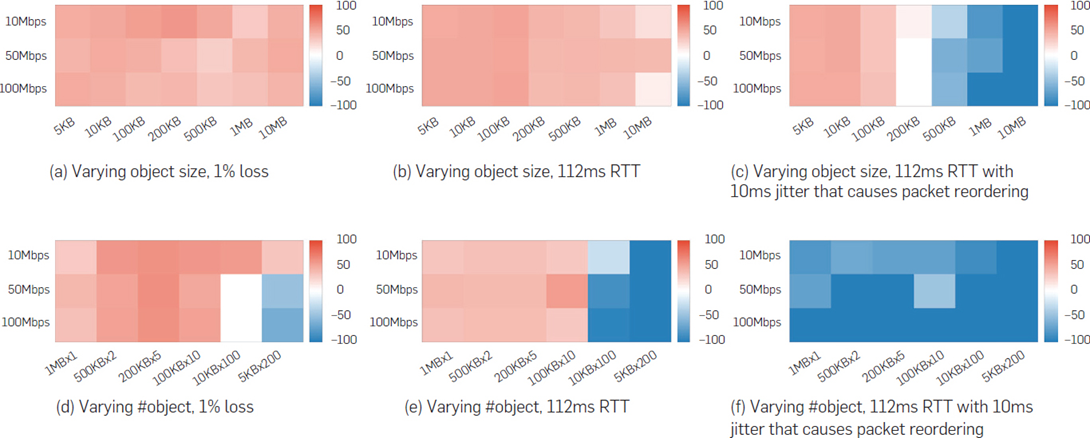

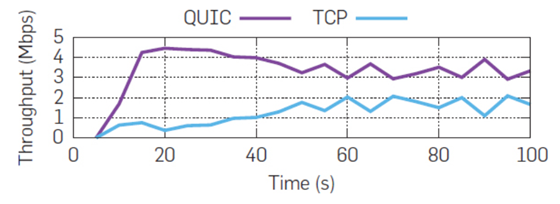

This section is based on the results of existing studies. Three interesting studies tested QUIC when it was still a draft. In the first study, the authors compare page load time between HTTP/2 over QUIC and HTTP/2 over TCP/TLS in a basic situation (no packet loss, jitter or high latency). The results are clearly in favor of QUIC, except when there are more than 100 objects to download.

Then the authors used different connection parameters (packet loss, latency, jitter). They combined all possibilities, and the results are provided through these charts.

We can see that QUIC is faster than TCP in most situations. High latency and packet loss favor QUIC. QUIC's weakness is jitter (out-of-order packets), but this situation is less common in networks than high latency and packet loss. Finally, the authors ran simultaneous tests on a constrained link (5 Mbps, 36 ms RTT, 30 KB buffer) to accentuate the trends and detect unfair behavior.

QUIC vs QUIC or TCP vs TCP: flows are fair to each other.

TCP vs QUIC: QUIC is unfair.

QUIC increases its window more aggressively (both in terms of slope, and in terms of more frequent window size increases).

In the second study, the authors confirm the results of the first one: QUIC is faster than TCP when packet loss occurs, and the results of the other tests are quite similar. The third study confirms the results of the first two.

It is expected that QUIC is faster than TCP on poor networks, thanks to faster session establishment, the PTO timer adjustment, the multiple packet loss ranges, the frequent congestion window updates and the benefit of UDP when a stream loses packets.

QUIC performance also suffers in several respects. The first is protocol overhead. Another study gives a good picture of this impact: to transfer 39 application bytes, TCP/TLS used 2.5 KB compared with 4 KB for QUIC during the handshake. A QUIC short header packet is 35 bytes versus 32 bytes in TCP, which is not a huge difference. However, that study only compares transfers of 39 bytes, so QUIC is always slower than TCP. This is theoretically correct, but the amount of data is far from that of a real web page, which limits the study. The conclusion of that article makes a good point (for Linux environments):

We think that the biggest disadvantage of QUIC in comparison to TCP+TLS is its execution in the user-space, because of the lower priority. If both are compared while executed in kernel-space, QUIC might perform better.

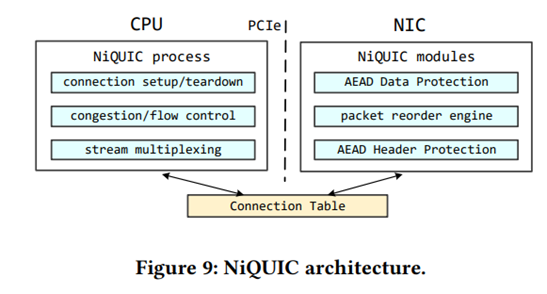

This study details the CPU utilization of QUIC and how it can be optimized. Currently, QUIC runs in user space, where crypto operations are slow. If these operations, along with the data plane and packet reordering, were moved to the NIC (Network Interface Controller), performance would improve. The architecture proposed to improve this situation is as follows:

An article shows the difference in performance between various MTU values when fetching a 1 GB file from an HTTP/3 server.

- With standard MTU (1252) = 19.9 s (baseline)

- With path discovery mechanism (MTU = 1472) = 17.7 s (11% faster): common environment (standard MTU = 1500)

- With path discovery mechanism (MTU = 4096) = 13.0 s (35% faster): data center environment (jumbo frame = 9000)
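The percentages in this list follow directly from the transfer times. The short sketch below reproduces the arithmetic; the variable and function names are illustrative, and the figures are the ones quoted above.

```python
def percent_faster(baseline_s: float, measured_s: float) -> float:
    """Reduction in transfer time relative to the baseline, in percent."""
    return (baseline_s - measured_s) / baseline_s * 100


BASELINE_S = 19.9                     # 1 GB download with the standard size (1252)
RESULTS_S = {1472: 17.7, 4096: 13.0}  # size -> transfer time in seconds

improvements = {
    size: round(percent_faster(BASELINE_S, seconds))
    for size, seconds in RESULTS_S.items()
}
```

Here `improvements` maps 1472 to 11 and 4096 to 35, matching the values reported by the article.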

Clearly, without a path MTU discovery mechanism, QUIC does not use the full capacity of the link by default. Firefox adds padding bytes to the Initial packet to test whether a datagram of length 1399 gets through. If it does, Firefox can by default send more data per datagram than Chrome.

3. Protocol over QUIC

HTTP/3

The first protocol developed over QUIC, in 2015, was HTTP/2 over QUIC. In 2018, it was renamed HTTP/3, the successor of HTTP/2. QUIC has since been standardized as an RFC (detailed previously), and HTTP/3 was validated in June 2022 (RFC 9114).

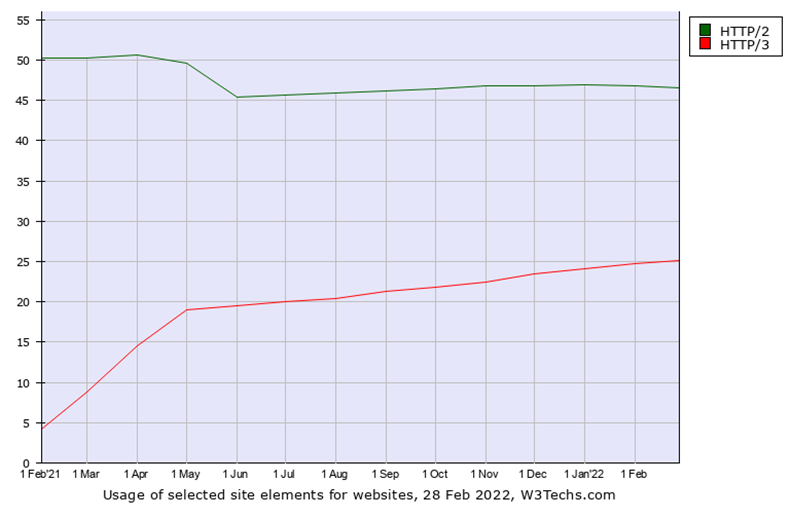

Currently HTTP/3 represents 25% of the global internet traffic with a good trend compared to HTTP/2:

The main websites using QUIC are all the Google, Microsoft, and Facebook services. Netflix could migrate its services to QUIC, but a lot of devices (connected TVs) do not support the protocol.

Even though many websites are waiting for the official HTTP/3 RFC, 25% of global Internet traffic already relies on an (advanced) draft, and the share keeps growing.

In the enterprise space, this protocol should be limited (or absent) because of its draft status and, above all, the security and monitoring impacts of QUIC (detailed in the next section). Apache still does not support QUIC, but web servers such as NGINX (nginx-quic), Quiche and LiteSpeed do.

HTTP/3 has been validated this month, so all web servers will support it in the near future. Given the performance and functional advantages of QUIC, we can also expect a rush to implement it for web applications.

DNS

Another important protocol linked to HTTP/3 is DNS. There are two aspects to this protocol:

- DNS over QUIC (DoQ) to secure exchanges and anonymize the client DNS requests.

- DNS protocol evolution so that it meets the needs of QUIC.

The first aspect is defined in an IETF draft. DoQ will use a dedicated UDP port (UDP/853, to be validated). Chrome also uses DNS over HTTPS (DoH), which runs over HTTP/2 and then HTTP/3, and therefore over QUIC in the end. The browser manages DNS requests itself, without using the DNS servers defined on the system. This second point is of key importance for QUIC. The second aspect is an evolution of the DNS protocol called SVCB and HTTPS RRs. It instantiates the SVCB record with a new HTTPS service and adds parameters.

example.com 3600 IN HTTPS 1 . alpn="h3,h2" ipv4hint="192.0.2.1" ipv6hint="2001:db8::1"

In this example, the DNS response for the HTTPS record contains the IP addresses (v4 and v6) as well as the HTTP versions supported. The client can contact the server directly over HTTP/3 (without passing through HTTP/2 as described previously).
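How a client could use such a record to decide whether to try HTTP/3 first can be sketched by parsing its textual form. This is an illustration only: `parse_https_record` and `supports_http3` are hypothetical helpers, the record is assumed to be written with straight quotes, and a real resolver works on the binary wire format rather than on text.

```python
import shlex


def parse_https_record(line: str) -> dict:
    """Parse the textual form of an HTTPS record into a dictionary."""
    fields = shlex.split(line)  # also strips the quotes around parameter values
    name, ttl, rr_class, rr_type, priority, target, *params = fields
    if rr_type != "HTTPS":
        raise ValueError("not an HTTPS record")
    svc_params = {}
    for param in params:
        key, _, value = param.partition("=")
        svc_params[key] = value
    alpn = svc_params.get("alpn", "")
    return {
        "name": name,
        "ttl": int(ttl),
        "priority": int(priority),
        "target": target,
        "alpn": alpn.split(",") if alpn else [],
        "ipv4hint": svc_params.get("ipv4hint"),
        "ipv6hint": svc_params.get("ipv6hint"),
    }


def supports_http3(record: dict) -> bool:
    """True when the record advertises the "h3" ALPN identifier."""
    return "h3" in record["alpn"]
```

Applied to the record above, the parser returns an `alpn` list of `["h3", "h2"]`, so `supports_http3` is true and the client can open a QUIC connection to 192.0.2.1 or 2001:db8::1 right away.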

SMB

QUIC is also used on Windows Server 2022 to carry the SMB protocol.

SMB over QUIC offers an "SMB VPN" for telecommuters, mobile device users, and high security organizations. All SMB traffic, including authentication and authorization within the tunnel is never exposed to the underlying network.

Future

QUIC will not arrive only for web applications using HTTP, but also for a lot of other applications. We can imagine SSH over QUIC (no disconnection and better performance for data transfers over SSH), NTP over QUIC (to secure exchanges), LDAP over QUIC (to benefit from 0-RTT)… In fact, all protocols could migrate to this new transport protocol. The QUIC website lists many in-progress documents that show an ever-growing list of QUIC implementations, and this seems to be just the beginning of deep changes in the network landscape.

4. Future concerns

Impact on network troubleshooting & monitoring

If QUIC becomes much more widespread, the way network engineers troubleshoot will change deeply. With the well-known Wireshark, a network engineer can troubleshoot all TCP exchanges: detect packet loss, retransmissions, congestion issues, out-of-order packets… With QUIC (without the session keys), most of the flow is encrypted and the parameters used for troubleshooting are hidden.

Take one example: a mobile user who was connected over Wi-Fi switches to LTE. Their IP address changes and a new session is created with 0-RTT (fully encrypted), so it is impossible to determine that it is the same user without the session keys.

All network monitoring solutions (NPMD) will be limited. Reading the SNI (Server Name Indication), which lets a middlebox know the destination of a connection without decrypting it, is no longer possible in 0-RTT mode (due to TLS 1.3). Certificate analysis is impossible (due to TLS 1.3). Tracking packet loss, latency, jitter, out-of-order packets, the congestion window, etc. is impossible. An NPMD solution will only be able to analyze throughput, packet rate, connection ID and the TLS handshake (only for the first connection), and that's all.

Currently, none of the NPMD solutions has a specific QUIC profile (following the QUIC connection ID…). If DNS moves to QUIC in the enterprise space, monitoring DNS requests and responses will become a nightmare. We can expect the same kind of difficulties for every protocol that migrates to QUIC.

Impact on security equipment

QUIC also has a huge impact on network security devices. This is why all security device vendors will slow down the deployment of QUIC.

Firewall

- Checkpoint: NO QUIC security profile. (Recommended to block it)

- Cisco: NO QUIC security profile.

- Palo Alto: NO QUIC security profile. (Recommended to block it)

- Fortinet: NO QUIC security profile. (Recommended to block it)

- Stormshield: NO QUIC security profile.

None of the main firewall brands supports the QUIC protocol yet. The protocol represented 5% of traffic when it was still a draft and now represents 25% (and growing), so this definitely looks like a lack of anticipation from these vendors.

Proxy

- F5: Already supported.

Conclusion

F5’s article conclusion

QUIC has broad industry support and the potential to be the basis of most applications that deliver business value over the internet. Anyone delivering applications over the internet should start thinking about how their operations should change to reflect the new threats and opportunities that these protocols bring.

This is also a good conclusion for this analysis.