Securing a Virtual Desktop Environment in a Public Cloud - Part 1

At Michelin, as at most organizations, COVID-19 increased both the need for and the usage of Virtual Desktop Infrastructure (VDI) in a public cloud for remote users. As we provided virtual desktops to more and more users, and to more types of users, a concern that started small grew bigger each day, and we needed to get it under control quickly. The concern was how to secure the flows coming out of our VDI environment into our company network while still allowing the access users required (secure, yet without impacting the user experience). This post will detail the concern and how we remediated it.

The Starting Point

As I mentioned, COVID-19 and working from home were a catalyst for us to ramp up our VDI environment. We had to quickly design VDI environments to support different use cases such as:

- Developers

- Light Office Workers

- Medium Office Workers

- Merger & Acquisitions

- Contractors

- ...

While creating these 'pools' for each use case we also implemented multi-factor authentication at the front door. The concept was to ensure that we knew and controlled who was accessing our VDI infrastructure in this public cloud. We felt that we had secured the 'front door' to the environment as much as possible without really impacting the end user experience.

The Challenge

The challenge in front of us was how to ensure that there was sufficient security on the VDI instances to protect Michelin resources while still enabling end users to connect to what they needed in order to perform their job function. In addition, we also needed to think about containment, so that east-west flows (VM to VM inside the cluster) could not happen, whether from malicious activity, a virus or just because.

This solution needed to be in place before we had so many users on our VDI infrastructure that implementing it would disrupt their work. To be clear, this security layer should have been thought about at the beginning and been part of the initial deployment of our VDI infrastructure. Unfortunately, we don't always think about security first...

Options

Luckily we are not the first organization to be in this position, so there are options. The two that I will focus on here are Azure Network Security Groups (NSG) and VMware NSX Cloud, as both appear to meet the need.

Azure NSG is a cloud native option that should allow us to control flows at the Virtual Machine level within the VDI infrastructure. It is already a standard tool used by our cloud competency team. It is also already controlled/managed through automation.

VMware NSX Cloud would allow us to extend our current on-premises management plane to our Azure VDI instance through the deployment of a Cloud Service Manager and a Public Cloud Gateway. Enabling this extension to the public cloud allows us to leverage existing on-premises data center objects already defined in our NSX management platform and use them with our VDI platform in Azure.

We attempted to put NSGs at the VM level but ran into issues each time. This could be from a lack of understanding, not knowing the flows required, technical challenges, all or none of the above. Whatever the reason, this was abandoned as an option and the NSGs were deployed at the network interface level instead.

We decided to design and install a Proof of Concept (PoC) using NSX Cloud on our INDUS VDI platform in Azure in hopes it would be something we could layer on top of NSG and provide more security.

Design

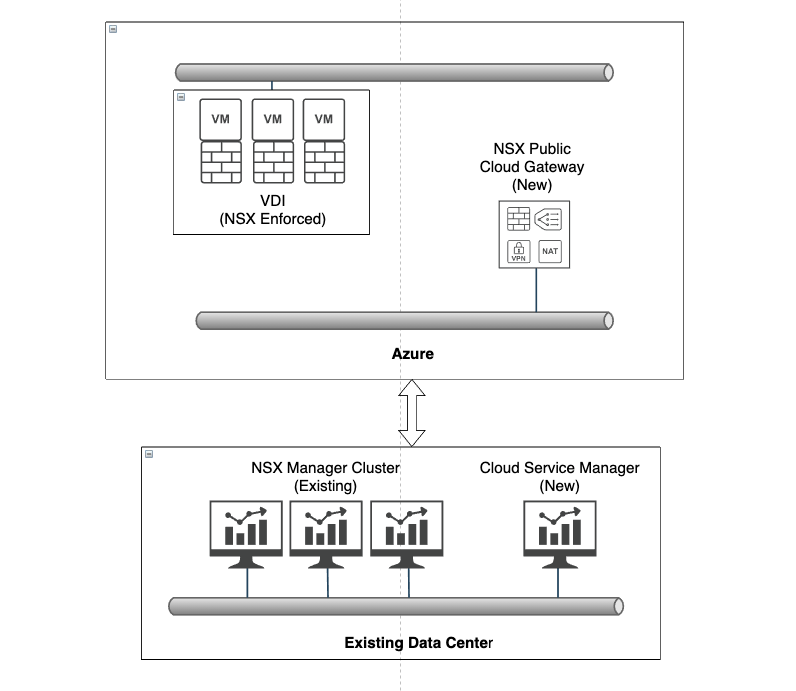

The design for NSX is fairly straightforward, assuming you already have an NSX Management platform. Our oversimplified design looks like this:

By adding a Cloud Service Manager (CSM) at our local data center and a Public Cloud Gateway (PCG) in Azure, we can manage our VDI environment in Azure using our NSX Management platform in the local data center (noted by (New) in the above diagram). At the time of writing, having the CSM in Azure was not possible, but I have heard it's on the roadmap and that soon you should be able to provision both the CSM and PCG directly in Azure if that is what you need/want.

Target Use Case for PoC

Our initial target use case for this PoC is external users that are not in our current trusted partner ecosystem. Maybe it's a temporary contractor or a maintenance support person. They are not part of our normal partner ecosystem but we still need to give them access to either a specific application or a desktop with restricted access. This seemed like a simple enough use case to test NSX Cloud with: provision an external user with a VDI desktop, then layer on the appropriate security controls to ensure that the user can only access the host system(s) required, and prevent any east-west movement within the solution.

For our PoC we will create a pool of virtual machines and some dedicated ones. The test users accessing these VMs need access to one internal platform on specified ports. This access should be allowed; all other inbound (North-South) traffic (connection attempts) should be denied, as should any connection attempt from VM to VM (East-West) within the pool. This control will be enforced by the NSX agent that is installed on each VM and managed by the NSX Manager platform in the existing data center.
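The intent of that policy can be sketched as a small decision function. This is only an illustration of the rule logic described above, not how NSX evaluates or stores rules; the pool subnet, the internal platform address, the port list, and the names `Flow` and `decide` are all hypothetical placeholders.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network

# Hypothetical values: none of these come from the real environment.
POOL_NET = ip_network("10.10.0.0/24")          # VDI desktop pool
INTERNAL_PLATFORM = ip_address("10.20.5.10")   # the one permitted internal host
ALLOWED_PORTS = {443, 8443}                    # the "specified ports"


@dataclass(frozen=True)
class Flow:
    src: str
    dst: str
    port: int


def decide(flow: Flow) -> str:
    """Return the policy decision for a single connection attempt."""
    src, dst = ip_address(flow.src), ip_address(flow.dst)
    # East-west: both endpoints are desktops in the pool, always denied.
    if src in POOL_NET and dst in POOL_NET:
        return "deny (east-west)"
    # The single permitted flow: desktop to the internal platform on allowed ports.
    if src in POOL_NET and dst == INTERNAL_PLATFORM and flow.port in ALLOWED_PORTS:
        return "allow"
    # Everything else falls through to the default deny.
    return "deny (default)"


if __name__ == "__main__":
    for f in (
        Flow("10.10.0.5", "10.20.5.10", 443),   # desktop to permitted platform
        Flow("10.10.0.5", "10.10.0.6", 3389),   # desktop to desktop
        Flow("10.10.0.5", "10.20.5.11", 443),   # desktop to another internal host
    ):
        print(f, "->", decide(f))
```

Checking the east-west case first means a desktop can never reach another desktop, even if a later allow rule were written too broadly.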

Preparation

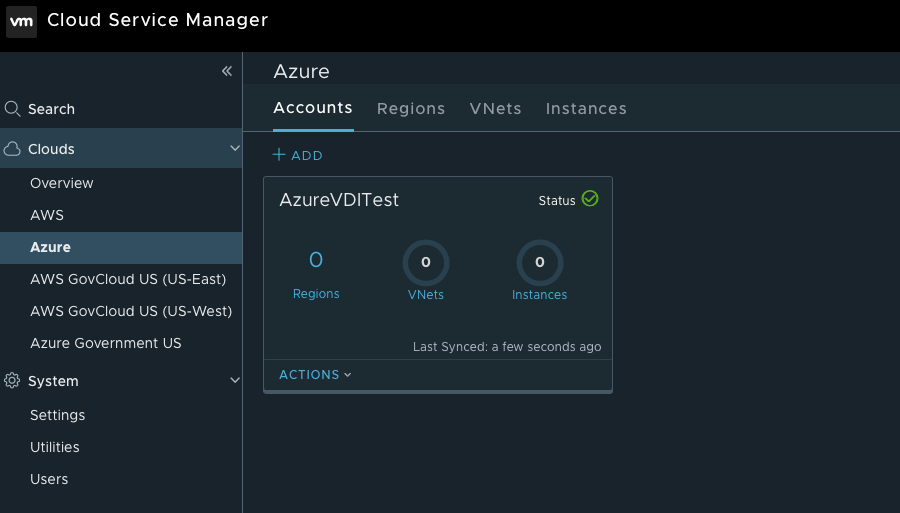

To get the environment ready we first had to deploy a CSM, which we then paired with our lab NSX Manager platform. Deployment of the CSM was fairly straightforward and this is what it looks like prior to having a PCG deployed:

Next we needed to generate a service principal and roles in our Azure tenant, and this is where things got...interesting. The service principal is needed because the CSM deploys the PCG to the cloud environment, which in our case, as I mentioned, is Azure. VMware has a good document describing the steps (located here) but there are some key items, discovered through trial and error, that I think the document assumes. In order to save someone else the same pain I experienced, here are some tips:

- Ensure you have loaded Az.Accounts or you could see an error message when running Enable-AzureRmAlias in PowerShell.

- Make sure your account has the right privileges to be able to create a service principal and roles.

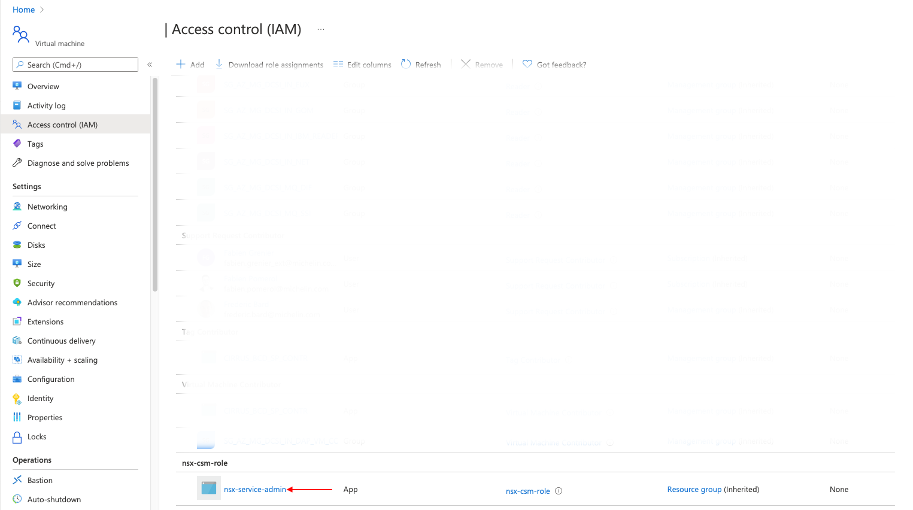

Creating the service principal and the roles required for deployment of the PCG was fairly straightforward. Here you can see the service principal nsx-service-admin noted by the red arrow:

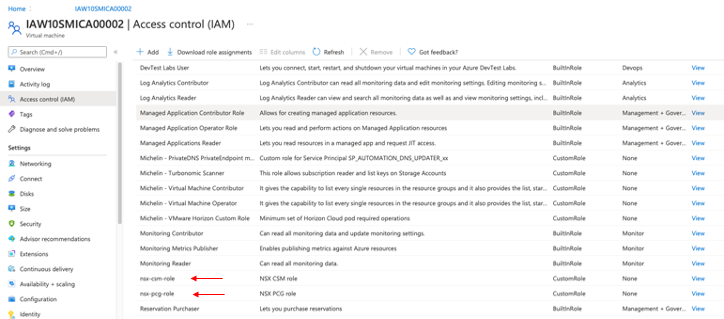

The roles created were straightforward as well and are shown below and noted by the red arrows.

Next we had to link our CSM with our Azure Subscription so that we could deploy the PCG. An interesting point to note is that the initial call from the CSM to Azure is via login.microsoftonline.com. (WARNING: Firewalls that do HTTPS inspection may present a problem for you.) For security reasons we limited the access of the service principal and did not allow it at the subscription level but only on specified network groups. The results were not good, as shown below:

We could connect to Azure but we could not see any VNets or Instances. Restricting access below the subscription level appears not to have been a good idea. According to VMware:

"You cannot downgrade the permissions to lower levels. We require all of them in order to perform operations in NSX Managed VNETs. We don’t stray into any other non-managed VNETs so the permission scope is constrained to what the CSM is managing.

Given that we enforce security, we need the ability to perform CRUD operations on security groups, update VMs, etc and for the CSM we need to be able to deploy the PCG and update it so we need the ability to perform CRUD operations on VMs, copy VHDs, etc.

These permissions are as granular and restrictive as we can get for the solution to function."
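The general scoping behaviour can be illustrated with a small sketch. Azure role assignments apply at a scope (subscription, resource group, or resource) and are inherited by everything beneath that scope, but not by siblings or parents. The snippet below models that inheritance with plain string matching on resource IDs. The subscription ID, the resource group names, and the `covers` helper are hypothetical, and it does not attempt to reproduce which calls the CSM actually makes.

```python
# Hypothetical identifiers, for illustration only.
SUB = "/subscriptions/00000000-0000-0000-0000-000000000000"
NETWORK_RG = f"{SUB}/resourceGroups/rg-network"
VNET = f"{NETWORK_RG}/providers/Microsoft.Network/virtualNetworks/vnet-vdi"
VDI_VM = f"{SUB}/resourceGroups/rg-vdi/providers/Microsoft.Compute/virtualMachines/vdi-001"


def covers(assignment_scope: str, resource_id: str) -> bool:
    """True if a role assignment at assignment_scope is inherited by resource_id.

    Resource IDs are compared case-insensitively; a scope covers itself and
    anything nested below it.
    """
    scope = assignment_scope.rstrip("/").lower()
    target = resource_id.rstrip("/").lower()
    return target == scope or target.startswith(scope + "/")


if __name__ == "__main__":
    for name, target in (("vnet", VNET), ("vdi vm", VDI_VM), ("subscription", SUB)):
        print(f"rg-network assignment covers {name}: {covers(NETWORK_RG, target)}")
    print(f"subscription assignment covers vdi vm: {covers(SUB, VDI_VM)}")
```

In this model an assignment on one resource group reaches neither VMs in another resource group nor the subscription itself, which is the kind of gap a subscription-level assignment closes.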

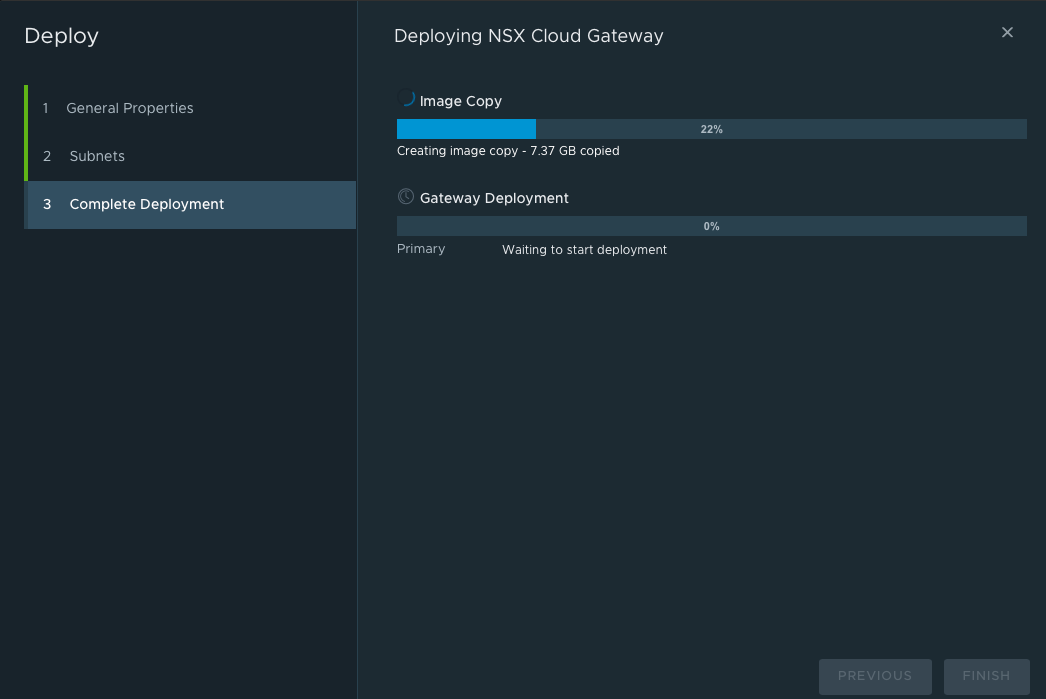

So, it looks like we need to either leave the permissions the way the scripts create them or set them manually. We chose to set them manually, adding permissions each time we encountered an issue. We eventually got to the point of being able to copy the image as shown below:

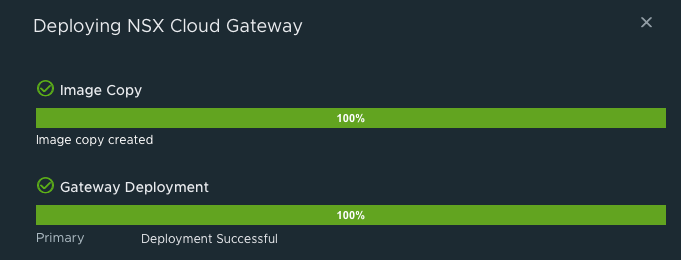

The next problem we encountered was during the Gateway deployment (the image copy was successful). This continually failed because the service principal and roles still did not have the right access at the subscription level. We finally had to give up and just allow the script to create the service principal and roles at the subscription level. This allowed us to successfully deploy a gateway as shown below:

The next step was to integrate NSX Tools with our master image for creating desktops in our VDI environment.

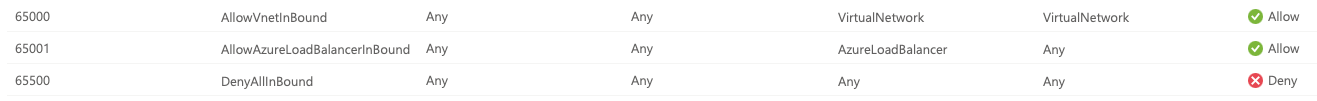

Interestingly enough, when we deployed the PCG to our environment, any VM that was not marked as User Managed had a new NSG created and applied to it. This new NSG was essentially the old one stripped of its rules, with a new Deny All rule added at Priority 4096. The accompanying screenshot shows the NSG after we removed the Priority 4096 rule (I forgot to capture an original screenshot that included it).
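To see why this took the desktops offline, recall that Azure evaluates NSG rules in priority order, lowest number first, and stops at the first match; 4096 is the highest priority number a custom rule can have, and the built-in default rules sit above 65000. The sketch below is a deliberately simplified model that matches on port only. The rule names and ports are hypothetical; only the Deny All at Priority 4096 comes from what we observed.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Rule:
    name: str
    priority: int          # lower number is evaluated first
    port: Optional[int]    # None means "any port"
    action: str            # "Allow" or "Deny"


def evaluate(rules: List[Rule], port: int) -> str:
    """Return the action of the first rule, in priority order, matching port."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if rule.port is None or rule.port == port:
            return f"{rule.action} by {rule.name}"
    return "Deny (no rule matched)"


# Hypothetical "before" NSG: one custom allow rule plus a stand-in for a
# built-in default allow rule.
before = [
    Rule("allow-desktop-access", 200, 3389, "Allow"),
    Rule("default-allow-vnet", 65000, None, "Allow"),
]

# Simplified "after" NSG: custom rules stripped, Deny All added at 4096.
after = [
    Rule("deny-all", 4096, None, "Deny"),
    Rule("default-allow-vnet", 65000, None, "Allow"),
]

if __name__ == "__main__":
    print("before:", evaluate(before, 3389))
    print("after: ", evaluate(after, 3389))
```

Because 4096 still sorts ahead of every default rule, the Deny All wins even over traffic the defaults would otherwise have allowed.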

We tried to recover the VDI service by undeploying the PCG but that did not change the NSGs assigned to the virtual network interface cards (vNICs). We had to manually detach the 'bad' NSG from the vNIC and manually attach the correct NSG for each VM in order to restore the service.
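We did this by hand, but a clean-up like this lends itself to a reviewable plan built before anything is changed. The following is a minimal sketch of that idea, assuming you can export the current vNIC-to-NSG mapping and know the intended one; the `plan_nsg_fix` helper and all vNIC and NSG names are hypothetical, and the sketch only computes the plan rather than calling Azure.

```python
from typing import Dict, List, Tuple


def plan_nsg_fix(
    current: Dict[str, str], expected: Dict[str, str]
) -> List[Tuple[str, str, str]]:
    """List (vnic, nsg_to_detach, nsg_to_attach) for every vNIC that has drifted.

    vNICs with no known expected NSG are skipped rather than guessed at.
    """
    plan = []
    for vnic, attached in sorted(current.items()):
        wanted = expected.get(vnic)
        if wanted is None or wanted == attached:
            continue
        plan.append((vnic, attached, wanted))
    return plan


if __name__ == "__main__":
    # Hypothetical inventory after the PCG deployment.
    current = {
        "vdi-001-nic": "nsx-generated-nsg",
        "vdi-002-nic": "nsx-generated-nsg",
        "vdi-003-nic": "vdi-pool-nsg",       # marked User Managed, untouched
    }
    expected = {
        "vdi-001-nic": "vdi-pool-nsg",
        "vdi-002-nic": "vdi-pool-nsg",
        "vdi-003-nic": "vdi-pool-nsg",
    }
    for vnic, detach, attach in plan_nsg_fix(current, expected):
        print(f"{vnic}: detach {detach}, attach {attach}")
```

Reviewing the plan first makes it easier to confirm that only the affected vNICs are touched.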

In Summary

It has been challenging to get NSX Cloud deployed and working in our cloud VDI environment but, to be fair, I feel that most of the challenges are due to our internal processes.

In Part 2, which I hope to publish within a couple of weeks, I will detail how (if) we worked through these issues, what they were, and how we provided more security on our cloud VDI environment along with the lessons we learned.

Special Thanks

A special thanks to Alexis Plantin and Okan Aslaner for their help with Azure, Mike Wilder for his help with NSX, and Jean-Francois Couturier and Alexis Mimran from VMware for their guidance and patience.