Vulnerability: at the springs of the Styx

What a vulnerability is, and why it is so crucial for cyber risk management.

You said vulnerability?

Vulnerabilities are weaknesses that threat actors can exploit to affect the availability, confidentiality or integrity of a network or a system (middleware, API, application, etc.). Although they mainly result from the inattention, inexperience, incompetence or negligence of the people in charge of designing, building or maintaining cyberspace components, they can sometimes be introduced deliberately, out of malice. In this specific case, they are called backdoors. Like other software bugs, vulnerabilities are part of technical debt.

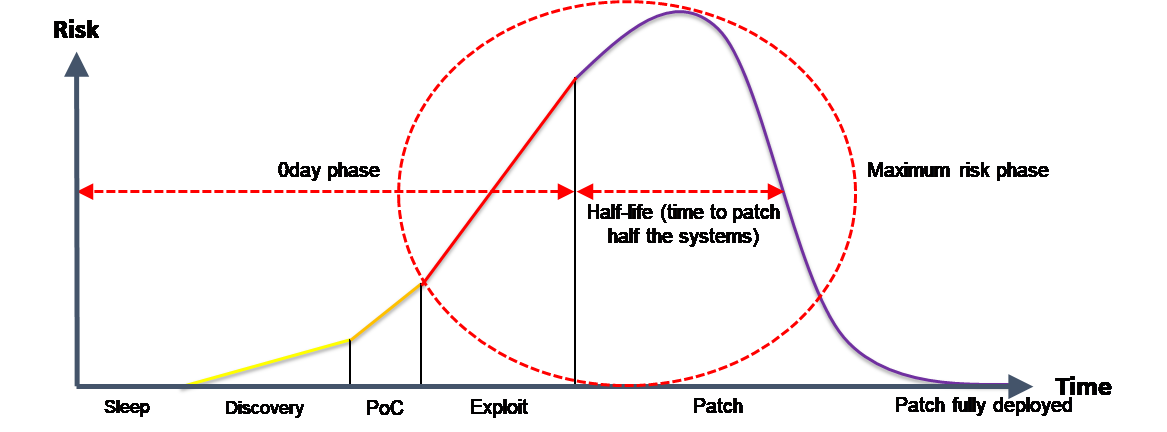

A vulnerability lifecycle is usually divided into five phases:

- Sleep: the vulnerability is there but unknown.

- Discovery: the vulnerability is known and studied.

- Proof of Concept: the vulnerability exploitation has been demonstrated.

- Exploit: the vulnerability is exploited in the wild. Workarounds may be available to mitigate it.

- Patch: the vulnerability can be definitively fixed, generally through a patch.
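The five phases above can be modeled as a simple ordered state machine. This is a minimal sketch that assumes a strictly linear progression, which real vulnerabilities do not always follow (a responsibly disclosed flaw is often patched before any exploitation in the wild, for instance); the `Phase` enum and the `advance` function are hypothetical names chosen for illustration.

```python
from enum import IntEnum


class Phase(IntEnum):
    """Vulnerability lifecycle phases, in the order described above."""
    SLEEP = 1             # present but unknown
    DISCOVERY = 2         # known and studied
    PROOF_OF_CONCEPT = 3  # exploitation demonstrated
    EXPLOIT = 4           # exploited in the wild; workarounds may exist
    PATCH = 5             # can be definitively fixed, generally by a patch


def advance(phase: Phase) -> Phase:
    """Return the next phase, assuming a strictly linear lifecycle.

    PATCH is treated as terminal in this simplified model.
    """
    if phase is Phase.PATCH:
        return phase
    return Phase(phase + 1)


# Walk a vulnerability through the whole lifecycle.
history = [Phase.SLEEP]
while history[-1] is not Phase.PATCH:
    history.append(advance(history[-1]))
print(" -> ".join(p.name for p in history))
```

Using an `IntEnum` keeps the phases ordered, so comparisons such as `phase >= Phase.EXPLOIT` remain meaningful if the model is extended.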

Surprisingly (or not), as shown by Qualys CTOs (Gerhard ESCHELBECK in 2004 and Wolfgang KANDEK in 2009), the persistence of a vulnerability in an information system during the Patch phase follows an exponential decay law, with a half-life that depends on the vulnerability's criticality and on the security maturity of the IS/IT team (clearly better in the Services and Finance industries than in Manufacturing).
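The decay law can be written N(t) = N0 × 2^(−t/T), where N0 is the number of vulnerable systems when the Patch phase starts and T is the half-life. The sketch below computes the remaining unpatched fraction and the time needed to reach a target fraction; the 30-day half-life is an arbitrary illustrative value, not a figure taken from the Qualys studies, and the function names are hypothetical.

```python
import math


def remaining_fraction(days: float, half_life_days: float) -> float:
    """Fraction of initially vulnerable systems still unpatched after `days`.

    Exponential decay: N(t) / N0 = 2 ** (-t / T), with T the half-life.
    """
    if half_life_days <= 0:
        raise ValueError("half-life must be positive")
    return 2 ** (-days / half_life_days)


def days_to_reach(target_fraction: float, half_life_days: float) -> float:
    """Days until the unpatched fraction falls to `target_fraction` (0 < f <= 1)."""
    if not 0 < target_fraction <= 1:
        raise ValueError("target fraction must be in (0, 1]")
    if half_life_days <= 0:
        raise ValueError("half-life must be positive")
    return -half_life_days * math.log2(target_fraction)


# Hypothetical half-life of 30 days, chosen only for illustration.
HALF_LIFE_DAYS = 30.0
for t in (0, 30, 60, 90):
    print(f"day {t:3}: {remaining_fraction(t, HALF_LIFE_DAYS):.1%} still vulnerable")
print(f"5% remaining after {days_to_reach(0.05, HALF_LIFE_DAYS):.0f} days")
```

With this assumed half-life, 12.5% of the systems are still vulnerable after 90 days, and getting down to 5% takes roughly 130 days. Since `days_to_reach` is proportional to the half-life, a more mature team with a shorter half-life shrinks both figures in the same proportion.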

A 0Day is a vulnerability that is exploited while remaining generally unknown to the community (particularly to the software owner), and therefore unfixed. Because of their huge damage potential, 0Day exploits are likened to cyber weapons and are traded on three kinds of markets:

- The white market covers the business built around penetration testing and bug bounties. The white-hat community is rewarded for finding vulnerabilities and allowing defenders (generally software owners) to fix them. Responsible disclosure is the standard model in this environment.

- The grey market is made up of brokers who pay considerably more than defenders and resell vulnerabilities, mainly to ‘legal’ offenders (generally state-sponsored organizations and security forces). In this environment, political allegiance is a crucial question. Zerodium is the leader of this market.

- The black market is the kingdom of illegal offenders (mainly cybercriminals, although some state-sponsored organizations also use it). It is undoubtedly the most profitable environment, but also a dangerous and lawless one.

The nature of a vulnerability can be classified in a two-dimensional space, with 3 levels and 2 scales:

- Definition level: the weakness lies in the design (so all the implementations based on it are vulnerable).

    - At a global scale, it can lie in a standard.

    - At a local scale, it can lie in a custom specification.

- Implementation level: the weakness lies in the code (so all the instances running it are vulnerable).

    - At a global scale, it can lie in widely shared software or a widely shared library.

    - At a local scale, it can lie in custom code.

- Instantiation level: the weakness lies in the settings.

    - At a global scale, it can lie in a widely shared “best” practice or a default setting (e.g. admin/admin).

    - At a local scale, it can lie in a custom setting.

Obviously, a local instantiation vulnerability has far less impact than a global definition one. Here are a few examples illustrating the differences:

- POODLE [CVE-2014-3566] was a global definition vulnerability in the SSLv3 standard (RFC-6101). The TLS variant of POODLE [CVE-2014-8730], on the other hand, must be considered a global implementation vulnerability, limited to the F5 Networks SSL library, which did not comply with the TLSv1.0 standard (RFC-2246).

- HEARTBLEED [CVE-2014-0160] was a global implementation vulnerability in the OpenSSL library, which is used by many solutions.

- Enabling SSLv2 (a very weak version of the SSL/TLS standard) on a web server, although it has been officially deprecated since March 2011 and is disabled in the Apache default configuration, must be considered a local instantiation vulnerability.
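The two-dimensional classification can be encoded as a small data model. This sketch tags the examples above with their level and scale, then sorts them with a hypothetical "reach" key that ranks scale before level. That ordering is an assumption made for illustration, not a standard scoring method such as CVSS, and all the names in the code are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Level(Enum):
    DEFINITION = "definition"          # weakness in the design
    IMPLEMENTATION = "implementation"  # weakness in the code
    INSTANTIATION = "instantiation"    # weakness in the settings


class Scale(Enum):
    GLOBAL = "global"  # standard, widely shared library, default setting
    LOCAL = "local"    # custom specification, code or setting


@dataclass(frozen=True)
class Vulnerability:
    name: str
    level: Level
    scale: Scale


# Hypothetical ranks: a higher value means a wider potential reach.
SCALE_RANK = {Scale.GLOBAL: 2, Scale.LOCAL: 1}
LEVEL_RANK = {Level.DEFINITION: 3, Level.IMPLEMENTATION: 2, Level.INSTANTIATION: 1}


def reach_key(v: Vulnerability) -> tuple[int, int]:
    """Sort key ranking scale first, then level (an assumption, not a standard)."""
    return (SCALE_RANK[v.scale], LEVEL_RANK[v.level])


examples = [
    Vulnerability("SSLv2 enabled on a web server", Level.INSTANTIATION, Scale.LOCAL),
    Vulnerability("HEARTBLEED [CVE-2014-0160]", Level.IMPLEMENTATION, Scale.GLOBAL),
    Vulnerability("POODLE TLS variant [CVE-2014-8730]", Level.IMPLEMENTATION, Scale.GLOBAL),
    Vulnerability("POODLE [CVE-2014-3566]", Level.DEFINITION, Scale.GLOBAL),
]

# Widest potential reach first (sorted() is stable, so ties keep their order).
ranked = sorted(examples, key=reach_key, reverse=True)
for v in ranked:
    print(f"{v.scale.value:<6} {v.level.value:<14} {v.name}")
```

Ranking scale first reflects the idea that a flaw in a widely shared library, such as HEARTBLEED, can reach more systems than a flaw in a single custom specification; other orderings are defensible.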

Where are we headed?

It is difficult to estimate the global trend for all kinds of vulnerabilities, because:

- Local vulnerabilities are rarely inventoried.

- Implementation vulnerabilities can be partially detected with SAST (Static Application Security Testing) solutions, but few software owners use this kind of tool. Some SAST vendors publish statistics, but they are probably biased.

- Global vulnerabilities can mostly be detected with vulnerability scanners such as DAST (Dynamic Application Security Testing) solutions, provided they are inventoried and publicly known in advance: the MITRE CVE (Common Vulnerabilities and Exposures) list is a well-known, reliable and widely used inventory of global vulnerabilities. Some vulnerability scanner vendors publish statistics, but they are probably biased.

- Only penetration tests performed by skilled hackers can detect every kind of vulnerability on a given instance, but pentest reports are often confidential, due to responsible disclosure (white market rules) or to non-disclosure agreements (grey and black markets). Some threat intelligence providers publish statistics about dark web and black market offers, but they are probably biased.

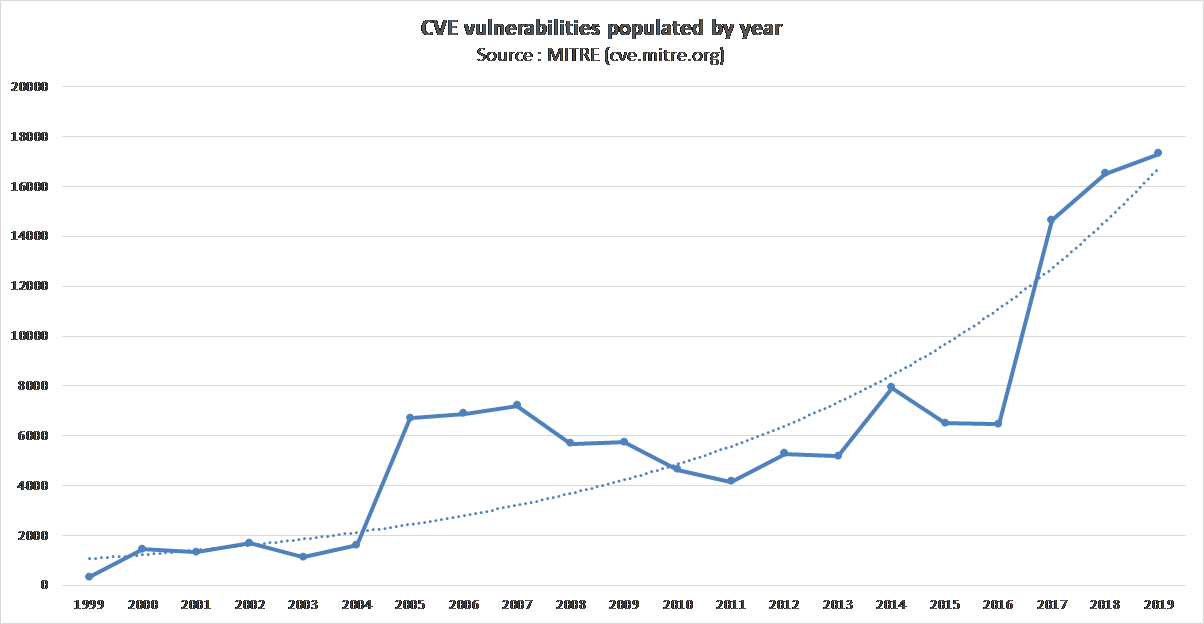

So, one of the most reliable ways to estimate the vulnerability trend is to study the evolution of the number of published CVEs. The trend curve has an exponential shape, with a CAGR (Compound Annual Growth Rate) of +14% between 2000 and 2019. This is huge, but how can it be explained?

- MITRE is a nonprofit organization, and CVE has been well known and considered the reference for a long time. So, we can rule out statistical manipulation or a specific hype phenomenon.

- There are no clear, tangible signs that today's developers are worse than yesterday's, producing more vulnerabilities per line of code than before. Technologies and programming languages evolve, but humans remain the same. Furthermore, there are signs suggesting that the biggest software companies (GAFAM: Google, Amazon, Facebook, Apple, Microsoft) have acknowledged cybersecurity as a strategic topic for securing their future: they train their employees and raise their customers' awareness, they develop innovative security technologies and standards (e.g. FIDO Alliance), and they support or lead the white-hat community (e.g. Google Project Zero). So, we can rule out a degradation of software quality at a global scale.

- The digital revolution is mainly a “softwarization” of all the business processes that were not yet software-driven (“Software is eating the world”). This transformation generates an obvious inflation of the quantity of software, which may partially explain the rise in vulnerabilities. This phenomenon is probably amplified by code reuse and sedimentation.
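To give a sense of scale to the +14% CAGR quoted above: CAGR = (end / start)^(1 / years) − 1, so a constant annual rate compounds multiplicatively. The sketch below, with hypothetical helper names, derives the total growth factor and the doubling time implied by that rate over the 19 compounding years between 2000 and 2019; it works from the rate alone, since the underlying CVE counts are not given here.

```python
import math


def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound Annual Growth Rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and duration must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def growth_factor(rate: float, years: float) -> float:
    """Total multiplication factor implied by a constant annual rate."""
    return (1 + rate) ** years


def doubling_time(rate: float) -> float:
    """Years needed to double at a constant positive annual rate."""
    if rate <= 0:
        raise ValueError("rate must be positive")
    return math.log(2) / math.log1p(rate)


RATE = 0.14  # CVE CAGR quoted in the text for 2000-2019
YEARS = 19   # compounding periods between 2000 and 2019

factor = growth_factor(RATE, YEARS)
print(f"total growth factor: x{factor:.1f}")
print(f"doubling time: {doubling_time(RATE):.1f} years")
print(f"round trip CAGR: {cagr(1.0, factor, YEARS):.1%}")
```

At +14% per year, the CVE count is multiplied by roughly 12 over the period and doubles about every 5.3 years, which is what gives the curve its exponential shape.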

Accordingly, we can consider that vulnerabilities are like a natural resource:

- They occur spontaneously in software: the more software you have, the more vulnerabilities you can get. This is analogous to an element present in the earth’s crust (e.g. Cu, Zn, Li…).

- Until they are discovered (Sleep phase), they are a potential resource. You must survey them (Discovery phase) to convert them into a reserve resource and develop them (PoC & Exploit phases) to get an actual resource. In the end, you deplete the resource when you fix them (Patch phase).

- We can even consider vulnerabilities a renewable resource, as they are spontaneously replenished by software production (new code) and maintenance (old code).

So, if we consider vulnerabilities as a natural resource, the actual resource volume depends both on the potential resource volume (itself related to the quantity of software) and on the global investment in survey and development. However, this investment is hard to estimate, because of the fragmentation of the white market and the opacity of the grey and black markets.

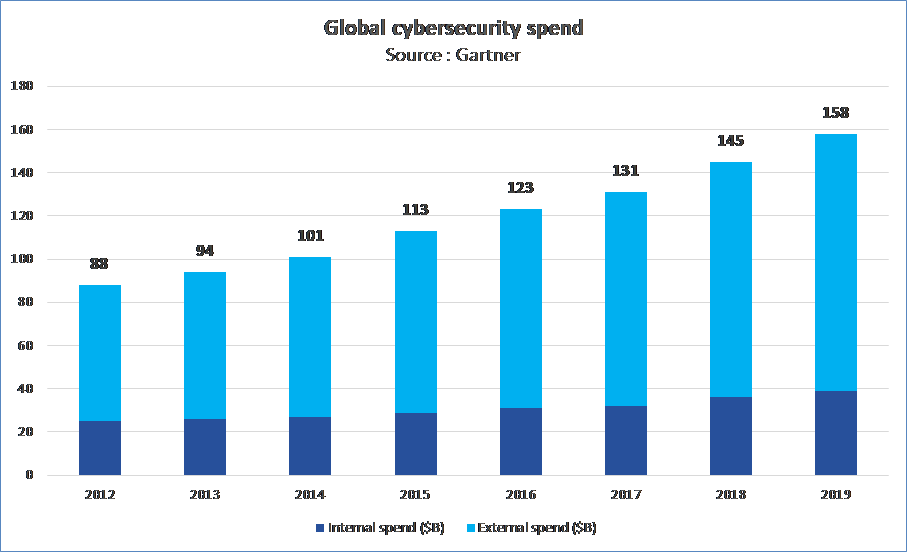

Nevertheless, it is widely agreed that threat actors constantly strive to find more vulnerabilities in order to discreetly penetrate IT systems. This endeavor creates an equal and opposite effort by legal organizations to develop and/or acquire cyber security products, solutions and services that minimize or cancel the effects of cyberattacks as effectively as possible. Global spending on cyber security products, solutions and services thus grew at an 8.8% CAGR between 2012 and 2019 (reaching $158 billion according to GARTNER), while the number of CVEs grew at an 18.5% CAGR over the same period.

So, since security spending, taken here as a proxy for the investment in vulnerability survey, grew about half as fast as the number of CVEs, we can finally suppose that half of the vulnerability growth is due to software inflation, and the other half to the increase in survey investment.
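The "half and half" estimate can be made explicit by working in log space, where compound growth rates add up instead of multiplying. This sketch assumes, as the reasoning implicitly does, that CVE growth is the product of software-volume growth and survey-investment growth, and that survey investment grows at the same rate as total security spending; both are modeling assumptions rather than measured facts.

```python
import math

CVE_CAGR = 0.185       # growth of the number of CVEs, 2012-2019 (from the text)
SPENDING_CAGR = 0.088  # growth of global security spending, 2012-2019 (from the text)

# Assumed model: (1 + g_cve) = (1 + g_software) * (1 + g_investment),
# with survey investment assumed to grow like total security spending.
# Taking logs turns the product into a sum, so the shares can be compared directly.
log_total = math.log1p(CVE_CAGR)
log_investment = math.log1p(SPENDING_CAGR)
log_software = log_total - log_investment

investment_share = log_investment / log_total
software_share = log_software / log_total
implied_software_cagr = math.expm1(log_software)

print(f"share due to survey investment: {investment_share:.1%}")
print(f"share due to software inflation: {software_share:.1%}")
print(f"implied software-driven CAGR: {implied_software_cagr:.1%}")
```

The investment term accounts for about 50% of the log-growth, and the residual attributed to software inflation corresponds to a CAGR of roughly 8.9%, very close to the spending growth itself.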

So... what else?

Risks are usually modeled by the classical equation RISK = IMPACT x OCCURRENCE, where the IMPACT factor represents the damage related to the incident (generally the destroyed business value), and the OCCURRENCE factor represents the probability of the incident. As modern cyber risks are mostly caused by human malice rather than misfortune, the cyber risk OCCURRENCE can be broken down into two sub-factors:

- The THREAT, which represents the source of malice.

- The VULNERABILITY, which represents the security flaws of the information system that the threat exploits to perform cyberattacks.

The cyber risk equation then becomes RISK = IMPACT x THREAT x VULNERABILITY. This model is close to the classical fire triangle, where the IMPACT is the FUEL, the VULNERABILITY is the OXYGEN (oxidizing agent), and the THREAT is the HEAT (activation energy).
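The multiplicative model can be sketched as follows. Normalizing each factor to [0, 1] is an assumption made for illustration (risk methodologies use their own scales), and `RiskFactors` as well as the scores below are hypothetical. The product captures the fire triangle analogy: driving any single factor to zero removes the risk, and halving the VULNERABILITY halves the risk whatever the other two factors are.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class RiskFactors:
    """RISK = IMPACT x THREAT x VULNERABILITY, each factor scaled to [0, 1] (assumed scale)."""
    impact: float         # business value destroyed if the incident happens
    threat: float         # strength of the malicious source
    vulnerability: float  # security flaws the threat can exploit

    def __post_init__(self) -> None:
        # Reject scores outside the assumed [0, 1] scale.
        for name in ("impact", "threat", "vulnerability"):
            value = getattr(self, name)
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{name} must be in [0, 1], got {value}")

    @property
    def risk(self) -> float:
        return self.impact * self.threat * self.vulnerability


# Hypothetical scores, for illustration only.
baseline = RiskFactors(impact=0.8, threat=0.6, vulnerability=0.5)
hardened = replace(baseline, vulnerability=0.25)    # vulnerability halved
no_exposure = replace(baseline, vulnerability=0.0)  # one side of the triangle removed

for label, factors in (("baseline", baseline), ("hardened", hardened), ("no exposure", no_exposure)):
    print(f"{label:<12} risk = {factors.risk:.3f}")
```

`dataclasses.replace` builds a new instance through `__init__`, so the range check in `__post_init__` also applies to the modified copies.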

Each of these risk factors is generally owned by a different entity of the organization:

- IMPACT is owned by the business entities, which alone can estimate the damage related to a cyber incident.

- THREAT is owned by the security entity, which alone can assess what the dangers are.

- VULNERABILITY is owned by the various IS/IT operational entities, which alone can deal with it.

Cybersecurity mainly consists of reducing each of these three factors. Obviously, due to the fast and massive digitalization of business, the IMPACT factor will not decrease, quite the contrary. The THREAT being an external factor, we have very few ways to affect it, and it has been increasing strongly for two decades (this will be the subject of another post). So, in the end, the only reliable means for an organization to deal with its cyber risks is to seriously manage its VULNERABILITY. And in a context where IMPACT and THREAT are growing massively, it is important to:

- Generate as few vulnerabilities as possible, by training people and using modern security practices such as DevSecOps and Agile Security.

- Identify existing vulnerabilities by regularly analyzing all IT components with SAST/DAST/vulnerability scanner tools and penetration tests.

- Quickly fix identified vulnerabilities thanks to Evergreen practices.

And then you may avoid facing Cerberus at the gates of the Underworld ;)