Becoming Data Driven: Lessons Learned (Part 2)

This article follows an earlier post, "How to become a Data Driven Company in 10 simple steps", which describes the steps we took to launch our data-driven initiative. Reflecting on our journey, there are some takeaways: things we could have done differently and areas we are still exploring.

You'd better be an industrial company

One of our biggest strengths when implementing a data platform was being an industrial company. We excel at making tyres, and process rigor is built into our DNA. It's in our culture to create repeatable processes and maintain quality over time.

This industrial culture was a strong advantage when building our data platform, as it let us quickly scale our onboarding and component-creation processes.

I believe a media company, for example, would have found it harder to scale the platform as fast as we did, though it may have had other advantages that we lack.

Treat metadata as data

In the original article, we described creating a network of data stewards and cataloguing our data in an Excel spreadsheet. Along the way, we adopted an enterprise data cataloguing tool that hasn't achieved the level of adoption we were hoping for.

When we catalogue our data, we describe the table, its columns and its owner, and link it to the enterprise data model. The metadata of our data is a mix of automatic scans of our different data lakes and declarative documentation.

Something I feel we missed was treating all this metadata as data. The industry seems to be heading in this direction with the push-based model advocated by DataHub, where all metadata is made available through APIs or streams.

In this model, as we already do with data, every team producing data would be responsible and accountable for its metadata, which would be published to the data lake so that it can be analysed and visualised.
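To make the push-based idea concrete, here is a minimal sketch, assuming a hypothetical metadata record shape (`DatasetMetadataEvent`) and a plain Python list standing in for a stream or a metadata table in the lake. It is not DataHub's API; it only shows a producing team emitting metadata as structured records that can then be queried like any other dataset.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class ColumnMetadata:
    name: str
    data_type: str
    description: str = ""


@dataclass
class DatasetMetadataEvent:
    """Hypothetical metadata record a producing team pushes alongside its data."""
    dataset: str
    owner: str
    description: str
    columns: list[ColumnMetadata] = field(default_factory=list)
    emitted_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        # asdict recurses into nested dataclasses, so columns become plain dicts
        return json.dumps(asdict(self), sort_keys=True)


def publish(event: DatasetMetadataEvent, sink: list[str]) -> None:
    """Append the serialised event to a sink (a stand-in for a stream or lake table)."""
    sink.append(event.to_json())


metadata_stream: list[str] = []
publish(
    DatasetMetadataEvent(
        dataset="sales.consolidated_sales",  # hypothetical dataset name
        owner="sales-data-team",             # hypothetical owning team
        description="Daily consolidated sales per plant",
        columns=[ColumnMetadata("plant_id", "string"), ColumnMetadata("amount", "decimal")],
    ),
    metadata_stream,
)

# Because metadata is now just data, it can be filtered and analysed like any dataset
records = [json.loads(line) for line in metadata_stream]
owned_by_sales = [r["dataset"] for r in records if r["owner"] == "sales-data-team"]
```

The key design choice is that the producer, not a central scanner, owns and emits the record, so ownership of metadata follows ownership of data.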

Another area we will be exploring is data lineage. This is a fast-moving field, and we are starting to see a standard emerge with OpenLineage, which aims to align lineage metadata producers and their consumers.
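As an illustration of what a lineage producer emits, the sketch below builds a dictionary shaped like an OpenLineage run event (`eventType`, `eventTime`, `run.runId`, `job`, `inputs`, `outputs`, `producer`, `schemaURL`). It deliberately avoids any client library. The job and dataset names, the `datalake` namespace and the producer URL are hypothetical, and the spec version in `schemaURL` is an assumption to check against what your lineage backend expects.

```python
import json
import uuid
from datetime import datetime, timezone


def build_run_event(event_type: str, job_namespace: str, job_name: str,
                    inputs: list[str], outputs: list[str], run_id: str) -> dict:
    """Build a dict shaped like an OpenLineage RunEvent (names are hypothetical)."""
    return {
        "eventType": event_type,  # e.g. "START", "COMPLETE", "FAIL"
        "eventTime": datetime.now(timezone.utc).isoformat(),
        "run": {"runId": run_id},  # the same UUID ties START and COMPLETE together
        "job": {"namespace": job_namespace, "name": job_name},
        "inputs": [{"namespace": "datalake", "name": n} for n in inputs],
        "outputs": [{"namespace": "datalake", "name": n} for n in outputs],
        "producer": "https://example.com/our-pipeline",  # hypothetical producer URI
        # Assumed spec version: check which one your backend expects
        "schemaURL": "https://openlineage.io/spec/2-0-2/OpenLineage.json#/definitions/RunEvent",
    }


run_id = str(uuid.uuid4())
args = ("corporate-data-lake", "consolidate_sales", ["raw.sales"], ["curated.consolidated_sales"])
start = build_run_event("START", *args, run_id=run_id)
complete = build_run_event("COMPLETE", *args, run_id=run_id)
payload = json.dumps(complete)  # what would be sent to a lineage backend
```

Because producers and consumers agree on this shape, any pipeline tool can emit lineage and any catalogue can read it.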

Engineering practice

I know some people in my organisation will read this, so please don't take it the wrong way 😉, but I don't feel we have invested enough in good engineering practices when building our various data products.

There are three reasons for this:

• The people building within the Corporate Data Lake come from a more traditional BI and analytics background, working with visual pipelines and SQL queries. This is quite far from developer culture, where practices such as clean code, refactoring and test-driven development have been established for some time.

• The tools we provide within the platform don't encourage good development practices. For example, pipelines in Data Factory can only be created with visual tools, not through code. Another example is data transformation steps created in Databricks notebooks, which don't offer the same developer experience as a traditional IDE.

• Being in the cloud cut us off from many of the on-premises tools that Michelin offers its developer community. For example, our main company source code repository is not accessible from the cloud, which forces us to use the tools offered within Azure.
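One small step towards developer practices is pulling transformation logic out of notebook cells into plain functions that a test runner such as pytest can exercise. The sketch below is hypothetical: `SaleLine`, `total_revenue_by_plant` and the rule that non-positive quantities are ignored are illustrative assumptions, not our actual pipeline.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SaleLine:
    plant_id: str
    quantity: int
    unit_price: float


def total_revenue_by_plant(lines: list[SaleLine]) -> dict[str, float]:
    """Aggregate revenue per plant, skipping lines with non-positive quantity."""
    totals: dict[str, float] = {}
    for line in lines:
        if line.quantity <= 0:
            continue  # assumed business rule: cancelled or returned lines are ignored
        totals[line.plant_id] = totals.get(line.plant_id, 0.0) + line.quantity * line.unit_price
    return totals


def test_total_revenue_by_plant() -> None:
    # A unit test pytest would discover; it documents the expected behaviour
    lines = [
        SaleLine("P1", 2, 10.0),
        SaleLine("P1", 1, 5.0),
        SaleLine("P2", 0, 99.0),
    ]
    assert total_revenue_by_plant(lines) == {"P1": 25.0}
```

The notebook can then simply import and call the function, while the logic itself is versioned, reviewed and tested like any other code.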

In discussions with other companies, we've found that our case is not isolated, and we are starting to see a shift in the industry. The current Thoughtworks Technology Radar recognises this trend in an interesting paragraph titled "Engineering rigor meets analytics and AI".

I also don't think we should fight against SQL. I appreciate that it can produce the most horrific and unmaintainable code I've ever seen, but it has the benefit of being well understood by a wide range of people and of working across different technologies. On this front, I believe a tool like dbt would greatly help us bring good engineering practices into the SQL world.
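dbt treats each SQL model as a versioned file and ships generic tests such as `unique` and `not_null`. The sketch below is not dbt; it reproduces the same idea with Python's built-in `sqlite3`, assuming a hypothetical `raw_customers` table: the transformation lives in a named SQL string, is materialised as a table, and is then checked by queries that count violating rows. Table and column names are interpolated directly, so they are assumed to be trusted identifiers.

```python
import sqlite3

# The SQL transformation, kept as a named and versionable unit (like a dbt model file)
STG_CUSTOMERS_SQL = """
SELECT DISTINCT customer_id, TRIM(customer_name) AS customer_name
FROM raw_customers
WHERE customer_id IS NOT NULL
"""


def build_model(conn: sqlite3.Connection, name: str, sql: str) -> None:
    """Materialise the SELECT as a table, similar in spirit to a dbt table model."""
    conn.execute(f"DROP TABLE IF EXISTS {name}")
    conn.execute(f"CREATE TABLE {name} AS {sql}")


def count_null_violations(conn: sqlite3.Connection, table: str, column: str) -> int:
    """Rows where the column is NULL; 0 means a not_null-style test passes."""
    return conn.execute(f"SELECT COUNT(*) FROM {table} WHERE {column} IS NULL").fetchone()[0]


def count_unique_violations(conn: sqlite3.Connection, table: str, column: str) -> int:
    """Values appearing more than once; 0 means a unique-style test passes."""
    sql = (f"SELECT COUNT(*) FROM (SELECT {column} FROM {table} "
           f"GROUP BY {column} HAVING COUNT(*) > 1)")
    return conn.execute(sql).fetchone()[0]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE raw_customers (customer_id INTEGER, customer_name TEXT)")
conn.executemany(
    "INSERT INTO raw_customers VALUES (?, ?)",
    [(1, "Alice"), (1, " Alice "), (2, "Bob"), (None, "Ghost")],
)
build_model(conn, "stg_customers", STG_CUSTOMERS_SQL)
```

The SQL stays readable for analysts, while the tests turn assumptions about the data into checks that run on every build.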

Data versioning

When we build a REST API, it's natural to support versioning, so that its schema can change over time while consumers are given a transition path.

This isn't a practice we have seen adopted when producing data in our different data lakes. It is, however, a must when schema changes are required without breaking consumers.

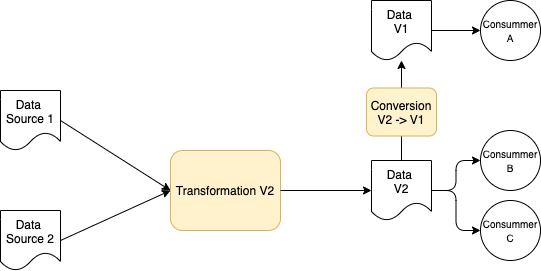

Following an API versioning strategy, we can propose the following pattern:

In this approach:

• A new data transformation, "Transformation V2", has been introduced

• Consumers B and C have migrated to the new format

• A new task, "Conversion V2 -> V1", converts the new format back to the old one

• This task supports consumer A, which can migrate at its own pace
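The "Conversion V2 -> V1" task can be sketched as a pure function that derives the old shape from the new one. The schemas here are hypothetical: V1 exposes a single `customer_name`, while V2 splits it into `first_name` and `last_name` and adds a `country` field that V1 consumers don't know about. The `customers/v1` and `customers/v2` keys stand in for an assumed side-by-side storage layout.

```python
from typing import TypedDict


class CustomerV1(TypedDict):
    customer_id: int
    customer_name: str


class CustomerV2(TypedDict):
    customer_id: int
    first_name: str
    last_name: str
    country: str  # new field, not exposed to V1 consumers


def convert_v2_to_v1(record: CustomerV2) -> CustomerV1:
    """'Conversion V2 -> V1': rebuild the old shape from the new one."""
    full_name = f"{record['first_name']} {record['last_name']}".strip()
    return {"customer_id": record["customer_id"], "customer_name": full_name}


def publish_versions(records_v2: list[CustomerV2]) -> dict[str, list]:
    """Publish both versions side by side (hypothetical v1/ and v2/ locations)."""
    return {
        "customers/v2": list(records_v2),                          # consumers B and C
        "customers/v1": [convert_v2_to_v1(r) for r in records_v2],  # consumer A
    }


outputs = publish_versions(
    [{"customer_id": 1, "first_name": "Ada", "last_name": "Lovelace", "country": "UK"}]
)
```

Deriving V1 from V2 keeps a single source of truth, and the conversion task can be retired once consumer A has migrated.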

As we mature, I hope we will see better adoption of data versioning across our different data lakes.

Data Quality

Too often, consumers of the data find data quality issues, when quality should be the producer's responsibility.

We've seen isolated initiatives to validate data quality at the producer, and only recently did we take the initiative to standardise the tooling.

The Great Expectations framework seems to be emerging as a standard, and we are packaging the tooling to simplify its adoption in our ecosystem.
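The principle behind producer-side validation can be shown without committing to any particular Great Expectations version. The sketch below is plain Python and is not the Great Expectations API: `not_null`, `between` and `validate_before_publish` are hypothetical helpers that declare expectations about the data and block publication when any of them fails.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class CheckResult:
    name: str
    success: bool
    failing_rows: int


Rows = list[dict[str, Any]]
Check = Callable[[Rows], CheckResult]


def not_null(column: str) -> Check:
    """Expect every row to have a value for `column`."""
    def check(rows: Rows) -> CheckResult:
        failures = sum(1 for r in rows if r.get(column) is None)
        return CheckResult(f"{column} not null", failures == 0, failures)
    return check


def between(column: str, low: float, high: float) -> Check:
    """Expect non-null values of `column` to fall within [low, high]."""
    def check(rows: Rows) -> CheckResult:
        failures = sum(
            1 for r in rows
            if r.get(column) is not None and not (low <= r[column] <= high)
        )
        return CheckResult(f"{column} between {low} and {high}", failures == 0, failures)
    return check


def validate_before_publish(rows: Rows, checks: list[Check]) -> list[CheckResult]:
    """Run every check; the producer publishes only if all of them succeed."""
    results = [check(rows) for check in checks]
    failed = [r.name for r in results if not r.success]
    if failed:
        raise ValueError(f"Data quality checks failed: {failed}")
    return results


sales_checks = [not_null("plant_id"), between("amount", 0, 1_000_000)]
```

Failing at the producer means a bad batch never reaches consumers, which is exactly where the responsibility should sit.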

Avoid custom architecture

If you followed the first part of this article, you'll remember that we created a platform with a set of standard tools aimed at addressing most data and analytics use cases.

What do we do when the standard tools we have put on the shelf don't quite address a particular use case? You really have two options:

• Introduce an exception in your architecture

• Simply say you cannot do it

As solution architects, our role is to find solutions to problems, and we would rather introduce exceptions to the architecture than simply say no. There have been a few times when we took the first option and regretted it.

Have the courage to say no when a particular use case doesn't fit your platform. When you see more of this type of use case and it is no longer an exception, you can then incrementally adapt your platform to support it.

Deal with disaster risk early

Your data is stored in one of the cloud storage solutions, with a guarantee of 99.999% resilience provided by replicating the data 3 times within one geographical zone and then replicating it to a separate geographical zone, where it is again replicated 3 times.

That seems like enough, right? No! Delete the precious consolidated sales data by mistake, and it will automatically be deleted 3 times within one geographical zone before the deletion propagates to the other geographical zone, where the data will again be deleted 3 times.

Being in the cloud doesn't protect you from human error or cloud provider failure. You need to protect yourself from data loss with backups or with the ability to reconstruct data from its sources. You also need to be able to automatically rebuild your infrastructure from code.
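The difference between replication and backup is that a backup is a separate copy that an accidental delete does not propagate to. A minimal sketch, assuming local directories stand in for a data lake container and a separate backup location (the paths and the `snapshot`/`restore_latest` helpers are hypothetical):

```python
import shutil
from datetime import datetime, timezone
from pathlib import Path


def snapshot(dataset_dir: Path, backup_root: Path) -> Path:
    """Copy a dataset into a timestamped folder in a separate backup location."""
    # Fixed-width timestamp, so lexical order matches chronological order
    stamp = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%S%fZ")
    target = backup_root / dataset_dir.name / stamp
    shutil.copytree(dataset_dir, target)
    return target


def restore_latest(dataset_dir: Path, backup_root: Path) -> Path:
    """Restore the most recent snapshot, e.g. after an accidental deletion."""
    snapshots = sorted((backup_root / dataset_dir.name).iterdir())
    if not snapshots:
        raise FileNotFoundError(f"No snapshot found for {dataset_dir.name}")
    latest = snapshots[-1]
    if dataset_dir.exists():
        shutil.rmtree(dataset_dir)  # replace whatever partial state remains
    shutil.copytree(latest, dataset_dir)
    return latest
```

Replication faithfully copies the deletion; the snapshot survives it because nothing propagates the delete to the backup location.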

Quotas and scalability

Let's face it: the cloud platforms were not designed for building platforms on top of them. At least, not when we started with Azure and our current architecture.

The cloud provider creates all sorts of quotas and safeguards to prevent its customers from making mistakes. A good example is the number of CPUs available in a given subscription, which is limited to 250 by default, a pretty sensible safeguard. However, when you build a platform that might host hundreds of different analytics use cases, the default CPU quota no longer makes sense for you.

We realised that some quotas can simply be increased, but others are hard limits that cannot be changed. Find out which resources in your cloud provider have quotas that might affect you, monitor your usage against them, and be prepared to change your architecture to mitigate them.
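Monitoring can start as simply as comparing usage to limits and distinguishing adjustable quotas from hard limits. In this sketch the usage figures and the hard-limit resource are hypothetical (only the default of 250 CPUs comes from the text above), and the 80% threshold is an arbitrary assumption; in practice the numbers would be collected from your cloud provider's quota reporting.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Quota:
    resource: str
    used: int
    limit: int
    adjustable: bool  # False for hard limits that cannot be raised


def quota_alerts(quotas: list[Quota], threshold: float = 0.8) -> list[str]:
    """Return an alert for every quota whose usage is at or above `threshold`."""
    alerts = []
    for q in quotas:
        ratio = q.used / q.limit if q.limit else 1.0
        if ratio >= threshold:
            # Adjustable quotas can be raised; hard limits need a design change
            action = "request an increase" if q.adjustable else "plan an architecture change"
            alerts.append(f"{q.resource}: {q.used}/{q.limit} ({ratio:.0%}), {action}")
    return alerts


quotas = [
    Quota("vCPUs", 230, 250, adjustable=True),
    Quota("storage accounts", 50, 250, adjustable=True),
    Quota("hypothetical hard limit", 95, 100, adjustable=False),
]
alerts = quota_alerts(quotas)
```

Separating the two kinds of quota matters because only one of them can be solved with a support request.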

Data Mesh

The architecture we put in place, with a central platform and decentralised teams, follows some of the practices advocated by the Data Mesh approach.

The concept didn't exist when we started building our platform, and when we started hearing about it, we adopted some of its key practices. There are still some technical and organisational aspects we need to address.

Data Mesh comes with a definition of a data product that covers several aspects: Discoverable, Addressable, Documented, Reliable, Interoperable and Secure. These aspects are specific to data, and we shouldn't forget the general characteristics of a product, such as a community of users, a long-lived team, a lifecycle... As we grasp these concepts, we also need to define how to adapt them to our data ecosystem.
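One way to make these aspects actionable is a descriptor that every data product fills in, which can then be checked automatically. The `DataProductDescriptor` fields below are a hypothetical mapping of the aspects listed above plus the general product traits; they are not a Data Mesh standard.

```python
from dataclasses import dataclass, field


@dataclass
class DataProductDescriptor:
    """Hypothetical descriptor mapping Data Mesh aspects and product traits to fields."""
    name: str
    domain: str
    owner_team: str          # long-lived team accountable for the product
    address: str             # addressable: stable location of the data
    documentation_url: str   # documented
    catalogued: bool         # discoverable: registered in the data catalogue
    schema_version: str      # interoperable: published, versioned schema
    quality_checks: list[str] = field(default_factory=list)  # reliable
    access_policy: str = ""  # secure
    lifecycle_stage: str = "experimental"  # e.g. experimental, stable, deprecated
    consumers: list[str] = field(default_factory=list)       # community of usage


def missing_aspects(p: DataProductDescriptor) -> list[str]:
    """List the aspects a product does not yet satisfy, in the order given above."""
    gaps = []
    if not p.catalogued:
        gaps.append("discoverable")
    if not p.address:
        gaps.append("addressable")
    if not p.documentation_url:
        gaps.append("documented")
    if not p.quality_checks:
        gaps.append("reliable")
    if not p.schema_version:
        gaps.append("interoperable")
    if not p.access_policy:
        gaps.append("secure")
    return gaps


sales_product = DataProductDescriptor(
    name="consolidated_sales",
    domain="sales",
    owner_team="sales-data-team",
    address="lake/sales/consolidated_sales/v2",  # hypothetical path
    documentation_url="",
    catalogued=True,
    schema_version="2.0",
    quality_checks=["plant_id not null"],
)
```

A gap report like this gives domain teams a concrete checklist rather than an abstract definition.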

On the organisational side, as we try to group data products by domain and create clear boundaries, we also need to align with the different business streams around those domains. This should improve exchanges between those streams, reduce friction and generate value.

Semantic Layer

There is a lot of discussion about the need for a semantic layer, and we are seeing tools and practices emerge.

When building business metrics from our data, we realised that they are defined in different layers of the architecture, sometimes even in the reporting layer. We also realised that the same metrics are often computed multiple times with different definitions. This confuses our users, and we see data engineers wasting time figuring out which data they need to use.

We are not the only ones facing these issues: to solve a similar problem, Airbnb built Minerva, which is discussed in great detail in these posts: post1, post2 and post3. In essence, the aim is to centralise metric definitions and the relationships between datasets in a single location.
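The core idea of a semantic layer, defining each metric once and generating the query from that definition, can be sketched in a few lines. This is a hypothetical registry, not Minerva's design or any listed tool's API; the `sales` table, its columns and the `status = 'invoiced'` rule are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    name: str
    expression: str  # SQL aggregate expression
    source: str      # table or view the metric is computed from
    where: str = ""  # optional filter shared by every consumer of the metric


# Central registry: each metric is defined exactly once
METRICS = {
    "net_sales": Metric("net_sales", "SUM(amount)", "sales", "status = 'invoiced'"),
    "order_count": Metric("order_count", "COUNT(DISTINCT order_id)", "sales"),
}


def compile_metric(name: str, dimensions: list[str]) -> str:
    """Render the SQL for a registered metric, grouped by the requested dimensions."""
    m = METRICS[name]
    select = ", ".join([*dimensions, f"{m.expression} AS {m.name}"])
    sql = f"SELECT {select} FROM {m.source}"
    if m.where:
        sql += f" WHERE {m.where}"
    if dimensions:
        sql += " GROUP BY " + ", ".join(dimensions)
    return sql
```

Every report asking for `net_sales` by plant or by month gets the same filter and aggregation, so two teams can no longer compute it differently.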

The semantic layer topic is moving extremely fast, and this article gives a good summary of its current state.

Some of the tools we see today are Cube, LookML, MetricFlow, Metriql, AtScale and Dremio / dbt.

It's not easy to navigate these technologies and their pros and cons. Today we are taking the Dremio / dbt approach, so let's meet again in a few months to discuss where we are 😉.

Conclusion

Our journey started 5 years ago, and looking back, I am amazed by the astonishing technical progress our industry has made in such a short time. Back then, we couldn't incrementally update a file in a data lake or bridge the gap with real-time data. We still have a long journey ahead, but along with AI, data is probably one of the fastest-moving fields in IT. There has never been a better time to become a data engineer or a data scientist, so come and join us.