CI/CD-as-a-Service

What a pity to copy/paste ".gitlab-ci.yml" across repositories. We all started like that and it doesn't matter! But now we should switch our mindset to simplify & re-use our jobs. This article is a feedback of what we took away from a new way of developing our GitLab CI/CD.

What a pity to copy/paste multiple lines of .gitlab-ci.yml jobs across multiple repositories. We all started like that and it doesn't matter!

But now we should do like we did when we learnt programming: simplify & re-use.

This article is not a documentation or a tutorial, it’s a feedback of a journey we did and what we take away from this new way of managing our GitLab CI/CD on multiple projects.

Introduction

GitLab CI/CD is one of multiple ways to do CI/CD. It’s composed by pipelines with sequential or parallels jobs (with execution conditions).

Commonly described in .gitlab.yml files. The simple and widely used structure is composed by two parts:

- settings (stages, variables…)

- jobs

In this article we will take a deeper look into the main part: jobs.

We will see how to improve efficiency when scaling-up the number of projects/repositories.

A simple job looks like:

build-job:

stage: build

before_script:

- echo "I do all the pre-requist stuff before really doing the task"

script:

- echo "I do my job here"

But when you are using multiple runners, docker mode, environments…

The job description starts to growth and give this kind of thing:

deploy-staging-job:

stage: deploy

image:

name: docker-registry.acme.corp/deploy-toolbox:1.2.0

entrypoint: [""]

needs: image-builder-job

before_script:

- echo "Pre-requist stuff before really doing the task"

script:

- echo "I do my deploying job here"

interruptible: no

tags:

- my-tag

environment:

name: staging

url: https://staging.my-application.acme.corp

only:

refs:

- stage

I spare you the multiple script lines to really do something inside the job…

And this is only one job... a complete CI/CD is often composed of dozen!

As you understood and probably already experienced, it quickly gives an unreadable and not very flexible ~500 lines of YAML file.

Start small

Some little features to promote code reuse, even if you are only using CI/CD on a small scope (one project for example):

-

Hidden/disabled job [doc]

Simply put a dot

.in front of your job name to disable it. It's useful for the two next points. -

YAML anchors and aliases [doc]

Reuse part of your YAML code.

-

Job extends [doc]

Extends a job from another. It's also possible to extend from a disabled job. It can be considered as inheritance.

(GitLab specific)

With this kind of tips, you can have a ~100 lines YAML file. Most of jobs are factorized, it’s always difficult to read and to manage your execution workflow (this word will be important!). Nevertheless there is less... no duplicated code, that’s great!

Thus now, how to improve all other similar projects/repositories with our brand-new factorized CI file?

Think Big

When scaling-up the number of project to manage, instead of copying the same code inside all .gitlab-ci.yml of each project, and in reality, it's not only a full copy but also adding small modification for each specificities of the project... The idea is to start consuming “common” jobs!

So, we will change our mindset to only think about the real need, what we want to run and when we want to run.

• The foundations

We will centralize jobs and only consume them into the different projects.

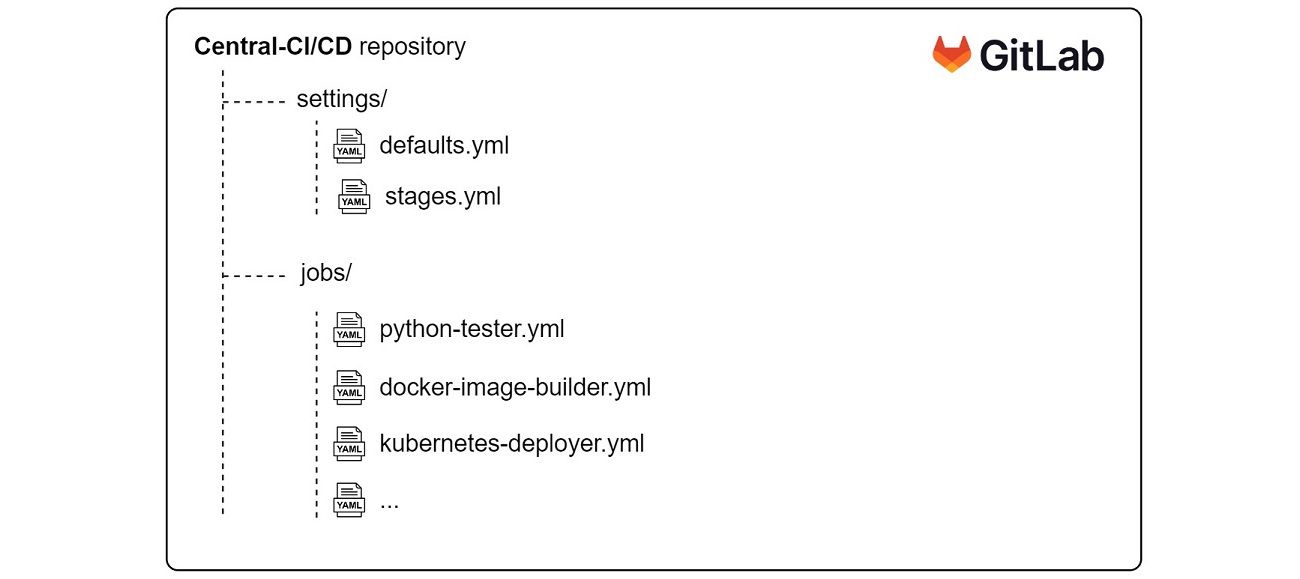

In our common-CI/CD repository we will have a bunch of .yml files with jobs inside.

In our applications repositories, we will have a simple .gitlab-ci.yml which consume the central jobs.

We will see this later. We had to do some improvement on our jobs before consuming them.

• Mindset

When using the same job on multiple projects, a key element is that all projects are constructed quite the same way (same folder architecture for this, same name for that...). Let's be honest and consider that it will never be totally the case, so... variabilize it!

In this step, this is the most difficult but most valuable thing we will bring to the job is genericity.

Identify what the job should do, and what should be configurable. Also based on the experience you had with all projects, define common standards or good practices, and put it as default value for your variables. The goal is for a standard project to have less variable as possible to configure.

Caution: be careful when you choose variables names, it will be very costly (in time) to change it once many project will be consuming your job.

Some concept to keep in mind during this phase (links will give you the full principle for each):

- SOLID: a single responsibility per job, stay open for extension...

- KISS: keep it simple

- DRY: avoid redundancy

• Guide-lines

- Additional arguments

You cannot think to all case, so allow the consumer of your job to add additional arguments to your command lines.

[...]

variables:

PYTHON_TEST_DIR: "tests/"

script:

- pytest $PYTHON_TEST_DIR

Becomes:

[...]

variables:

PYTHON_TEST_DIR: "tests/"

PYTHON_TEST_EXTRA_ARGS: ""

script:

- pytest $PYTHON_TEST_EXTRA_ARGS $PYTHON_TEST_DIR

- Verify inputs

In before_script:, always verify and log (if not sensitive of course) all your variables. It will be helpful for later troubleshooting.

Example :

[...]

before_script:

# verify mandatory variables (break if not present)

- test ! -z "$MANDATORY_VAR" && echo "$MANDATORY_VAR" || exit $?

# log facultative variables

- echo "$OPTIONAL_VAR"

Note: you can go further by also checking if the value respect some conditions, for example, a variable that define a non-privileged network port should have a value be between 1024-65536.

- Variable execution

You do not always know when your generic job will need to be triggered. You can use variables to define your job execution, it will be key to simplify the orchestration.

[...]

rules:

- if: '$JOB_EXEC_K8S_DEPLOY_APP == "auto"'

when: on_success

- if: '$JOB_EXEC_K8S_DEPLOY_APP == "manual"'

when: manual

- if: '$JOB_EXEC_K8S_DEPLOY_APP == "skip"'

when: never

# default

- when: never

With this definition, the consumer of this job will be able to pilot the execution of the job with a variable. Combined to the workflow: keyword it becomes easy to compose your pipeline and orchestrate jobs as your convenience.

We will get into job orchestration in more depth later.

• Example

Here is a simplified example of a generic job kubernetes_apply.yml:

variables:

# Default values

K8S_MANIFEST_PATH: 'manifest/kubernetes'

K8S_APPLICATION_NAME: ${CI_PROJECT_NAME}

kubernetes-apply-job:

stage: deploy

image: docker-registry.acme.corp/deploy-toolbox:1.1.3

before_script:

# verify mandatory variables

- test ! -z "$K8S_KUBECTL_CONFIG" && echo "$K8S_KUBECTL_CONFIG" || exit $?

# display facultative variables

- echo "$K8S_MANIFEST_PATH"

- echo "$K8S_APPLICATION_NAME"

- echo "$JOB_EXEC_K8S_DEPLOY_APP"

# display version of tools to facilitate troubleshooting

- kubectl version --client=true

script:

# deploy application to kubernetes using manifests

- kustomize build "$K8S_MANIFEST_PATH" | envsubst

| kubectl apply -f -

tags:

- my-runner-tag

environment:

name: $ENV_NAME

url: $ENV_URL

rules:

- if: '$JOB_EXEC_K8S_APPLY == "auto"'

when: on_success

- if: '$JOB_EXEC_K8S_APPLY == "manual"'

when: manual

- if: '$JOB_EXEC_K8S_APPLY == "skip"'

when: never

# default

- when: never

As you can see in the code, this generic job takes 4 variables:

- 1 mandatory

- K8S_KUBECTL_CONFIG

- 3 optional (because of default values)

- K8S_MANIFEST_PATH

- K8S_APPLICATION_NAME

- JOB_EXEC_K8S_APPLY

Thus, for a standard project, only K8S_KUBECTL_CONFIG (used to define Kubernetes credentials) will be needed. This job can be consumed quite easily and is enough generic to be usable for existing project with other folder structure for example.

Note: it’s a very simplified example to give an idea. A real generic job to apply on Kubernetes will take more variables and verify inputs deeper.

Scale fast

Our generic jobs are there, now let’s consume this CI/CD-as-a-Service in our projects!

We will only use two GitLab-CI/CD keywords:

With the JOB_EXEC_* variable, we can choose easily when deployment occurs (on which branch for example) and if it's automatically launched after success tests, or if it's a manual action.

Our .gitlab-ci.yml for projects look like this:

include:

- project: /acme/tools/common-ci-cd

file:

- settings/global.yml

- jobs/python-workflow.yml

- jobs/k8s-workflow.yml

variables:

# by default we will execute lint/test/deploy automatically

JOB_EXEC_PYTHON_LINTER: auto

JOB_EXEC_PYTHON_TEST: auto

JOB_EXEC_K8S_APPLY: auto

workflow:

rules:

# each commit on develop branch will deploy to staging environment

- if: $CI_COMMIT_BRANCH == "develop"

variables:

ENV_NAME: staging

# each commit on main branch will deploy to production environment

- if: $CI_COMMIT_BRANCH == "main"

variables:

ENV_NAME: production

# override the default value to trigger the deploy job with a manual action

JOB_EXEC_PYTHON_LINTER: skip

JOB_EXEC_K8S_DEPLOY_APP: manual

This time it's not a simplified version however it remains quite easy to read! Our .gitlab-ci.yml is now composed by three very simple parts:

- inclusion of centralized settings and jobs

- definition of variables (could also been done directly in GitLab project settings)

- scheduling using

JOB_EXEC_*variables

Great things take time

The first value we searched with this project was for our R&D entity. We wanted to simplify and standardize the management of all GitLab CI/CD in spared projects (around 40). It's a great success for existing and new projects because most of them were constructed in a quite similar way and now we have <50 lines of YAML to describe the full integration & deployment process. For new project it's included in templates so anyone is able to consume the centralized CI/CD.

We have an easy readability (even for non CI/CD specialists), an handy control of the execution for each project with a common base of 15 generic jobs. It's now very easy to start new project with an understandable CI/CD and to keep it up-to-date on existing projects. We also have a dashboard with the all the statistics of our centralized jobs usage.

On another side, exactly when you are maintaining a service, framework or functions, you need to test and take care of the feature you want to deliver. What is the purpose of each job, what are the input and the expected output. Changing it without consideration will cause many disruption in your delivering pipelines.

When CI/CD is centralized, it should be considered as a development project with all the constraints. Yes it can be very convenient, but it can also become a pain to use if it's too complex, not enough generic or not stable after updates.

Now we are in the step of opening this for innersource inside Michelin. There is a lot of new use-cases to manage but it will nuture reuse and tool standardization.

• Opening

It was just a quick overview of this initiative.

Many topics have not been detailed and could worth their own part or even a blog post:

- documentation

- for consumers

- for contributors

- concurrent versions delivery and maintainance

- manage the transition between new releases and deprecated versions when big/breaking changes occurs

- automatic testing at different levels to avoid impact on project that consumes the centralized CI/CD

- linter to track syntax issues

- testing projects to track bugs and continuity of service

- metrics & traces

- using an API and an anchor in the

after_script

- using an API and an anchor in the

• More resources please

Some good links to go further:

- GitLab Documentation - guidelines for CI/CD

Good tips & tricks to improve your CI/CD development.

- GitLab Documentation - Keyword reference for .gitlab-ci.yml

The exhaustive list of keyword and their parameters to catch 'em all.

- GitLab Documentation - "rules" keyword

A very powerful keyword to improve your job triggering.

- Repository - Job templates

C++, Flutter, Go, Maven, PHP, Python, Terraform a lot of example jobs to inspire yours!

- Blog post - Let's make faster GitLab CI/CD pipelines

Going from 14 to less than 3 minutes pipelines in no more than 7 iterations.

- Project - to be continuous

An open-source project with a larger ambitions but same philosophy.

If DevOps at scale is interesting you, feel free to contact us!