Open Sourcing Qlik Enterprise Manager Ansible Module

The Michelin Engineering team is pleased to announce that the Qlik Enterprise Manager Ansible Module is now open source. Discover how our teams use this Ansible module to improve the speed and quality of Qlik resource deployments.

Introduction

On this blog, we have often mentioned Qlik Replicate as a lever for Michelin's modernization initiatives. The following lines explain how we automated the management of Qlik resources to improve operational quality, speed up delivery and scale deployments.

What is Qlik Enterprise Manager?

Michelin uses two main components from the Qlik data replication suite:

- Qlik Replicate: A Change Data Capture solution that copies data in near real time from a source (DB, file, etc.) to a target (DB, file, messaging systems, cloud, etc.). Read "Legacy integration, a story of modernisation" for more information.

- Qlik Compose: An Extract-Transform-Load solution targeting Data Warehouses.

In a large organization, you will likely need more than one instance of Qlik Replicate or Qlik Compose. To pilot a set of Replicate/Compose servers, Qlik offers Qlik Enterprise Manager.

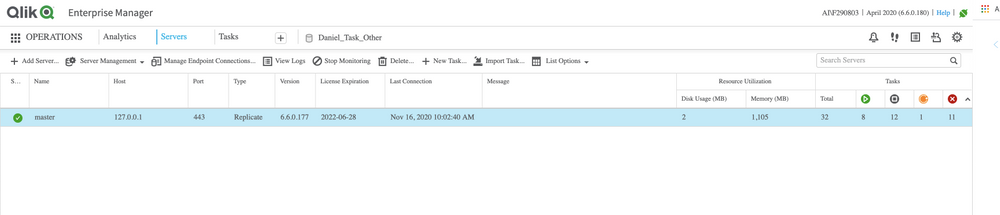

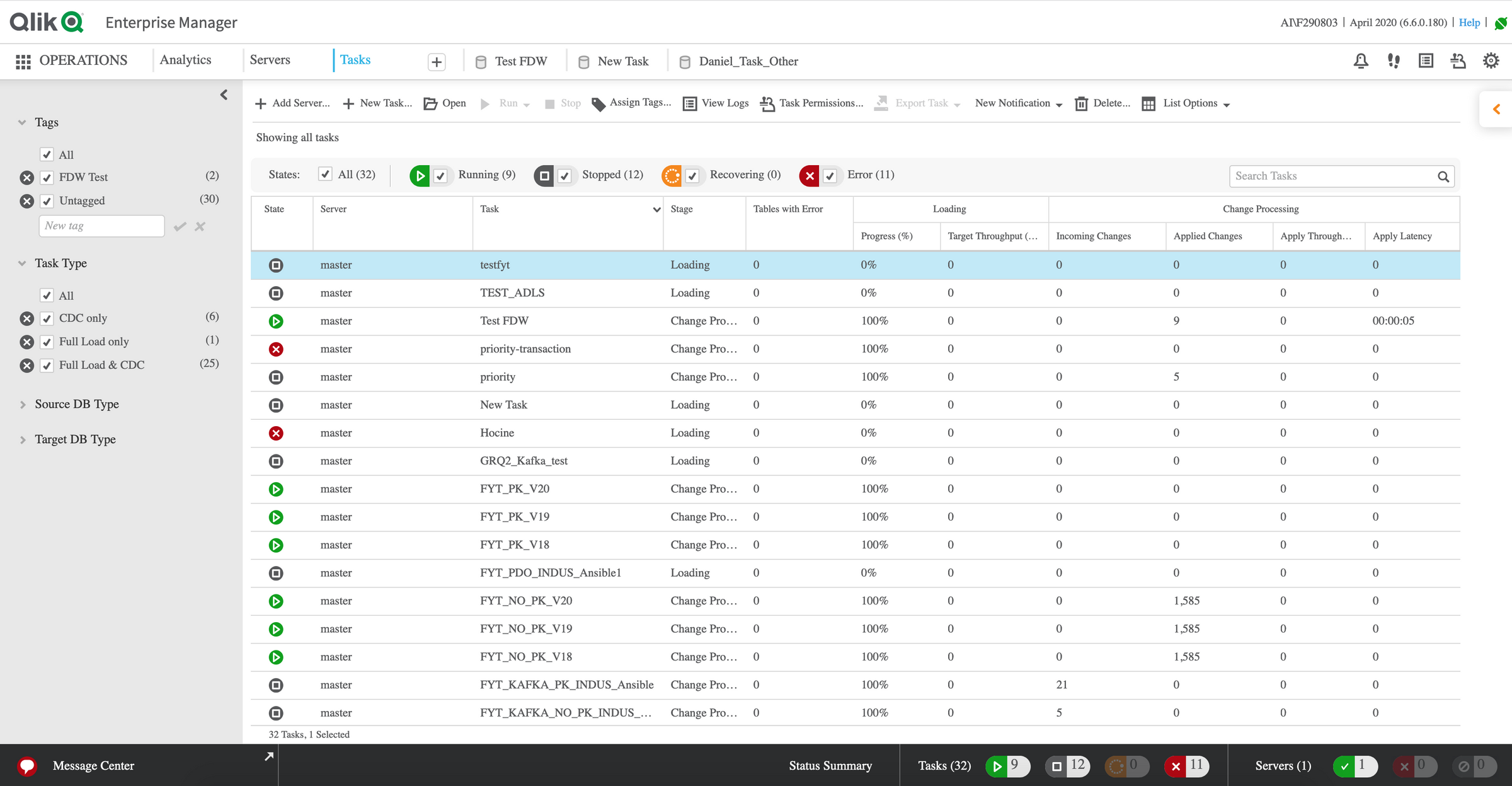

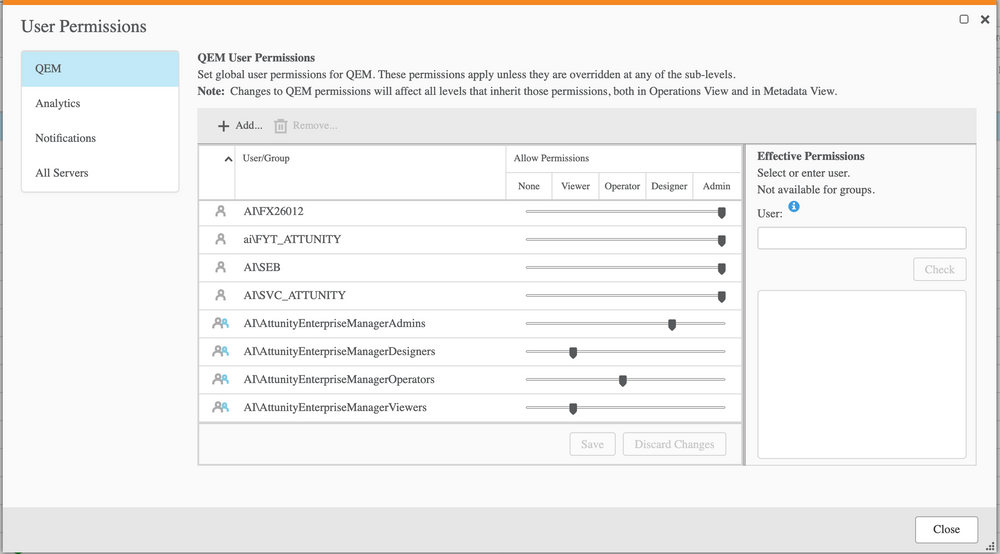

Qlik Enterprise Manager (QEM) is a "Command Center" web application used to manage the following resources at scale:

- Server

- Task

- Access Control List (ACL)

On top of consolidating everything, QEM exposes a REST API, and this is where our automation journey starts.

How to automate Qlik Enterprise Manager resource management?

The Qlik Enterprise Manager API provides programmatic interfaces to perform tasks typically executed via the Qlik Enterprise Manager web console, such as:

- Login/Logout

- Register a Replicate/Compose server

- Import a task via a JSON definition

- Start/Stop a task

Let's take an example:

    # Retrieve a QEM authentication token
    curl --request GET \
      --header 'Authorization: Basic B64(DOMAIN@USER:PASSWORD)' \
      https://QEM_SERVER/attunityenterprisemanager/api/v1/login

    # Import a task
    curl --request POST \
      --header 'EnterpriseManager.APISessionID: MY_QEM_TOKEN' \
      --header 'Content-Type: application/json' \
      'https://QEM_SERVER/attunityenterprisemanager/api/v1/servers/my_replicate_server/tasks/my_task?action=import' \
      --data '{ MY_TASK_DEFINITION_IN_JSON }'

Thanks to this API, we could build shell scripts to automate Task, ACL and Server deployments. Looks nice, right? Wait, there is more!
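The pieces used in the two calls above can also be prepared from Python. The sketch below only builds the Basic credential and the import URL with the standard library and sends nothing over the network. The helper names `basic_auth_header` and `import_task_url` are hypothetical, and the credential format simply mirrors the `DOMAIN@USER:PASSWORD` placeholder used in the curl example.

```python
import base64
from urllib.parse import quote

API_ROOT = "attunityenterprisemanager/api/v1"  # path taken from the curl examples


def basic_auth_header(login: str, password: str) -> dict:
    """Return the Authorization header for the login call (hypothetical helper)."""
    token = base64.b64encode(f"{login}:{password}".encode("utf-8")).decode("ascii")
    return {"Authorization": f"Basic {token}"}


def import_task_url(qem_server: str, replicate_server: str, task: str) -> str:
    """Build the task import URL; names are percent-encoded so spaces are safe."""
    return (
        f"https://{qem_server}/{API_ROOT}/servers/"
        f"{quote(replicate_server, safe='')}/tasks/{quote(task, safe='')}?action=import"
    )


if __name__ == "__main__":
    print(basic_auth_header("DOMAIN@USER", "PASSWORD"))
    print(import_task_url("QEM_SERVER", "my_replicate_server", "my_task"))
```

Percent-encoding the server and task names matters as soon as they contain spaces, as "My Sample Task" does.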

If you are not comfortable with raw HTTP calls, you can use the .NET or Python client that Qlik bundles with the QEM installation.

Thanks to these clients, you can write scripts like:

    from aem_client import *

    # Connect to QEM with the Base64-encoded credentials
    aem_client = AemClient(
        b64_username_password,
        qem_server,
        verify_certificate=True
    )

    # Import a task from its JSON definition
    aem_client.import_task(
        payload="{ MY_TASK_DEFINITION_IN_JSON }",
        server=my_replicate_server,
        task=my_task
    )

At Michelin, we decided to go one step further by packaging common actions into an open source Qlik Enterprise Manager Ansible Module.

Qlik Enterprise Manager Ansible Module

Michelin IT is really proud to announce we have open sourced the Qlik Enterprise Manager Ansible Module to manage Qlik Replicate/Compose resources via Ansible.

Ansible is a well-known open-source automation platform. A pillar of Michelin's "Infrastructure as Code" strategy, it enables actions such as machine provisioning, middleware deployment and configuration. Written in Python, Ansible can be extended via modules to bring new capabilities.

Michelin IT teams used the QEM Python client described above to build custom actions. This allows teams to manage the common QEM resources (Server, Task, ACL, etc.) with the usual Ansible semantics.
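To make the idea of Ansible semantics concrete: a playbook declares the desired state of a resource, and the module works out which action, if any, is needed. The sketch below illustrates that idea only. The function `plan_action` and its return values are hypothetical and are not taken from the module's source; in particular, skipping the import when the definition is unchanged is an assumption.

```python
from typing import Optional


def plan_action(state: str, current: Optional[str], desired: Optional[str] = None) -> str:
    """Decide what to do for one task.

    state   -- "present" or "absent", as declared in the playbook
    current -- task definition currently on the server, or None if the task is missing
    desired -- task definition to deploy (required when state is "present")
    """
    if state == "absent":
        return "delete" if current is not None else "nothing"
    if state == "present":
        if desired is None:
            raise ValueError("a definition is required when state is 'present'")
        # Assumption: re-import only when the deployed definition differs.
        return "nothing" if current == desired else "import"
    raise ValueError(f"unsupported state: {state!r}")


if __name__ == "__main__":
    print(plan_action("absent", current=None))                 # task already gone
    print(plan_action("present", current=None, desired="{}"))  # task must be created
```

Under these assumptions, running the same declaration twice changes nothing the second time, which is what makes such deployments repeatable.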

The following Ansible playbook showcases how you can manage a Qlik Replicate task:

---

- hosts: localhost[0]

connection: local

gather_facts: False

roles:

- role: qlik.enterprise-manager # load the Ansible module

tasks:

# Removing a task

- name: Delete sample task

qem_task:

name: "My Sample Task"

server: "My Sample Server"

state: absent

force_task_stop: yes

# Importing a task based on JSON task definition

- name: Import sample task

qem_task:

name: "My Sample Task"

server: "My Sample Server"

state: present

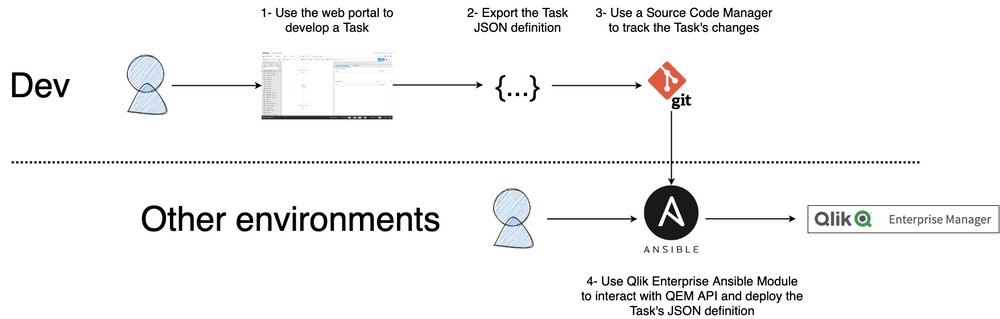

definition: "{ MY_TASK_DEFINITION_IN_JSON }"Cool, right ? However, an automation tool alone is not enough, it's a lever of an organisational objective. At Michelin (like many other companies), we consider that a human having a direct production access is an anti-pattern (manual actions are not traced hence not repeatable) . In order to enforce automation, we proposed the following workflow for Task development:

In this workflow, the QEM Web Console acts as a development (i.e. risk-free) environment. Once developers are done with a task definition, they export it and version it like any other source code. This step makes it possible to track the task's changes, easily compare versions and even roll back.
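Once exported definitions live in source control, comparing two versions is an ordinary text diff. As a minimal sketch using only the Python standard library, the snippet below normalises two JSON documents (sorted keys, fixed indentation) before diffing them, so that key order alone does not show up as a change. The sample keys `name` and `tables` are invented for illustration and do not reflect the real export format.

```python
import difflib
import json


def normalise(definition: str) -> list:
    """Parse a JSON document and return it as stable, sorted, indented lines."""
    return json.dumps(json.loads(definition), indent=2, sort_keys=True).splitlines()


def diff_definitions(old: str, new: str) -> list:
    """Return a unified diff between two JSON task definitions."""
    return list(
        difflib.unified_diff(normalise(old), normalise(new), "old", "new", lineterm="")
    )


if __name__ == "__main__":
    v1 = '{"tables": ["orders"], "name": "my_task"}'
    v2 = '{"name": "my_task", "tables": ["orders", "customers"]}'
    for line in diff_definitions(v1, v2):
        print(line)
```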

On the deployment side, the Qlik Enterprise Manager Ansible Module is fed by the source code manager (Git, SVN, etc.). This setup makes it possible to build a promotion pipeline. At each stage (QA, Pre-Prod, etc.), the same task definition is deployed to a different target environment where tests (performance, integrity, etc.) can be run. If the tests pass, the task is promoted, i.e. deployed to the next stage, until it reaches production; otherwise it is sent back to development.
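The promotion logic described above can be sketched in a few lines. This is an illustration only: the stage names, the `deploy` and `run_tests` callables and the `promote` function are hypothetical stand-ins for whatever the pipeline actually invokes at each stage (for example, a playbook run per environment).

```python
from typing import Callable, Sequence


def promote(
    definition: str,
    stages: Sequence[str],
    deploy: Callable[[str, str], None],
    run_tests: Callable[[str], bool],
) -> str:
    """Deploy the same definition stage by stage.

    Returns the last stage reached, or "development" if a stage's tests fail.
    """
    reached = "development"
    for stage in stages:
        deploy(stage, definition)   # same definition, different target environment
        if not run_tests(stage):    # e.g. performance or integrity checks
            return "development"    # send the task back to its developer
        reached = stage
    return reached


if __name__ == "__main__":
    deployed = []
    result = promote(
        "{ MY_TASK_DEFINITION_IN_JSON }",
        ["qa", "pre-prod", "production"],
        deploy=lambda stage, definition: deployed.append(stage),
        run_tests=lambda stage: stage != "pre-prod",  # simulate a failing stage
    )
    print(result, deployed)
```

Passing `deploy` and `run_tests` as callables keeps the promotion rule independent of the tooling behind each stage.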

Conclusion

The Qlik Enterprise Manager Ansible Module has improved the autonomy of Michelin's teams while increasing overall deployment quality and speed.

We are very proud to announce that we have open-sourced the Qlik Enterprise Manager Ansible Module. You can find a complete description of the available capabilities in the project's documentation.

We're looking forward to your feedback!

This article was written by Daniel a couple of weeks ago. Since then, he has started a new journey at Confluent where, we are sure, he will help many of its future customers embrace Kafka on the way to an event-driven information system. We take the opportunity of this last article to recognize the tremendous work Daniel has done around Jenkins (when we used it as our Continuous Integration tool), the digital manufacturing initiative with OSIsoft PI and a data lake, and, more recently, the adoption of Kafka at Michelin. Thank you, Daniel, and we wish you the best. You can follow Daniel's adventures here.