Learning EDA & Streaming Through Practice

You hear more and more IT colleagues talk about event-driven architecture (EDA) and stream processing. You understand this is the new paradigm shift in how companies design IT systems, but you might wonder how to grasp the key concepts quickly and, most importantly, how to apply them in your own products and projects.

That was my own question at the beginning of this year, in February 2020, when I was introduced to these terms and to their importance in cloud-native application architecture.

Thanks to the flexible schedule during the COVID-19 lockdown, I read the book “Designing Event-Driven Systems” by Ben Stopford. As Ben works in the Office of the CTO at Confluent, you can imagine the book is based on Kafka. It is a very enlightening book. It convinced me that EDA and streaming are good architectures for many scenarios, and that Kafka is a good product for implementing them.

However, it did not give me much confidence about how easy it is to adopt these technologies and ‘insert’ them into our existing environment. Based on my experience, the best way to master a new technology is to practice: start from a real case and try it yourself, step by step.

Planning

Obviously, the core component is Kafka. For personal learning, a single-broker cluster is good enough, and it is straightforward to install Kafka on a PC, either with an installer or a Docker image.

Then I need a source that generates stream data to feed Kafka. Of course, I could use a simulation script, but it is more interesting to use a sensor that collects signals from the physical world. This also links my tests to the hot topic of IoT.

I want to show the stream data on a real-time dashboard. The most accessible candidate is Power BI.

From my previous experience with Azure services, I know that with an Azure Stream Analytics job and Azure Event Hubs, I can easily store, extract and process stream data, and export it to a Power BI dataset.

To transfer data from the local Kafka to Azure, I prefer to use Kafka's built-in tools, such as MirrorMaker. Fortunately, the Azure Event Hubs Standard tier supports a Kafka endpoint.

So it did not seem too difficult to get all the main components and put them together into an event-driven streaming application that collects signals and shows them in real time, like a sound dB meter.

Architecting

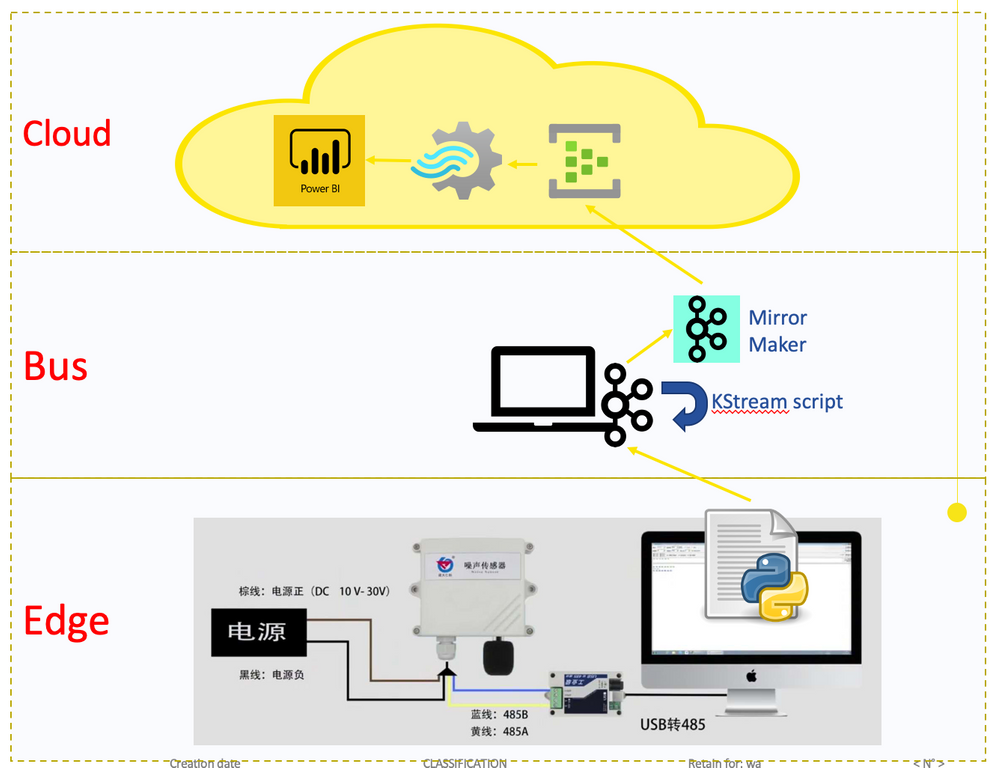

As an architect, the most exciting part is drawing pictures, sorry, architecture diagrams. :-)

I attach the sensor to my Windows PC, which reads the signals from the sensor and sends them to the remote Kafka. This is the edge layer.

Kafka is installed on my MacBook. It receives the data from the edge and forwards it to Azure. I call this the bus layer.

I also want to try a little stream processing within Kafka. Azure and Power BI (SaaS on Azure) form the cloud layer.

With these components, I arrived at the architecture shown in the diagram.

Preparation

After validating the above idea with some quick tests, I started to prepare the devices and cloud resources.

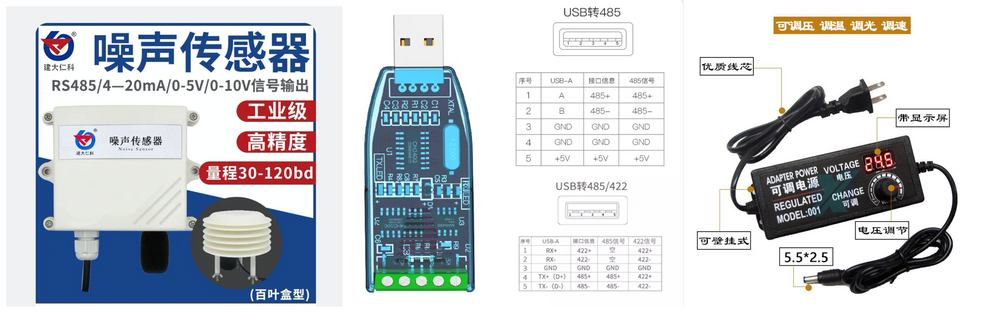

The first step is to buy the sensor and a connector for the PC. There are plenty of choices in the online shops on taobao.com. The pictures and prices are shown in the picture.

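Then, in Azure, I create a dedicated resource group and an Event Hubs namespace to host the services to be created. It is worth mentioning the mapping between Azure Event Hubs and Kafka concepts: an Event Hubs namespace corresponds to a Kafka cluster, and an event hub corresponds to a Kafka topic.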

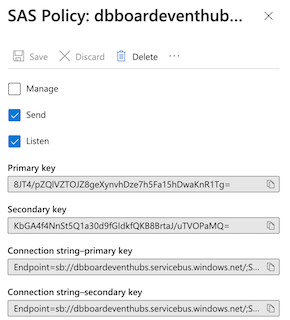

On the Event Hubs namespace, create a shared access policy with Send and Listen rights. Its connection string and keys will be used by Kafka MirrorMaker to send data. In my test I do not need topic-level access control, so a namespace-level policy is enough.

Setup

Now it is time to build the system piece by piece.

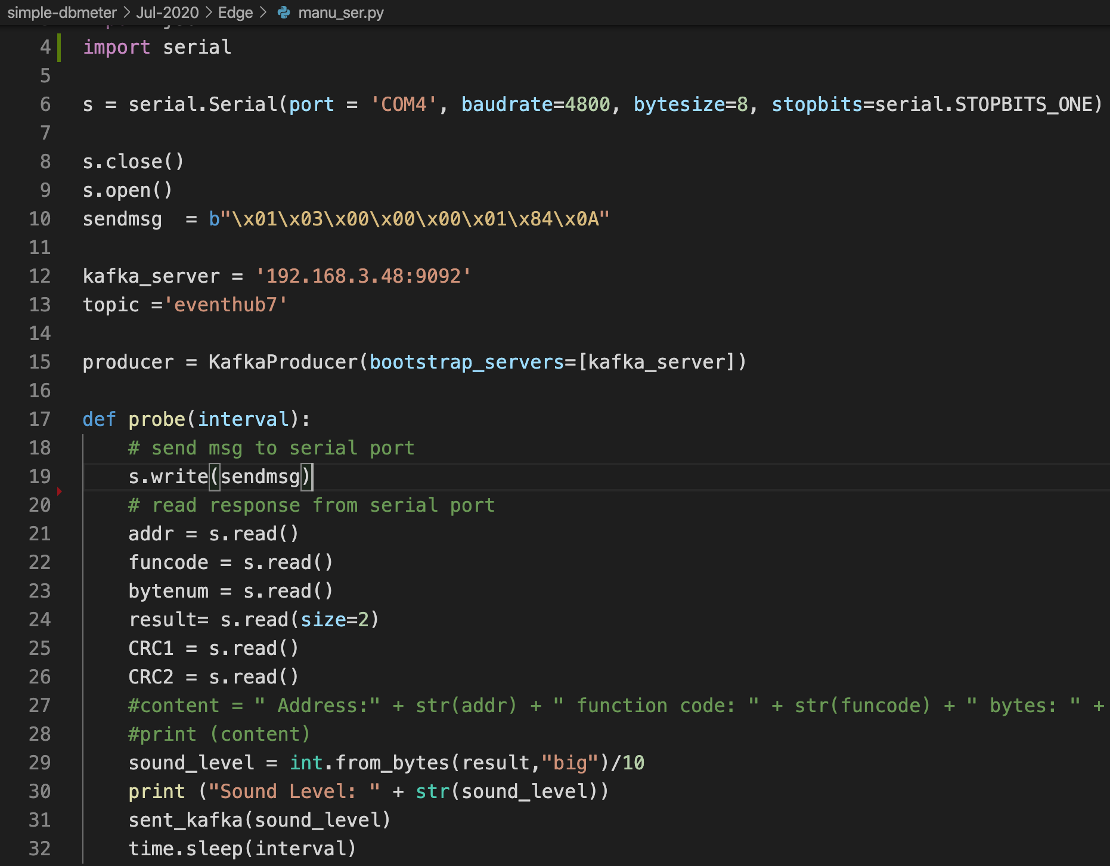

First, I connect the sensor to my PC via an RS485-to-USB converter and confirm the devices work properly with the vendor's self-diagnosis tool. Then, following the user manual, I need to send a specific request to the sensor and read the result at the specified address in the response. I use the following Python script to get it done.

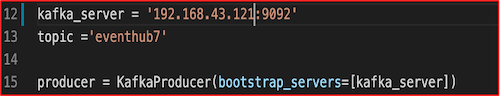
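The vendor's exact request and register address live only in the screenshot and the manual, so the following is a sketch under stated assumptions. It assumes the sensor speaks Modbus RTU over RS485, which is common for such sensors but is not confirmed by the text; the slave ID 1, start register 0 and one-register read in the example are hypothetical placeholders for the values from the manual. It builds a "read holding registers" (function 0x03) request with the standard Modbus CRC-16 and decodes the register values from the reply.

```python
import struct


def crc16_modbus(data: bytes) -> int:
    """CRC-16/MODBUS: initial value 0xFFFF, reflected polynomial 0xA001."""
    crc = 0xFFFF
    for byte in data:
        crc ^= byte
        for _ in range(8):
            if crc & 0x0001:
                crc = (crc >> 1) ^ 0xA001
            else:
                crc >>= 1
    return crc


def build_read_request(slave_id: int, start_register: int, count: int) -> bytes:
    """Build a Modbus RTU 'read holding registers' (0x03) request frame."""
    body = struct.pack(">BBHH", slave_id, 0x03, start_register, count)
    # Modbus RTU transmits the CRC low byte first.
    return body + struct.pack("<H", crc16_modbus(body))


def parse_read_response(frame: bytes) -> list:
    """Verify the CRC of a 0x03 response and return its 16-bit register values."""
    if len(frame) < 5:
        raise ValueError("frame too short")
    body, received_crc = frame[:-2], struct.unpack("<H", frame[-2:])[0]
    if crc16_modbus(body) != received_crc:
        raise ValueError("CRC mismatch")
    if body[1] != 0x03:
        # 0x83 would be a Modbus exception response.
        raise ValueError(f"unexpected function code {body[1]:#04x}")
    byte_count = body[2]
    data = body[3:3 + byte_count]
    return list(struct.unpack(f">{byte_count // 2}H", data))
```

With pyserial, the request could be written with `serial.Serial("COM3", 9600, timeout=1)`, then `write(build_read_request(1, 0, 1))` and `read(7)` for a one-register reply; the port name and baud rate are placeholders, and how the raw register value maps to dB (for example, tenths of a dB) depends on the sensor manual.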

On my MacBook, I install Kafka with Homebrew and update the Kafka `server.properties` file with an appropriate listener, something like `advertised.listeners=PLAINTEXT://192.168.43.121:9092`. Then I start ZooKeeper and the Kafka server, and create a topic named eventhub7. With the built-in `kafka-console-producer` and `kafka-console-consumer`, I can confirm that Kafka is running and that messages reach topic eventhub7.

Back on the PC, in the same Python script, I add lines to post the received result to the newly created Kafka topic eventhub7.

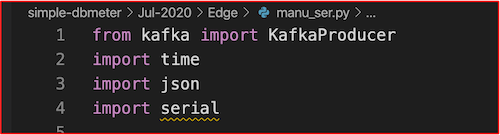

- Import the Kafka producer module.

- Hardcode the Kafka server IP, port and topic name.

- Format the result as JSON and send it to Kafka. This runs in an infinite loop, once every second.
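The producer code is only visible in the screenshot, so here is a hedged sketch of the same loop: format each reading as JSON and hand it to a send function once per second. The field names `deviceId`, `db` and `timestamp`, the default device ID, and the injected `read_sensor` and `send` callables are hypothetical; keeping the Kafka client outside the loop lets it be tried without a broker.

```python
import json
import time
from datetime import datetime, timezone
from typing import Callable, Optional


def make_payload(device_id: str, db_value: float, now: Optional[datetime] = None) -> bytes:
    """Serialize one reading as UTF-8 JSON (field names are hypothetical)."""
    now = now or datetime.now(timezone.utc)
    record = {"deviceId": device_id, "db": db_value, "timestamp": now.isoformat()}
    return json.dumps(record).encode("utf-8")


def run_loop(
    read_sensor: Callable[[], float],
    send: Callable[[bytes], object],
    device_id: str = "edge-pc-01",
    interval_s: float = 1.0,
    max_iterations: Optional[int] = None,
) -> int:
    """Read, serialize and send once per interval; loops forever when max_iterations is None."""
    sent = 0
    while max_iterations is None or sent < max_iterations:
        send(make_payload(device_id, read_sensor()))
        sent += 1
        time.sleep(interval_s)
    return sent
```

With the kafka-python client, for example, `send` could be `lambda v: producer.send("eventhub7", v)` where `producer = KafkaProducer(bootstrap_servers="192.168.43.121:9092")`; because `make_payload` already returns bytes, no value serializer is needed.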

At this step, in `kafka-console-consumer`, I can see messages continuously arriving in eventhub7.

Now it is time to push the data to the Azure cloud.

First, I create an event hub in the Event Hubs namespace. For MirrorMaker to replicate the data, its name must be the same as the Kafka topic, i.e., eventhub7.

Then, on the MacBook, I configure MirrorMaker. For the consumer side, in `source-kafka.config`, set `bootstrap.servers=127.0.0.1:9092`. For the producer side, in `mirror-eventhub.config`, set `bootstrap.servers` to your Event Hubs URL with port 9093, and replace the password field with your Event Hubs connection string, explained above in the preparation step.
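As I understand Microsoft's MirrorMaker tutorial for Event Hubs, the producer file uses SASL_SSL on port 9093, the PLAIN mechanism, the literal user name `$ConnectionString`, and the whole connection string as the password. The Python sketch below derives the host from the connection string and renders those four properties; the namespace name, policy name and key in the example are placeholders, not real values.

```python
def parse_connection_string(conn: str) -> dict:
    """Split 'Key=Value;Key=Value' pairs; values such as SharedAccessKey may contain '='."""
    pairs = (item.split("=", 1) for item in conn.strip().split(";") if "=" in item)
    return {key: value for key, value in pairs}


def eventhubs_bootstrap(conn: str) -> str:
    """Turn 'Endpoint=sb://<namespace>.servicebus.windows.net/' into '<host>:9093'."""
    endpoint = parse_connection_string(conn)["Endpoint"]
    host = endpoint.split("://", 1)[-1].rstrip("/")
    return f"{host}:9093"


def mirror_eventhub_config(conn: str) -> str:
    """Render producer properties for the Event Hubs Kafka endpoint."""
    conn = conn.strip()
    jaas = (
        "org.apache.kafka.common.security.plain.PlainLoginModule required "
        f'username="$ConnectionString" password="{conn}";'
    )
    lines = [
        f"bootstrap.servers={eventhubs_bootstrap(conn)}",
        "security.protocol=SASL_SSL",
        "sasl.mechanism=PLAIN",
        f"sasl.jaas.config={jaas}",
    ]
    return "\n".join(lines) + "\n"
```

Writing the returned text to `mirror-eventhub.config` gives the file passed via `--producer.config`. Since it embeds the connection string, it is worth keeping out of any shared repository.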

Use this command to start MirrorMaker: `kafka-mirror-maker --consumer.config source-kafka.config --producer.config mirror-eventhub.config --whitelist="eventhub.*"`
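The `--whitelist` value is a regular expression over topic names. A quick way to see which topics `eventhub.*` selects is to test it against a few names. This sketch assumes the pattern must match the whole topic name, as Java's `Matcher.matches()` does, which Python's `re.fullmatch` mirrors for a simple pattern like this one; the topic names other than eventhub7 and eventhub8 are made up.

```python
import re


def replicated_topics(whitelist: str, topics: list) -> list:
    """Return the topics whose full name matches the whitelist regex."""
    pattern = re.compile(whitelist)
    return [topic for topic in topics if pattern.fullmatch(topic)]


candidates = ["eventhub7", "eventhub8", "noise-raw", "my-eventhub7", "__consumer_offsets"]
print(replicated_topics("eventhub.*", candidates))
```

Under full matching, `my-eventhub7` is not selected even though it contains "eventhub", while any new topic whose name starts with "eventhub" should be picked up without changing the command.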

Once this is done, I can open the Process data menu of the Azure event hub eventhub7 and see live data coming in.

Let’s finish the setup on the cloud side.

First, the Stream Analytics job:

- Create a Stream Analytics job.

- Define the input as eventhub7.

- Define the output as a Power BI dataset in the selected workspace. In my case, it is my Teams workspace, HITS.

- Define the query that generates the expected fields in the dataset.

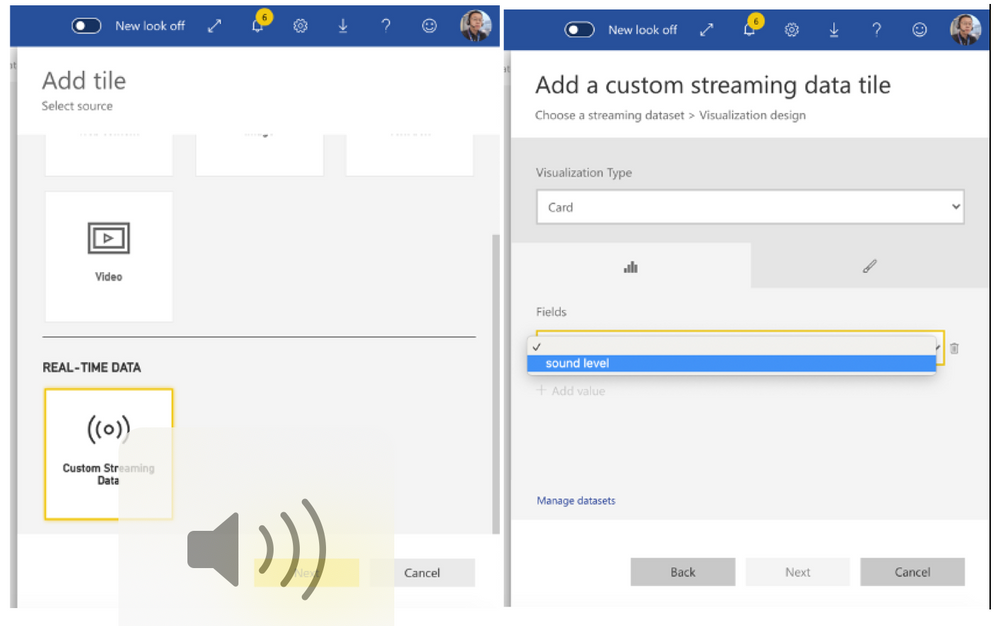

Then, the Power BI dashboard:

- Go to the workspace, HITS.

- In the Dashboards tab, create the dashboard by following the on-screen instructions.

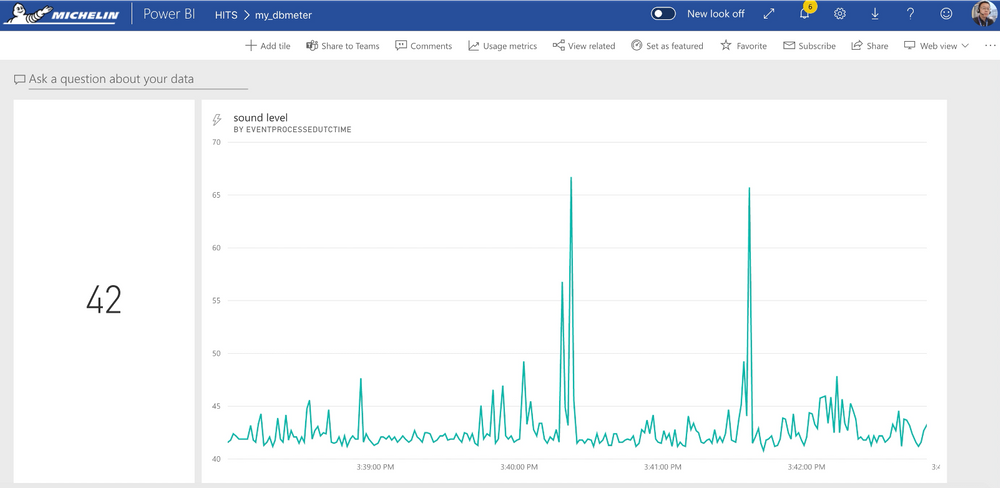

Bravo! As soon as you add tiles to the dashboard, you immediately get a live board like the one in the screenshot.

Kafka Streams

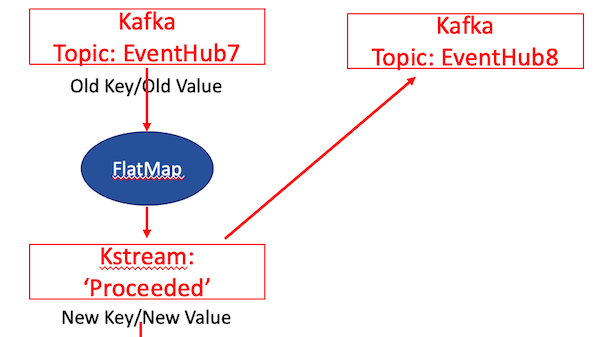

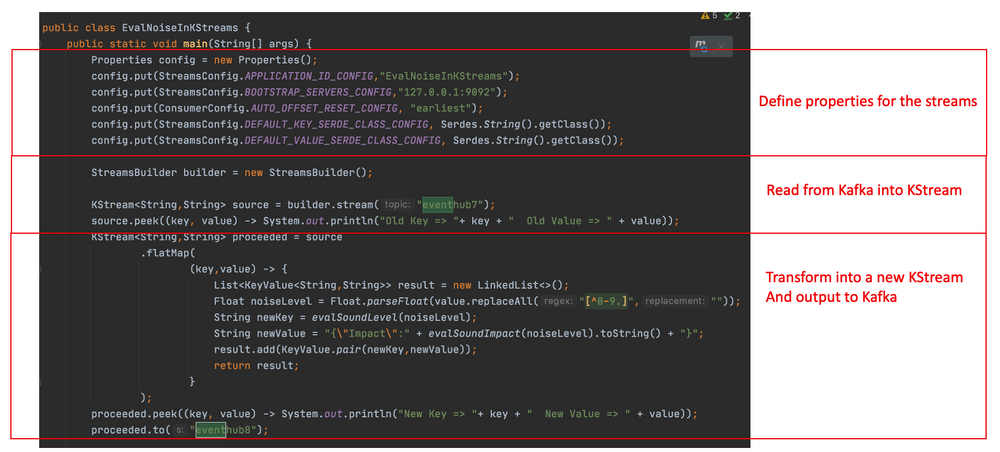

To play a little more with the stream data within Kafka, I decided to use the built-in Kafka Streams DSL functions to convert the dB value to a noise impact level. The log and the Java code are shown in the screenshots.

You can see that the result is posted to a new topic, eventhub8.
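The Java topology itself is only in the screenshots. As an illustration of the per-record logic that a value-mapping step (in the Kafka Streams DSL, typically `mapValues`) would apply, here is a Python sketch. The band boundaries and labels are hypothetical, not the author's values, and the `db` field name assumes a JSON payload with that key.

```python
import json

# Hypothetical noise bands: (exclusive upper bound in dB, label).
IMPACT_BANDS = [(40.0, "quiet"), (60.0, "moderate"), (85.0, "loud")]


def impact_level(db: float) -> str:
    """Return the label of the first band whose upper bound exceeds the reading."""
    for upper, label in IMPACT_BANDS:
        if db < upper:
            return label
    return "harmful"


def map_value(raw: bytes) -> bytes:
    """Per-record transform: add an 'impact' field to a JSON reading."""
    record = json.loads(raw)
    record["impact"] = impact_level(float(record["db"]))
    return json.dumps(record).encode("utf-8")
```

In the Java version, the equivalent would read from the source topic (presumably eventhub7), apply the mapping to each value and write the result with `to("eventhub8")`.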

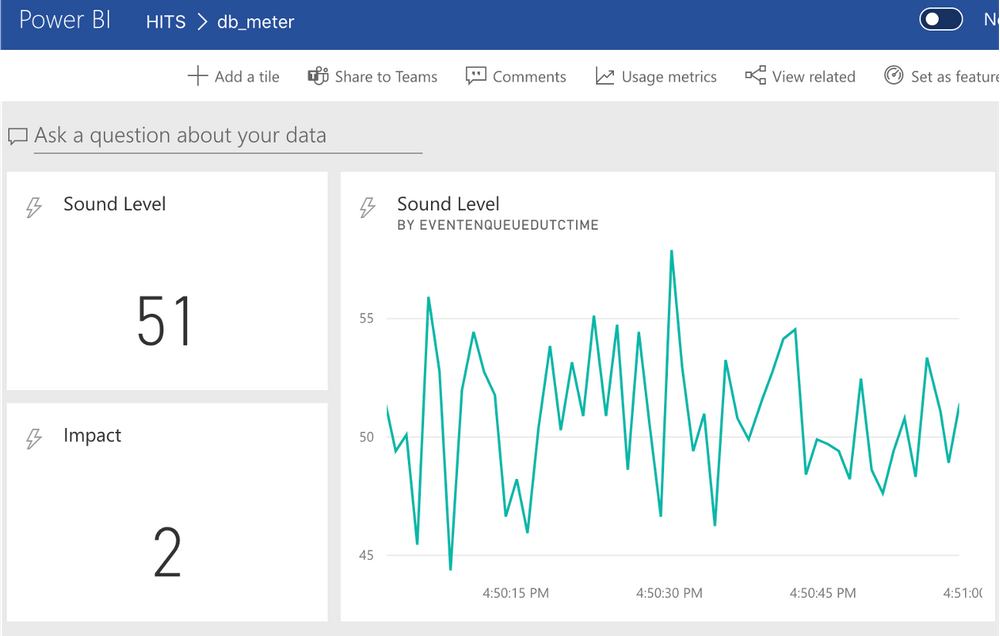

As the MirrorMaker command above shows, I configured it to replicate all topics whose names start with ‘eventhub’. So, following the same approach as for eventhub7, I create a new Azure event hub called eventhub8, and update the Stream Analytics job query to extract data from eventhub8 and export it to another Power BI dataset.

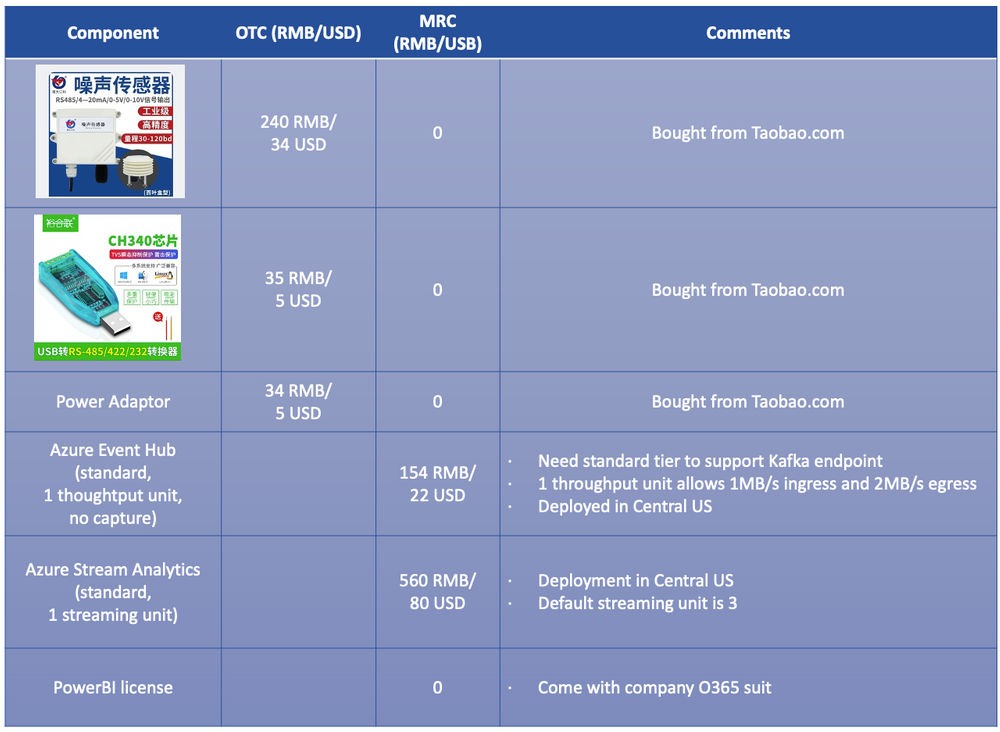

Cost

The costs of the hardware and Azure services I used are listed below.

It took me roughly two months to go from the idea to the final runnable code.

Code & Configuration

Afterthoughts

As a learning exercise, I purposely used as many new components as possible, so the journey was bumpy and I was sometimes stuck on one task for days. In such situations, I talked to peers and searched for courses on Udemy, videos on YouTube and sample code on GitHub; usually these helped me find a solution or at least a workaround. This showed me how critical a good technical network, both inside and outside the company, is for technical experts.

Through this practice, I better understand the challenges an application team could face, especially when building event-driven streaming applications that span local and cloud environments. This is certainly important and helpful when I consider the topics in my own field, hybrid computing.

In this month's brown-bag session, I shared this case with my Asia IT colleagues. Afterwards, several people told me that it was now clearer to them what Kafka is and what it can do. So a real-life demo is a good way for IT experts to promote new technologies in our organization. We should do it more.