Securing a Virtual Desktop in a Public Cloud - Conclusion

As I documented in previous articles, we moved our VDI platform to dedicated subscriptions and segmented the users by separating Michelin from Non-Michelin. On this last leg of our journey, we take the final step in securing our Virtual Desktops in the cloud: implementing a form of micro-segmentation. Many network and security vendors define this somewhere in their portfolios with different wording, but essentially it means allowing connectivity from the workload only to what is required and nothing more. Here is how VMware defines it.

Options

After segmenting the two environments from each other (Michelin and Non-Michelin), the next step, as I mentioned, was to provide micro-segmentation. This could be done in a couple of ways: either with Network Security Groups (NSGs), native in Azure, or via VMware's Northstar (or NSX+, as it is being referred to now) platform. Northstar is NSX as a Service from VMware, hosted in VMware's cloud. The pros and cons for each, from my point of view, are listed below:

Northstar (NSX+)

PRO:

- Separate NSX management console

- Known and used today (NSX)

CON:

- Not ready for Public Cloud

NSGs

PRO:

- In use today

- Well known

CON:

- Can be difficult to manage

Unfortunately, because NSX+ just isn't ready yet in the public cloud, we proceeded with the NSG implementation to bring the desired level of micro-segmentation to our platform. To test this, we implemented a new subnet on our test platform. The purpose was twofold. First, it gave us a 'safe' area to test and play that would not impact other testing on our test platform. Second, it created an environment where, once NSX+ is ready for beta testing, we could install a Public Cloud Gateway (PCG), which NSX needs, in the subnet with no worries about impacting anything else.

We already have NSGs set up and pinned at the subnet level for simpler management. To provide a level of micro-segmentation, we needed to implement NSGs at the NIC level of the VM (actually, we are doing this at the pool level). To do this, we needed to create a 'base' NSG that all desktops would get and that would not conflict with the NSG on the subnet. This base NSG allowed just enough flows for the desktop to be built and the user to connect.

It's important to note that the team needed to understand the flows and the order of processing that happens with NSGs at the NIC and subnet level. For outbound flows from the desktop, the NIC NSG is processed first, followed by the subnet NSG. For inbound flows to the desktop, the subnet NSG is processed first, followed by the NIC NSG. A flow has to be allowed at both levels to get through. You need to be sure that you understand this well, or you could end up with conflicting rules and your flows will not work.
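To make the processing order concrete, here is a minimal Python sketch of it. This is a simplified model and not how Azure implements it: rules match on destination port only (real NSG rules also match on source, destination, protocol and direction), and the rule names, priorities and ports are made up for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Rule:
    name: str
    priority: int          # lower number is evaluated first
    port: Optional[int]    # None matches any port
    allow: bool


def first_match(rules: List[Rule], port: int) -> Rule:
    """Return the first rule, in priority order, that matches the port."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if rule.port is None or rule.port == port:
            return rule
    raise ValueError("rule set needs a catch-all rule")


def trace_flow(direction: str, nic_nsg: List[Rule], subnet_nsg: List[Rule],
               port: int) -> Tuple[bool, str]:
    """Return (allowed, name of the rule that decided the outcome)."""
    if direction == "outbound":
        order = [nic_nsg, subnet_nsg]   # NIC NSG first, then subnet NSG
    else:
        order = [subnet_nsg, nic_nsg]   # subnet NSG first, then NIC NSG
    decided = ""
    for nsg in order:
        rule = first_match(nsg, port)
        decided = rule.name
        if not rule.allow:
            return False, decided       # a deny at either level stops the flow
    return True, decided


# Hypothetical rule sets: the subnet NSG allows DNS (53), the NIC NSG does not.
NIC_NSG = [
    Rule("a-nic-https", 100, 443, True),
    Rule("d-nic-any", 4096, None, False),
]
SUBNET_NSG = [
    Rule("a-sub-https", 100, 443, True),
    Rule("a-sub-dns", 110, 53, True),
    Rule("d-sub-any", 4096, None, False),
]
```

With these sample rule sets, `trace_flow("outbound", NIC_NSG, SUBNET_NSG, 53)` is denied by the NIC NSG even though the subnet NSG would have allowed it. This is the kind of mismatch between the two levels that makes a flow fail while each NSG looks reasonable on its own.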

NSG Setup

Base NSG

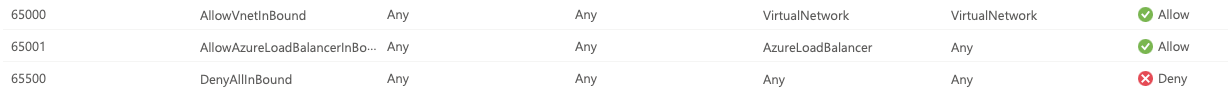

The base NSG needs to contain the necessary flows to be able to provision and manage the VDI desktop from Horizon Cloud. We modified the Horizon default template slightly. We kept the 65000 rules as shown below:

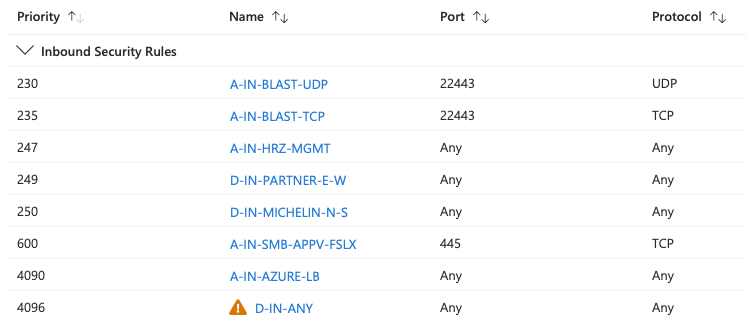

These 65000 rules exist on both Inbound and Outbound rule sets. Inbound we added the Blast and PCoIP rules in our naming format and discarded the tcpsidechannel and usbredirect. Our complete Inbound rule set is shown below:

The above Inbound rules allow the user connectivity to the virtual desktop after properly authenticating. As you can see it's quite simple.

Note: The screenshot shows Blast and PC over IP (PCoIP). PCoIP is being deprecated from the VMware Horizon solution. For more information, see https://blogs.vmware.com/euc/2023/03/announcing-end-of-support-for-pcoip-in-vmware-horizon.html

We implemented rules on Inbound and Outbound that block East-West traffic (this is also in the NSG at the subnet level), and we block subnet-to-subnet traffic. I would show those, but after blurring out the IP address ranges they would not be understandable by anyone outside of this effort.
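Since the real ranges cannot be shown, here is a small Python sketch of what these block rules express, using made-up address ranges. The subnet values and the function names are assumptions for illustration only; the actual rules live in the NSGs, not in code.

```python
import ipaddress

# Hypothetical ranges for illustration only; the real ranges are not published.
DESKTOP_SUBNET = ipaddress.ip_network("10.50.0.0/24")
PEER_SUBNETS = [
    ipaddress.ip_network("10.50.1.0/24"),
    ipaddress.ip_network("10.50.2.0/24"),
]


def classify(src: str, dst: str) -> str:
    """Label a flow as east-west, subnet-to-subnet, or other."""
    source = ipaddress.ip_address(src)
    dest = ipaddress.ip_address(dst)
    if source in DESKTOP_SUBNET and dest in DESKTOP_SUBNET:
        return "east-west"          # desktop talking to another desktop
    if source in DESKTOP_SUBNET and any(dest in net for net in PEER_SUBNETS):
        return "subnet-to-subnet"   # desktop talking to a neighbouring subnet
    return "other"


def should_block(src: str, dst: str) -> bool:
    """Both lateral categories are blocked; everything else goes on to the allow rules."""
    return classify(src, dst) != "other"
```

A flow from one desktop to another desktop in the same subnet, or to a neighbouring subnet, is blocked before any allow rule is considered. Only flows leaving toward other destinations go on to be checked against the specific allow rules.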

Now we needed to ensure that the Horizon control plane has visibility to the desktop. We duplicated the rules that exist on our subnet NSG and included them here on both Inbound and Outbound. Then we added our Federation rules along with DNS, DHCP and AD flows.

With this base setup we tested user access to the virtual desktop. Things looked good in the NSG, but the connection wouldn't complete (we could not get to the VDI instance). We would get the message saying it could take 15 minutes to create the desktop, then eventually the message would disappear and there was nothing. We had missed something.

Troubleshooting

We were having issues getting the desktop delivered, and we were not sure what we had missed in the NSG rules (we obviously knew we had missed something, but not WHAT). We configured and deployed Azure Flow Monitor and Log Analytics so we could see which flows were working and which flows were being dropped. I highly recommend that you set this up and use it if you deploy Horizon VDI in Azure. It's the only way to really 'see' what is being allowed or denied by your NSG rules, and you can see which rule(s) actually denied your flow.

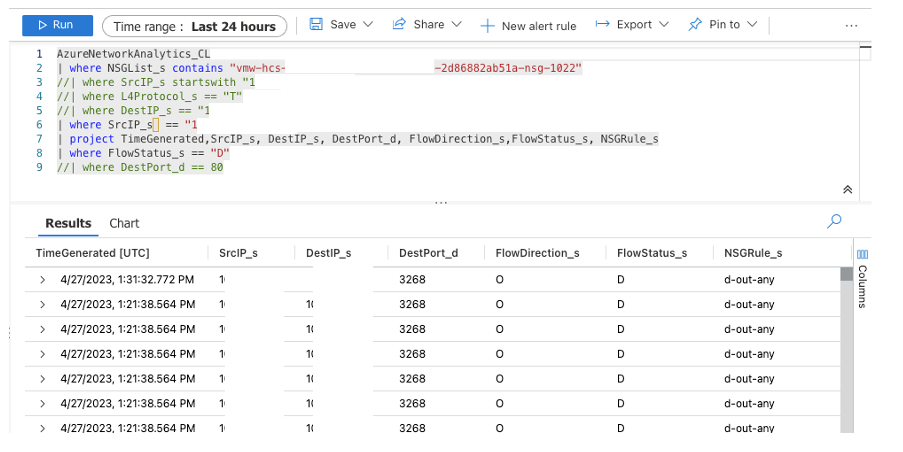

Once we had logging enabled, we could go to the analytics workspace and focus on flows that were being denied because they were hitting the d-out-any rule on outbound traffic, as shown in the example screenshot below.

Note: Just because you see a flow denied doesn't necessarily mean that you need to open the flow. This is where knowing and understanding your flows is critical. Just opening flows because you see them as denied is a bad practice and not in line with zero trust methodology. Similarly, setting ports to 'any' because you don't know or understand the flow or implementing a bi-directional flow because it's not understood who the originator is are just as bad if not worse ;)

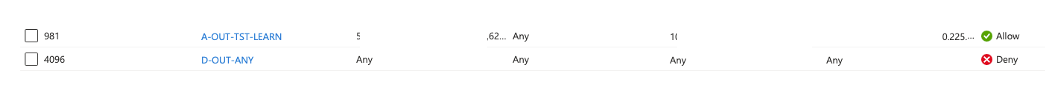
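The same filtering can be sketched outside the portal. The Python below assumes you have exported raw flow records as pairs of rule name and comma-separated flow tuple, with the tuple starting with timestamp, source IP, destination IP, source port, destination port, protocol, direction and decision. The sample records and addresses are invented. Only d-out-any is a rule name from our setup; a-out-https is hypothetical.

```python
from typing import List, NamedTuple, Tuple


class Flow(NamedTuple):
    rule: str
    src_ip: str
    dst_ip: str
    dst_port: int
    protocol: str    # "T" = TCP, "U" = UDP
    direction: str   # "I" = inbound, "O" = outbound
    decision: str    # "A" = allowed, "D" = denied


def parse_flow(rule: str, flow_tuple: str) -> Flow:
    """Parse the first eight comma-separated fields of a flow tuple."""
    fields = flow_tuple.split(",")
    return Flow(rule, fields[1], fields[2], int(fields[4]),
                fields[5], fields[6], fields[7])


def denied_outbound(records: List[Tuple[str, str]], rule_name: str) -> List[Flow]:
    """Keep only outbound flows that were denied by the named rule."""
    flows = [parse_flow(rule, raw) for rule, raw in records]
    return [f for f in flows
            if f.rule == rule_name and f.direction == "O" and f.decision == "D"]


# Invented sample records: (rule name, flow tuple).
SAMPLE_RECORDS = [
    ("a-out-https", "1700000000,10.50.0.4,192.0.2.10,50100,443,T,O,A"),
    ("d-out-any", "1700000005,10.50.0.4,10.20.0.10,50123,389,T,O,D"),
    ("d-out-any", "1700000009,10.50.0.4,10.20.0.11,50140,88,T,O,D"),
]
```

Calling `denied_outbound(SAMPLE_RECORDS, "d-out-any")` returns the two denied flows and leaves out the allowed HTTPS flow. Each result is only a candidate for review, not a rule to open automatically, for the reasons given in the note above.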

We discovered we had missed some IP addresses needed for our Active Directory Domain configuration as well as a couple of other system flows. How did we troubleshoot them? Well, we created what I call a Learn rule. This rule allows any traffic from any source in the subnet to any destination on any port and we placed this right above the Deny rule. Our example is shown below (IPs and Ports are covered):

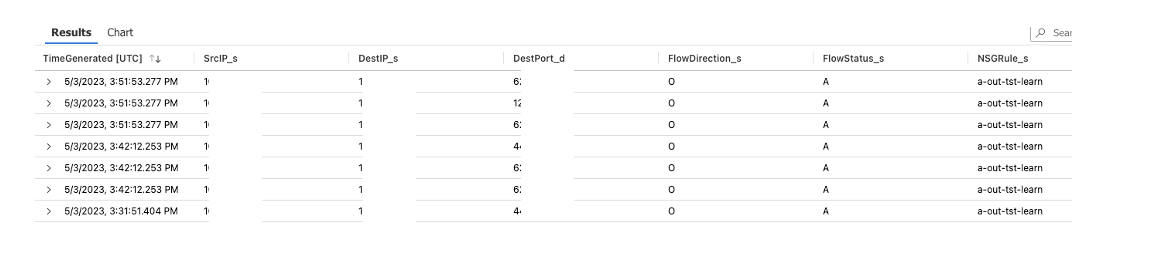

We then launched connectivity to the virtual desktop again. When we saw the failed attempt, we could look in the Log Analytics workspace for flows that hit that rule in the NSG as shown below (IPs and Ports are covered):

Here we could determine missed/needed flows. Once those IPs were added to the existing rule and all system flows were accounted for then desktop connectivity was possible.
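When many flows hit the Learn rule, it helps to collapse them into a short list of distinct destinations and ports before deciding what belongs in a permanent rule. The following is a minimal Python sketch of that step. It assumes the flows have already been pulled out of the logs as (destination IP, destination port, protocol) tuples, and the sample values are invented.

```python
from collections import Counter
from typing import List, Tuple

FlowKey = Tuple[str, int, str]   # (destination IP, destination port, protocol)


def summarize_learned_flows(flows: List[FlowKey]) -> List[Tuple[FlowKey, int]]:
    """Count identical flows and list the most frequent first."""
    counts = Counter(flows)
    # Sort by hit count (descending), then by the flow itself for stable output.
    return sorted(counts.items(), key=lambda item: (-item[1], item[0]))


# Invented flows that matched the Learn rule during one connection attempt.
LEARNED = [
    ("10.20.0.10", 389, "T"),
    ("10.20.0.10", 389, "T"),
    ("10.20.0.11", 88, "T"),
    ("10.20.0.10", 53, "U"),
]
```

Each entry in the summary is then checked against what you know about the required flows. Only the ones you can explain get added to an explicit rule, and the Learn rule is removed once the list is empty.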

We had successfully built a base NSG that would be the starting point for virtual desktops requiring micro-segmentation. By applying this NSG to a particular pool and adding only the flows required by the users to execute their job we implemented a form of micro-segmentation.
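The idea of a base NSG plus per-pool additions can be sketched as a simple merge with a sanity check. The Python below is an illustration under assumptions, not our actual tooling: the rule names other than d-out-any are hypothetical, and the check reflects the fact that NSG rule priorities must fall between 100 and 4096 and be unique per direction.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class NsgRule:
    name: str
    direction: str   # "Inbound" or "Outbound"
    priority: int    # 100-4096, unique per direction
    access: str      # "Allow" or "Deny"


def build_pool_nsg(base: List[NsgRule], pool_specific: List[NsgRule]) -> List[NsgRule]:
    """Merge base and pool rules, rejecting invalid or colliding priorities."""
    seen: Dict[Tuple[str, int], str] = {}
    for rule in base + pool_specific:
        if not 100 <= rule.priority <= 4096:
            raise ValueError(f"{rule.name}: priority {rule.priority} out of range")
        key = (rule.direction, rule.priority)
        if key in seen:
            raise ValueError(f"{rule.name} collides with {seen[key]} at {key}")
        seen[key] = rule.name
    return sorted(base + pool_specific, key=lambda r: (r.direction, r.priority))


# Hypothetical base rules and one hypothetical pool for developers.
BASE = [
    NsgRule("a-in-blast", "Inbound", 100, "Allow"),
    NsgRule("d-out-any", "Outbound", 4096, "Deny"),
]
DEV_POOL = [NsgRule("a-out-git", "Outbound", 200, "Allow")]
```

Keeping the pool-specific rules in their own list means the base stays identical across pools, and a collision is caught before anything is deployed.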

Summary

Before attempting any type of micro-segmentation you must know the required flows. Not knowing the required flows will only end in frustration and nothing will change (nothing will end up being micro-segmented). Take the time to know and understand the flows needed and then implement the rules. You will most likely not get everything on the first attempt but you should get more than 75% of the flows. Then you can troubleshoot the remaining missing flows and add them as you need.

This is not a pretty solution. This is not the perfect solution nor is it sustainable at scale but it is a solution that meets the current need. We can now onboard temporary users (like developers, etc.), give them a virtual desktop and restrict what they can connect to as it relates to their mission only. In parallel we have proven that we can have containment and control in this area of our cloud based VDI platform.

As I mentioned, this is not sustainable at scale, so I am already looking for a better way of managing it. Automating the configuration, deployment and management of these NSGs is one option I am looking at (this really is a must-have if we want this to be a service). Our cloud team does this today with what they manage. We could have our own DevOps team follow the existing process and standards, or I could wait patiently for NSX+ to be ready for Public Cloud. Personally, I have high hopes for NSX+ and am waiting somewhat patiently.

In summary, our journey of securing a virtual desktop in a public cloud began with moving (rebuilding) our current infrastructure from shared subscriptions to dedicated subscriptions. This also included the deployment of a Universal Broker to manage all access via a single URL. This move was a key foundational piece of the effort to be ready for securing the virtual desktop. Once the pod was rebuilt and we had added a new test subnet to the Horizon console, we began testing and validating NSG management (after an architecture decision between NSX+ and NSG management). The successful management of NSGs has proven that we can micro-segment a virtual desktop in our environment. We have a few more tests to do on our test platform, and then it will be ready to implement in our Production POD once we have the accompanying processes documented.

The Big Why?

Our cloud VDI solution was working as designed so why did we go on this long journey? We did it for several reasons, but the primary ones were:

- Our existing platform was in a shared subscription with other services. Due to the various roles allowed by many in this shared environment we could not always be as reactive (or proactive) as we wanted to be.

- As mentioned above, since this was a shared subscription, sometimes changes to other platforms impacted our platform and changes to our platform impacted theirs.

- We could not easily differentiate Michelin users from long term or short-term partners as they all used the same platform and within the same IP scope.

- If a bad actor were to gain access to our VDI platform, there were not strong enough controls in place to limit the damage (all had the same access).

We built our original platform to service the needs we had at the time and with the knowledge we had at the time. Since then, we have learned, and our needs have grown. This new deployment will meet our current needs, be more secure, and allow us to grow into it as our needs grow.

Special Thanks

Special thanks to @david decarlo, @fabien anglard, @naveen bhatt and @girish bharambe who had to do all the hard work so I could write about it and make it sound easy. This wouldn't have been possible without them!