Choosing an Azure Database: An Evaluation of Cost and Performance

Do you have a database that currently runs on an on-prem server or cloud VM, and you would like to switch to a PaaS database in Azure to take advantage of a more evergreen existence?

Perhaps you have an application that uses Excel files or an Access database, and you have been considering moving your data to an Azure cloud database?

Maybe you just have a new application to develop, and you want to start with a database in Azure?

Before you blindly add to the infrastructure cost for your company, why not continue reading : we will compare the cost and performance for several different configurations of Azure SQL and Azure for PostgreSQL.

How much will it Cost?

I have an application that has been in production for about 3 years. It uses an SQL Server Express database on a local on-prem server. For quite some time I have been interested in moving the database to one of the PaaS options in Azure. My primary motivation is a little selfish - I want to escape the responsibility of maintaining the database with the latest version and having patches applied to the database server every month. Of course there are some other great advantages as well:

- The ability to easily scale the database up or down

- Automatic back-ups

- Monitoring

- Lots of metrics that are kept automatically

Because my current database is SQL Server based, Azure SQL seemed to be the best option for me as this would allow me to keep the existing SQL statements in my application with no modifications. However, PostgreSQL is very popular at Michelin and having seen firsthand that it performs well, I was very curious to know which was better. Thus I decided to do some performance testing with real data using actual queries from my application.

I would like to be a responsible employee and avoid spending money on things that are unnecessary (even when it is not my money). My application only has 3 or 4 concurrent users, so I was especially interested in the low-end options in Azure. At the same time, I also want the performance to be at least as good as the current database used for my application.

With these goals in mind, I chose to test the following different Azure database configurations:

|

Database |

Config |

Description |

|

Azure SQL |

DTU

10 |

10

DTUs, 250 Gb storage |

|

DTU

20 |

20

DTU, 250 Gb storage |

|

|

DTU

50 |

50

DTU, 250 Gb storage |

|

|

DTU

100 |

100

DTU, 250 Gb storage |

|

|

GEN 2 |

General

Purpose: 2 vCores, 10.2 GiB memory |

|

|

PostgreSQL Flexible Server |

Burst

1 |

Burstable:

1 vCore 2 GiB memory |

|

Burst

2 |

Burstable:

2 vCores 4 GiB memory |

|

|

Std

D2 |

General

Purpose v3: 2 vCores, 8 GiB memory |

|

|

Std

D4 |

General

Purpose v3: 4 vCores, 16 GiB memory |

|

|

PostgreSQL Single Server |

Basic

1 |

Basic:

1 vCore, 2 GiB memory |

|

Basic

2 |

Basic:

2 vCores, 4 GiB memory |

|

|

Gen 2 |

General

Purpose: 2 vCores, 10 GiB memory |

|

|

Gen 4 |

General

Purpose: 4 vCores, 20 GiB memory |

The table below describes the cost for each configuration. The last column shows the final cost if you want to have separate databases for a Development, Industrialization, and Production environment (it is simply the monthly cost multiplied by three). This was my attempt to raise awareness concerning the whole database cost of the solution. Of course this final cost could be decreased by stopping the databases while not in use or by using a cheaper configuration for Development and Industrialization.

|

Database |

Config |

Cost Criteria |

$/Month |

3 Env ($) |

|

Azure SQL |

DTU

10 |

$0.0202

/ hour |

14.72 |

44.16 |

|

DTU

20 |

$0.0403

/ hour |

29.43 |

88.29 |

|

|

DTU

50 |

$0.1008

/ hour |

73.61 |

220.83 |

|

|

DTU

100 |

$0.2016

/ hour |

147.18 |

441.54 |

|

|

GEN 2 |

$222.24

(Compute) + $145.95 (License) / month |

368.19 |

1,104.57 |

|

|

PostgreSQL Flexible Server |

Burst

1 |

$0.0170

/ hour + $0.115 / Gb storage |

12.99 |

48.27 |

|

Burst

2 |

$0.0680

/ hour + $0.115 / Gb storage |

50.22 |

159.96 |

|

|

Std

D2 |

$0.1710

/ hour + $0.115 / Gb storage |

125.41 |

385.53 |

|

|

Std

D4 |

$0.3420

/ hour + $0.115 / Gb storage |

250.24 |

760.02 |

|

|

PostgreSQL Single Server |

Basic

1 |

$0.0408

/ hour + $0.120 / Gb storage |

30.98 |

92.94 |

|

Basic

2 |

$0.0816

/ hour + $0.120 / Gb storage |

60.77 |

182.31 |

|

|

Gen 2 |

$0.2102

/ hour + $0.138 / Gb storage |

154.74 |

464.22 |

|

|

Gen 4 |

$0.4204

/ hour + $0.138 / Gb storage |

308.18 |

924.54 |

What is a DTU?

For Azure SQL, one of the pricing models is based on Database Transaction Units (DTUs). According to the documentation, a DTU represents a blended measure of CPU, memory, reads, and writes. For me, this is like telling someone who works in miles that the place they are looking for is so many kilometers away. So let's see if we can find a better way to picture what a DTU is.

One of the complex screens in my application contains a master/detail grid with lots of columns. To populate this screen requires collecting and merging data from 7 separate complex queries returning about 2,700 rows in one particular case. I used the metrics in Azure to capture the total DTUs consumed for this complex set of queries. I found that my application would use .1 DTU for this query. In other words, it took 10% of a DTU to query for all of the data necessary to populate a single complex screen.

The Azure documentation explains that doubling the DTUs for a configuration is equivalent to doubling the resources available to the database. However, for me this does not completely clarify whether the DTU configuration is just about setting the price and to what degree it impacts the performance. You will find the clear answer to this in the next section on performance.

PostgreSQL Configurations

For the PostgreSQL configurations, I chose to test both the Flexible Server and the Single Server deployment modes.

With the Flexible Server you get:

- The ability to scale up and down within a great range of cost/server configurations.

- The ability to stop and restart the database server. However, if you stop the database server it will automatically restart after 7 days.

- Minimum storage size is 32 Gb.

- Private access is accomplished through VNet Injection.

For the Single Server:

- You have to choose from Basic, General Purpose, Memory Optimized. Unfortunately, you cannot scale up and down between Basic and General Purpose.

- Minimum storage for the General Purpose tier is 100 Gb.

- Private access is accomplished by adding a Private Endpoint.

With either PostgreSQL server model, you are really purchasing a database server and therefore it is possible to buy one server and create several databases. If you have several small applications, this enables the option to buy one server and add a database for each application thereby splitting the total cost across each application.

Some Other Considerations

If you are moving from an Access database, Azure SQL will most likely provide a quicker transition because it supports auto-increment columns.

There are free database tools for both Azure SQL and Azure for PostgreSQL such as SQL Server Management Studio for Azure SQL, and DBeaver which can be used for either.

You will be pleased to find how easy it is to scale these Azure databases up and down with just a few clicks. With Azure SQL it only takes a few minutes to apply the changes. Azure for PostgreSQL is a little slower but still finishes in under 10 minutes.

What about Performance?

I was able to replicate my production database in Azure for both Azure SQL and Azure for PostgreSQL. This allowed me to do performance testing with 3 years of real data, and made it possible to do a fair comparison between database types since they had exactly the same schema populated in exactly the same order.

I created both databases in a sandbox environment in Azure, but I did not want to invest in the time and effort to create them within their own Virtual Network and request firewall rules to allow me to connect from on-prem. Therefore, I chose to use Azure Functions to do the performance testing since these could connect to the database internally through Azure (by allowing access from Azure services).

Single-user Performance

One of the complex screens for my application has 5 tabs which each display a master/detail grid. I measured the performance of the complex set of queries that my application would use to populate all 5 tabs requiring 31 separate queries returning a total of 17,921 rows. I repeated the set of queries 10 times with a 1 second delay between each query so that I could measure an average query time.

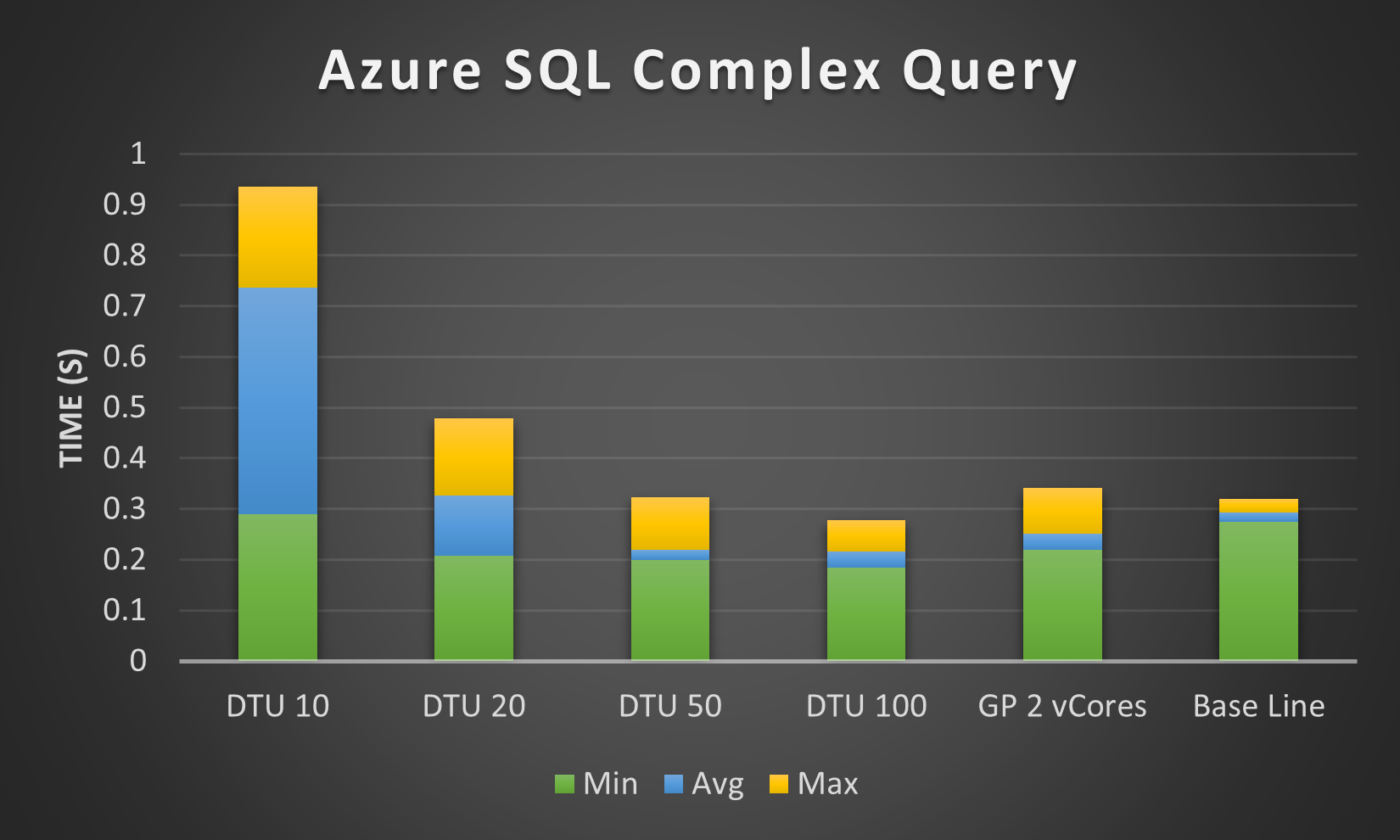

The chart below shows the results for each Azure SQL configuration including the Base Line (the current production database included to provide a kind of reference point). You can see from these results that increasing the DTUs for the configuration does in fact increase the performance, so it is not just a setting for pricing.

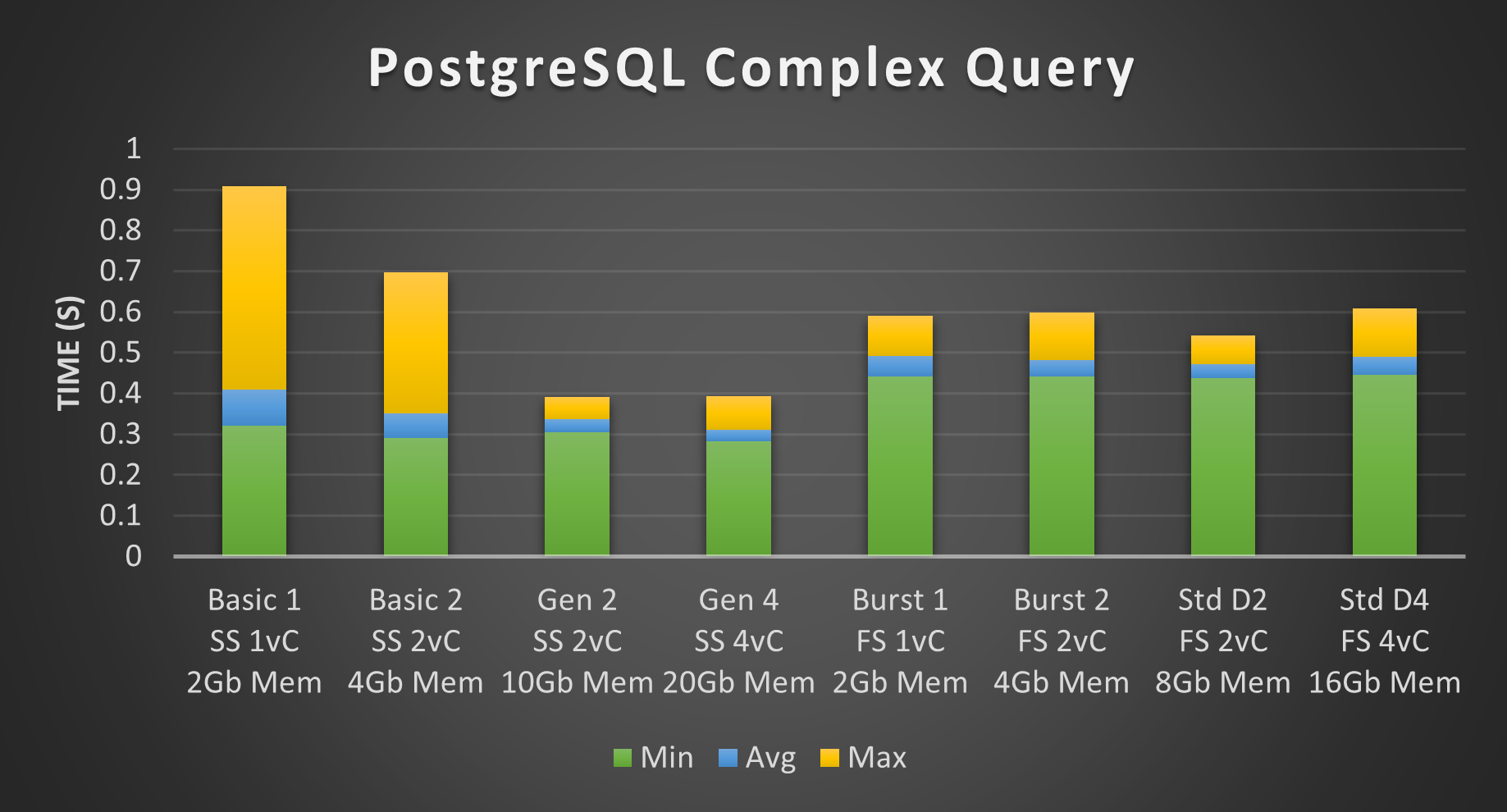

The chart below shows the PostgreSQL performance with the Single Server configurations in the first 4 columns and the Flexible Server configurations in the last 4 columns. You will find a comparison of PostgreSQL and AzureSQL in a later section, but there is one particular difference that is worth noting now. The delta between the average and minimum query time (the blue section in the middle of each column) was more invariant with respect to the server configuration for PostgreSQL than Azure SQL. Maybe this is an indication of a more efficient query engine.

Multi-user Performance

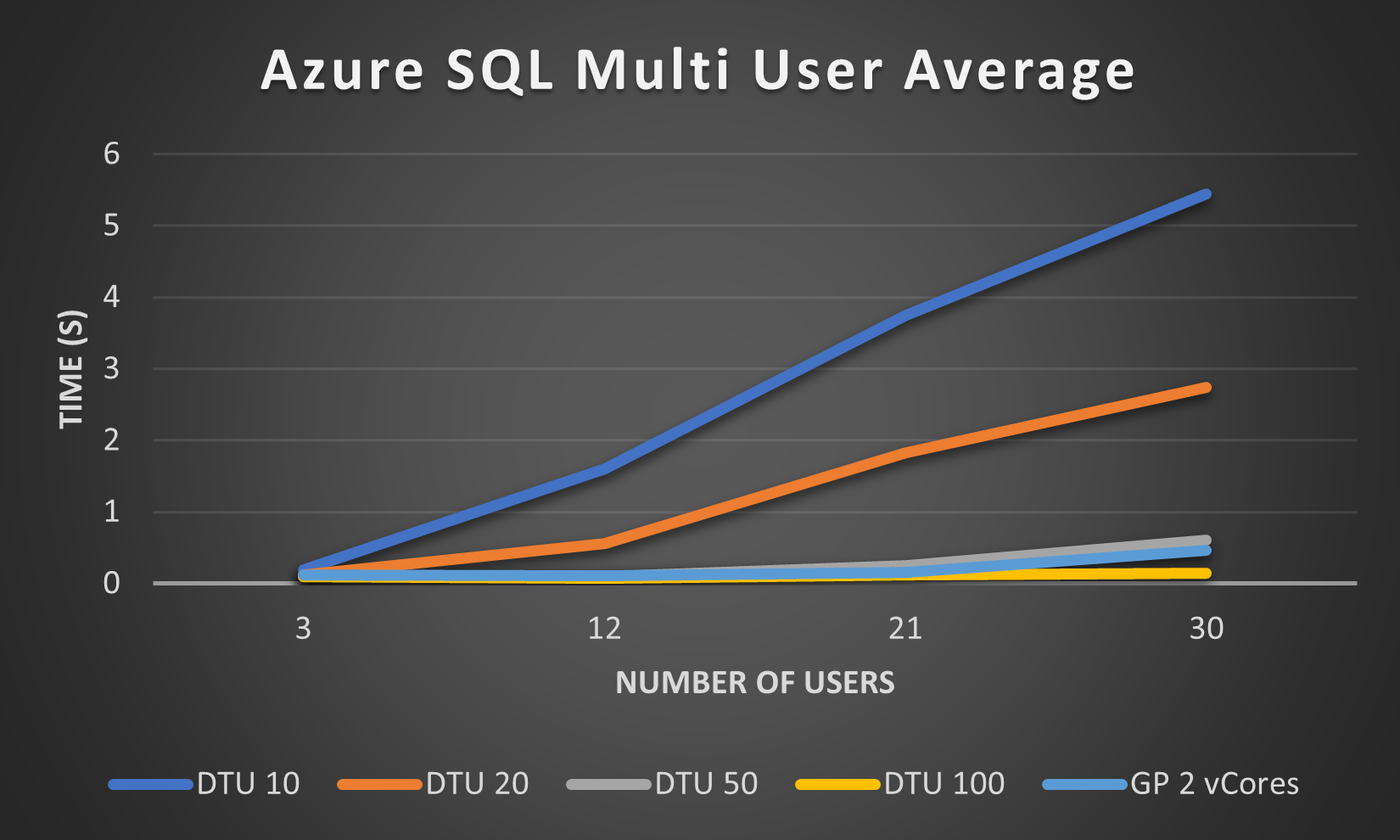

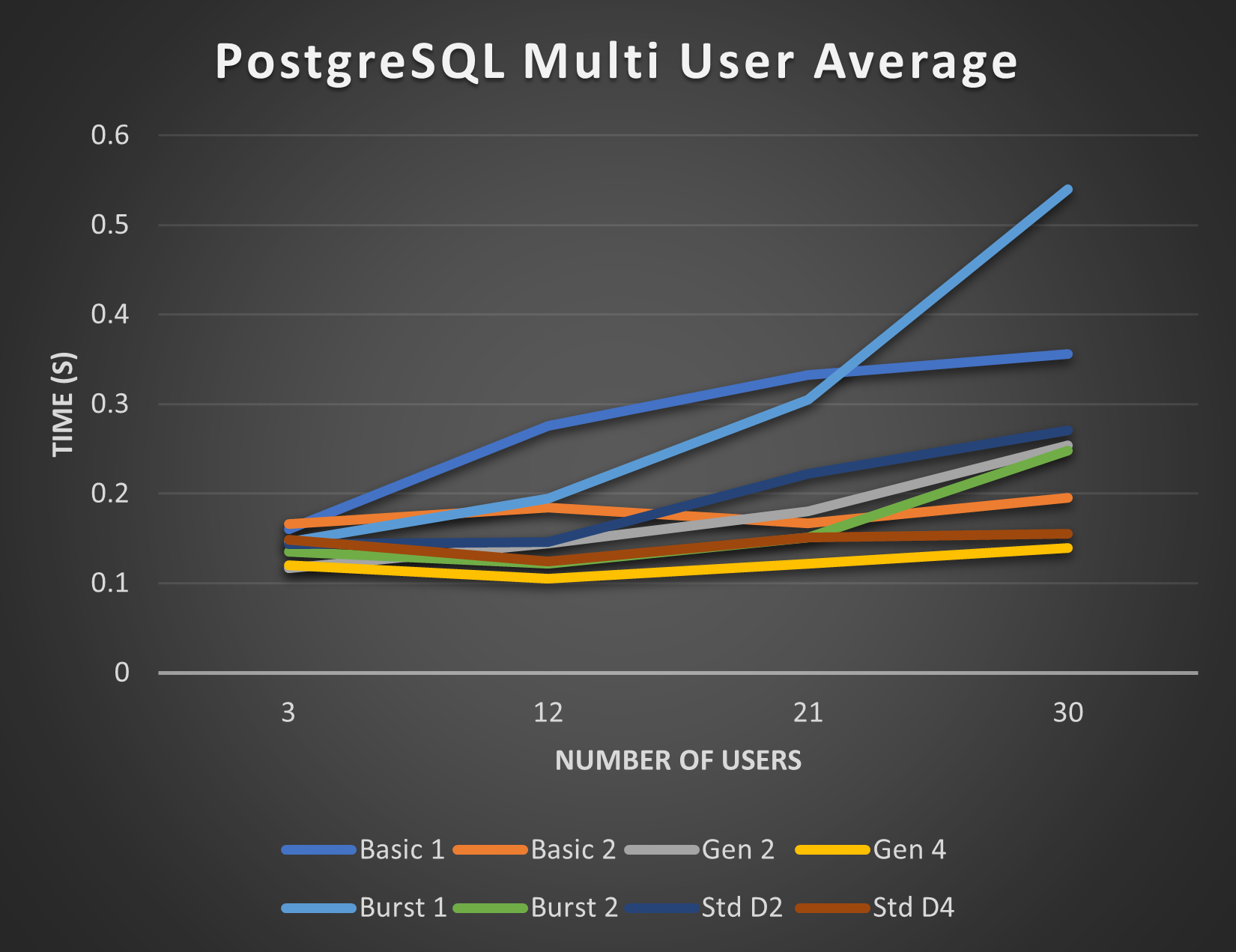

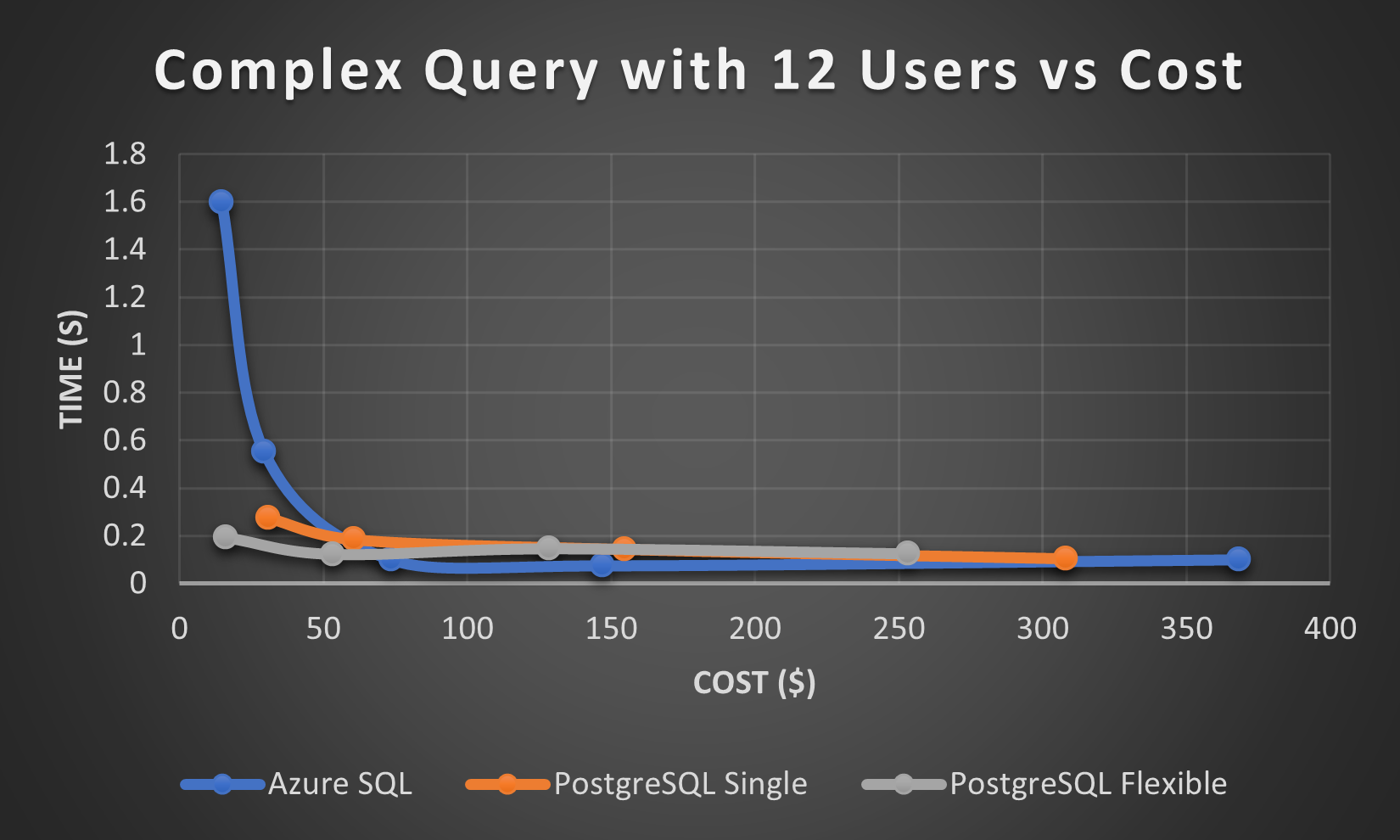

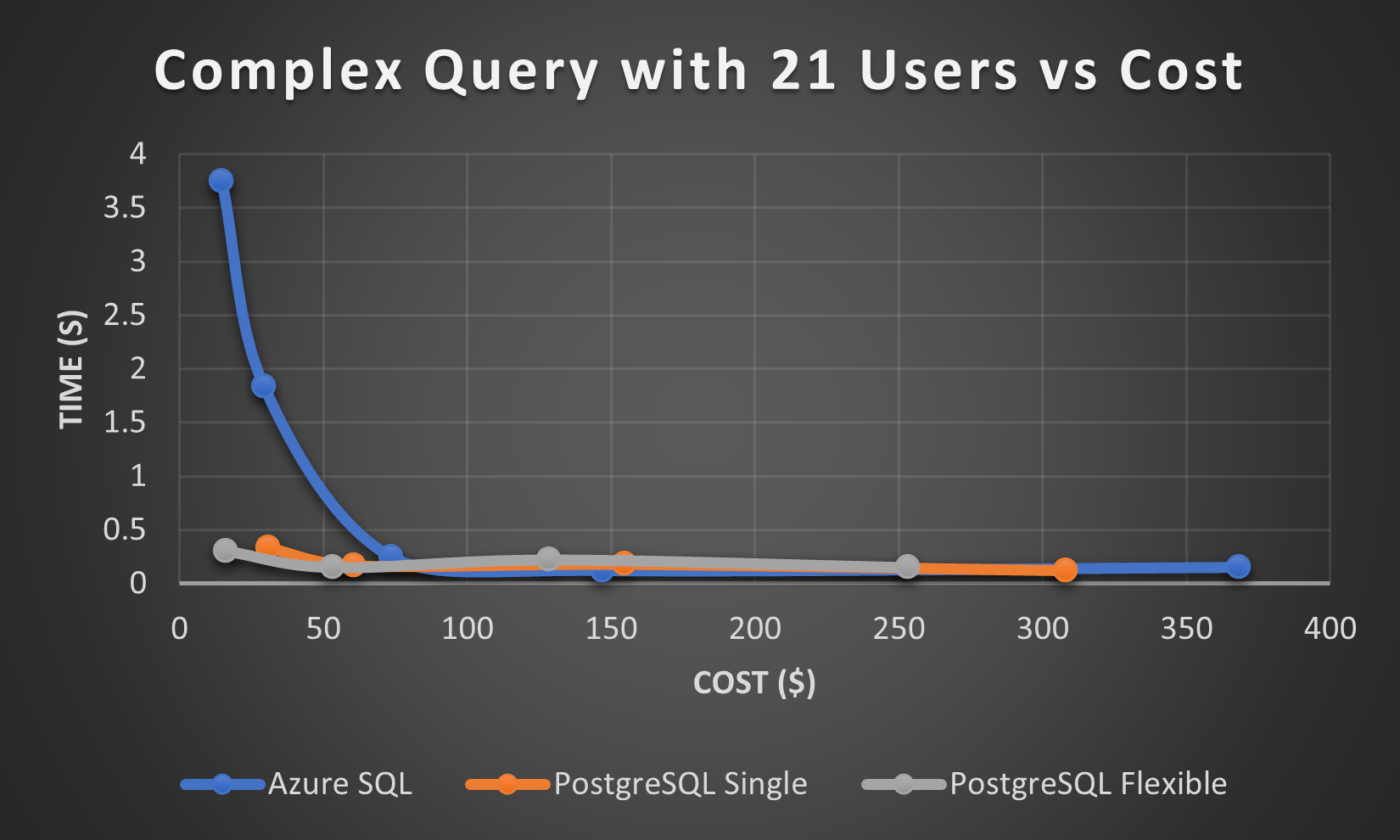

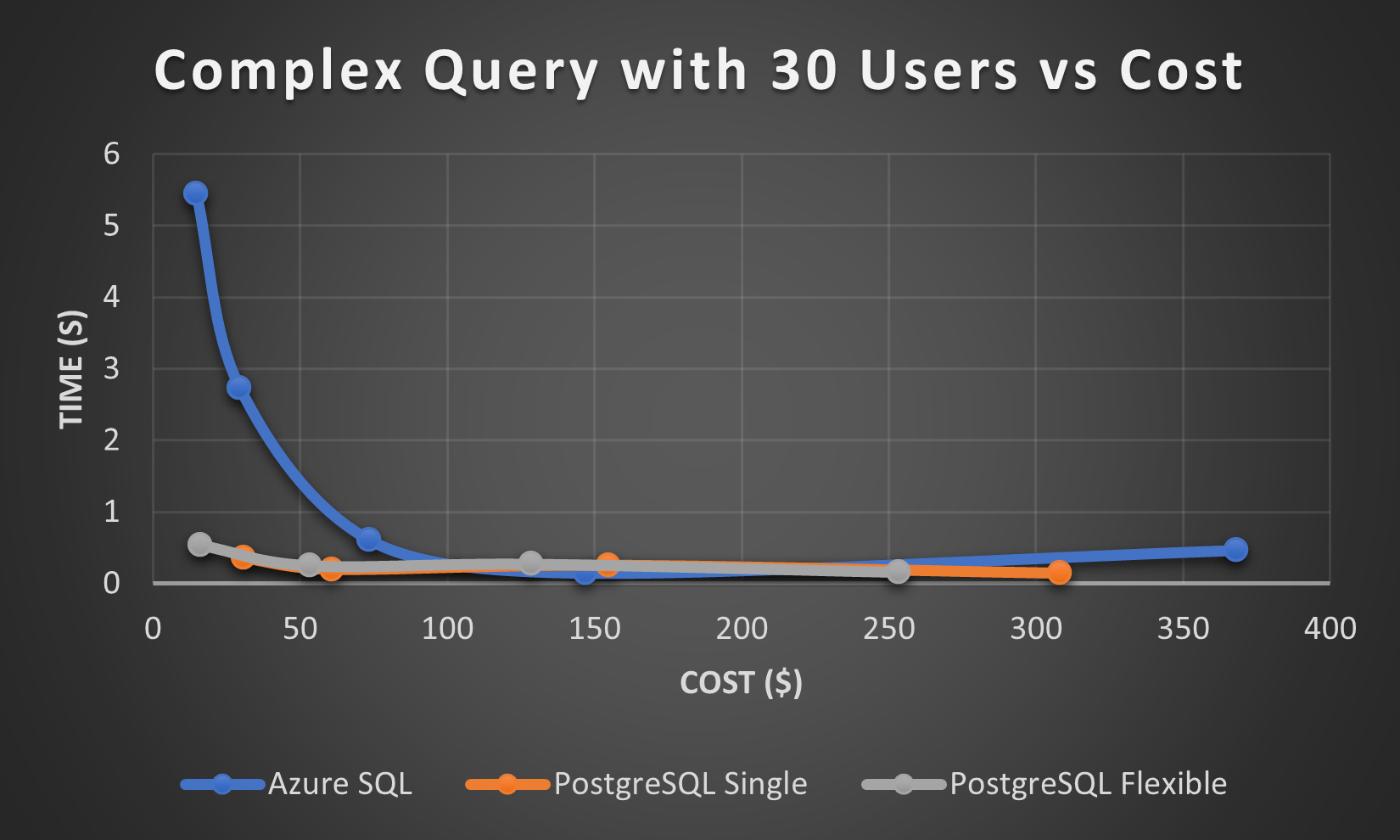

I also wanted to try to measure the impact of multiple users executing similar queries concurrently. For this test I executed an Azure Function multiple times in parallel. This may not be a true multi-user test because the Azure Functions can be processed using multiple threads, but I think it still gives a very good measure of the impact of multiple concurrent users. I ran these tests simulating 3, 12, 21, and 30 users executing the complex queries from my application necessary to populate a single screen. The query for each concurrent user is similar but not exactly the same. I used 12 different queries most of which involved 7 separate complex selects returning between 2,000 and 7,000 rows. The charts below shows the results for Azure SQL and PostgreSQL respectively.

Other Tests

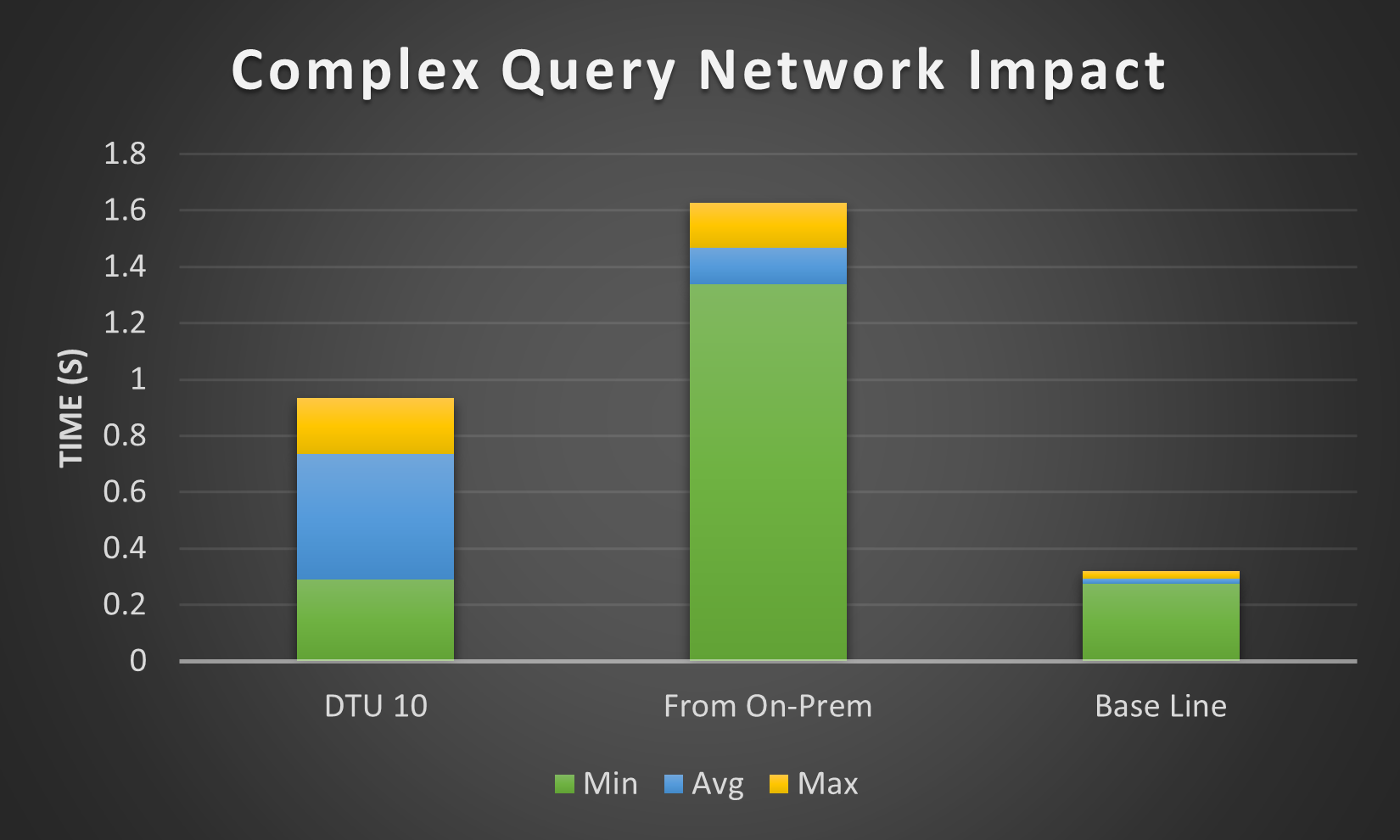

Since my Azure Functions were communicating with the database internally through Azure, I was curious to know whether there was any kind of degradation when connecting from on-prem to a database within an Azure VNet using a Private Endpoint. I just happened to have such an Azure SQL database configured as DTU 10. The chart below shows that there is a moderate impact which is most likely the result of network latency. Note that for the Base Line case (the current application database), the on-prem database server is on the same campus as the laptop from which I ran the tests.

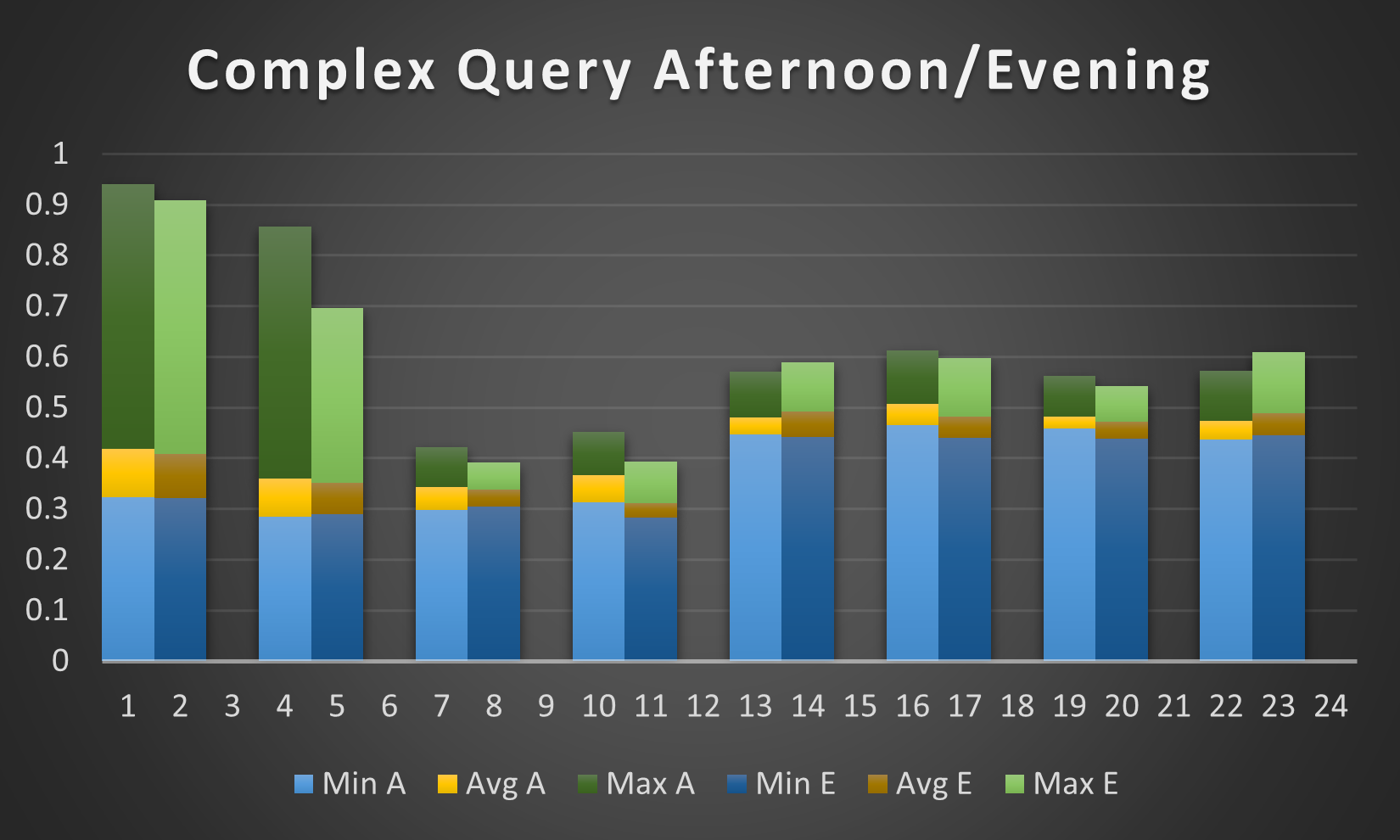

I scheduled my Azure Functions to run in the evenings (after 6 pm in the East US 2 region). I decided to also run some tests during working hours (2 pm in this case) to see if the time of day might have any impact on performance. In the chart below you will see the PostgreSQL performance in the afternoon (A) next to the evening (E). The results were similar for Azure SQL and indicate that using the database in the afternoon was relatively the same as the evening.

Comparison of AzureSQL and PostgreSQL

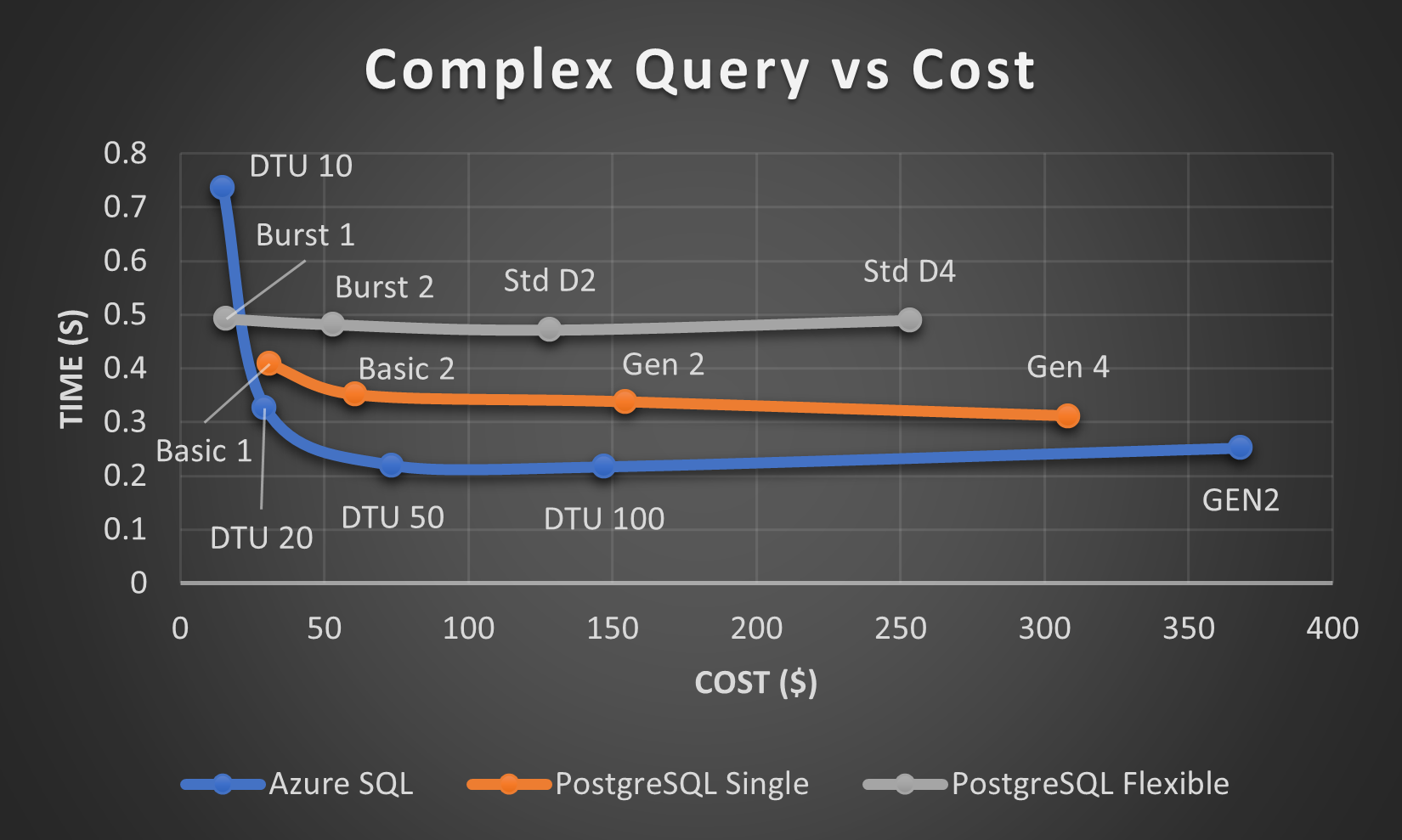

In order to combine AzureSQL and PostGreSQL into the same chart, I have used cost as the common attribute across configurations. The chart below shows the single-user comparison:

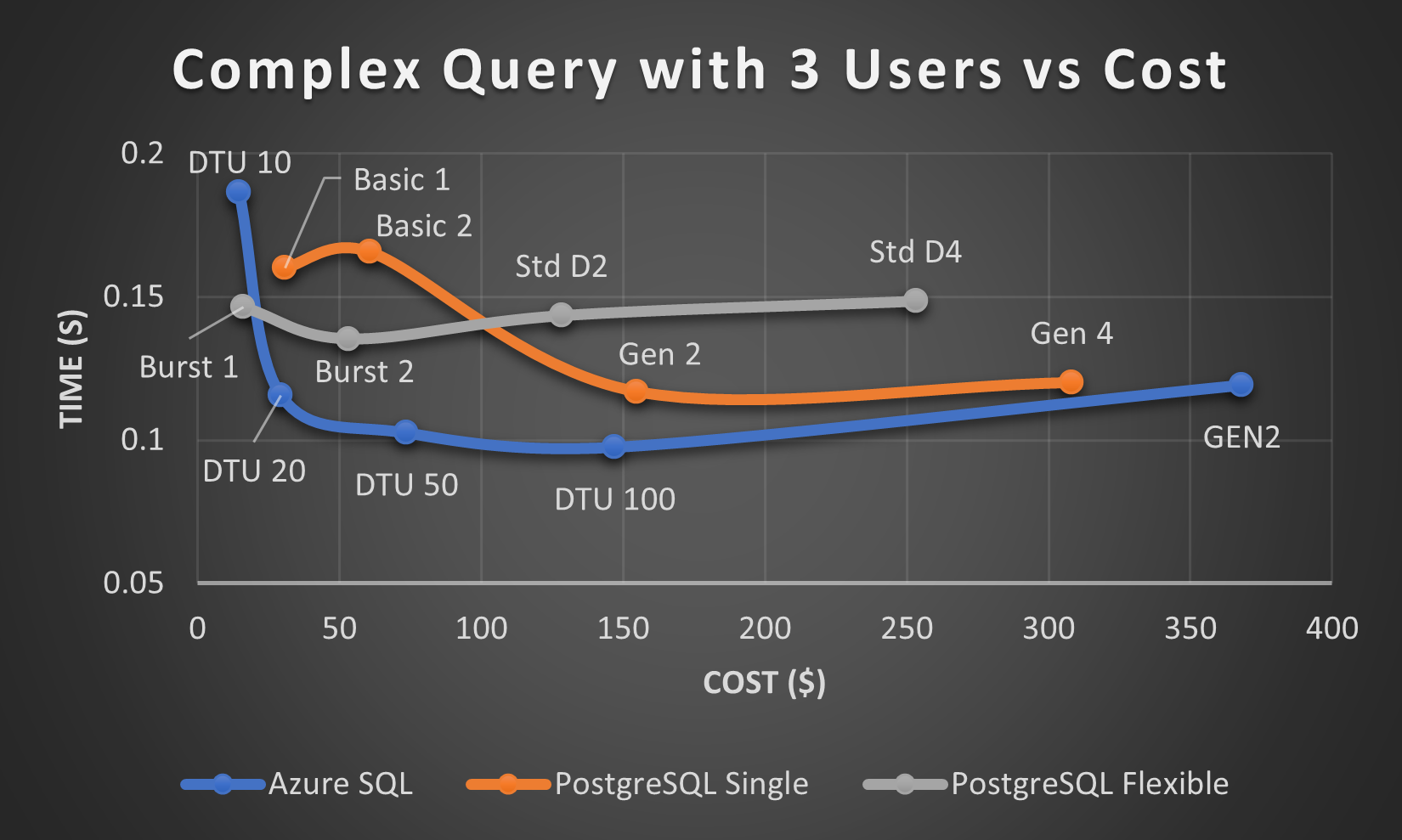

The chart below shows the comparison for 3 concurrent users which looks very similar to the single-user performance.

With 12 concurrent users, the results start to take on a different form. The next three charts show that even the low-cost PostgreSQL configurations provide relatively the same performance even when increasing the number of concurrent users.

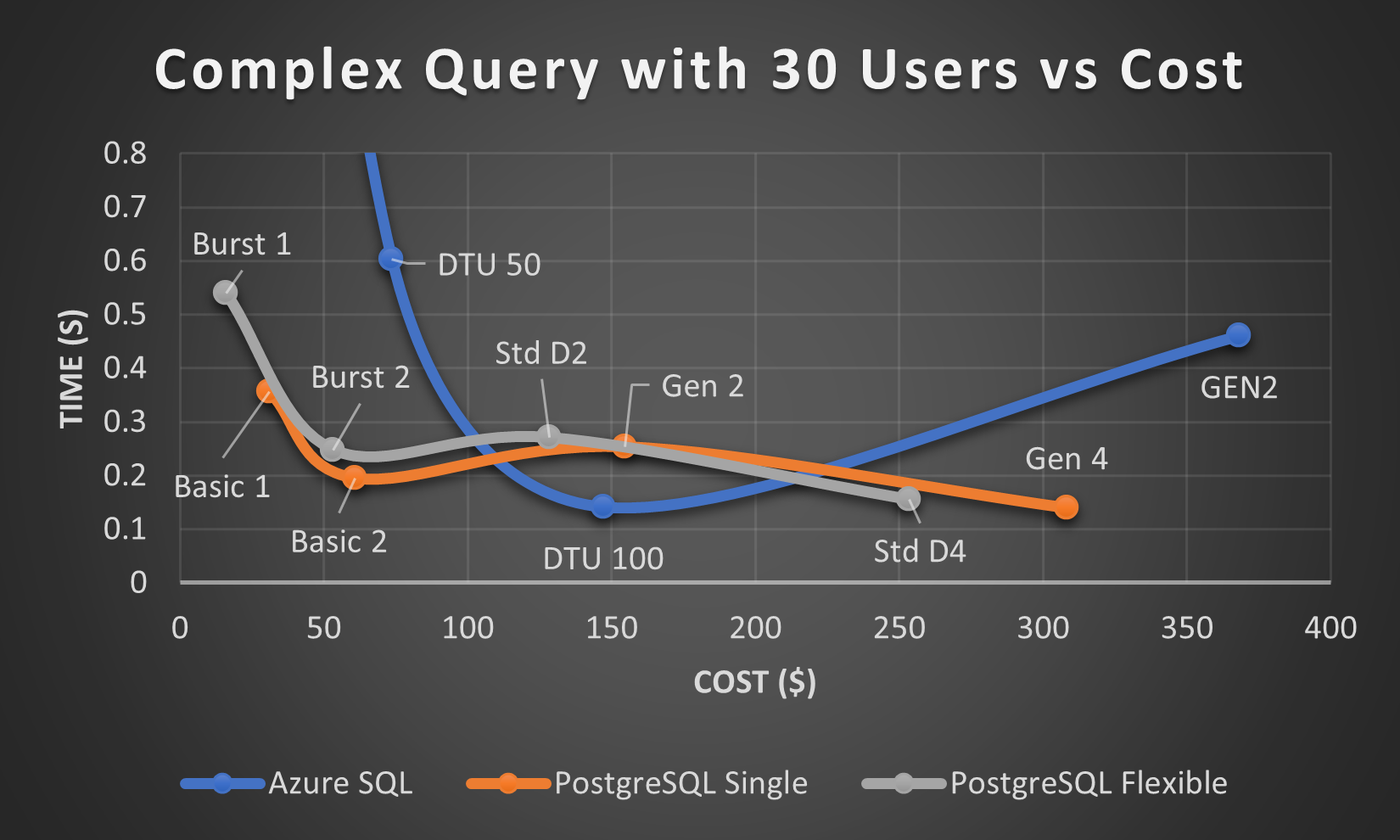

In order to see the comparison more clearly for 30 concurrent users, the chart below zooms in on the performance between 0 and .8 seconds:

Conclusion

With both types of databases, the performance curves are fairly flat past the lowest cost configurations even with 30 concurrent users. This shows that if you have an application with a small number of users, you don't really need one of the high-end configurations.

If you prefer using Azure SQL, the DTU 20 configuration starts to provide good performance with a small number of users. Of course if over time you end up with more concurrent users, you can very easily and quickly scale up to improve the performance. For my application, the DTU 50 configuration will give me comparable performance as with my current SQL Server Express database.

For PostgreSQL, there is little performance difference between the Flexible Server and Single Server configurations. The Flexible Server option provides the better ability for scaling up and down between several pricing models and does not require adding a Private Endpoint in order to provide private access.