Open Sourcing Kafka Connect transforms for Qlik Replicate

We're very pleased to announce Kafka Connect transforms for Qlik Replicate in open source. This Single Message Transformation library aims to ease a Change Data Capture event integration into the Kafka ecosystem.

In a previous post, we were explaining how Michelin development teams use Qlik Replicate to capture database change events and push them to Apache Kafka. Having the database events in Kafka offers a lot of opportunities like processing them to build new insights or use a Kafka-Connect configuration to persist these events "as-is" into another database like an old fashion replication tool.

Yes, you read right, we combine a Change Data Capture tool, Kafka and Kafka-Connect to propose a replication capability to our teams.

Wait wait ... can't Qlik Replicate do replication?

Qlik Replicate as replication tool

First things first, Qlik Replicate is initially a replication tool, and a great one. Companies have been using it for a long time to replicate data from one repository to another. It has been using the database logs to capture events way before the term "Change Data Capture" (aka. CDC) was invented. With the raise of the CDC's hype, Qlik Replicate had an opportunity to take a new position in the integration technologies landscape.

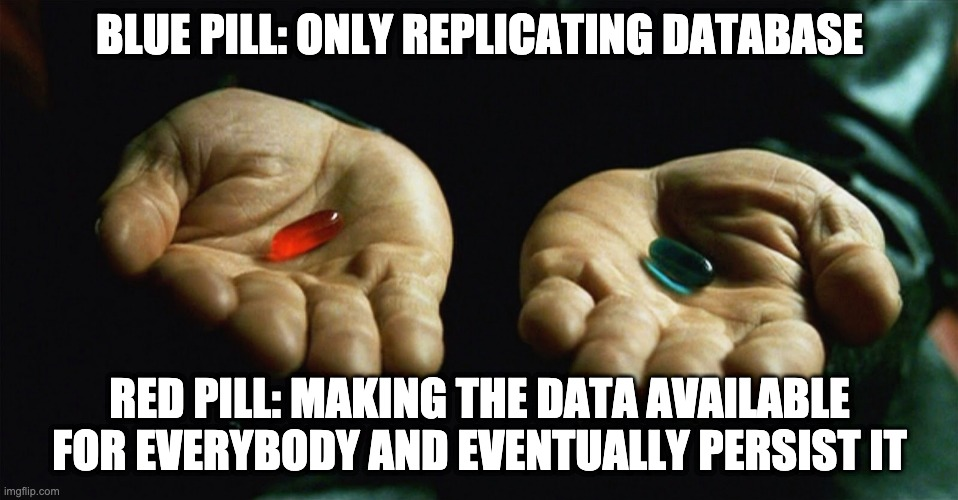

So yes, you can replicate a Source A to a Target B with Qlik Replicate but... what if a new project (Target C) need the same data? The naive response is to create another replication link between Source A and Target C.

Qlik Replicate builds point-to-point integration links with all the pitfalls associated (source-target strong coupling, spaghetti of integration flows, data duplication etc).

One way to solve this issue could be to use the "Stream log" feature. The initial purpose of this solution was to reduce the number of database connection on the source by asking Qlik replicate to copy locally the source database event log. First, we define a flow between the Source A and the CDC tool: the software will copy the database's log. Secondly, we define the flows between Qlik Replicate and the targets. Each target can be configured independently (eg. to consume only a set of tables). From a technical point, the target will not consumes the actual database event log but a copy of it available in Qlik Replicate servers.

While being very interesting, this feature still has some limitations. The copy of the database log managed by Qlik Replicate is mutualized for all the target. It means configuration like "retention" (how much time a data is kept by the local copy) is the same regardless of the target usage. From a high level perspective, it could looks like a cheap implementation of the PUB/SUB pattern but if you really want to achieve this pattern then delegate this concern to a dedicated message broker system.

On top of that Qlik replicate can not compete with Kafka when it comes to resiliency or scalability.

If your unique objective is to replicate a database to another one (and there are plenty of meaningful use-cases) then go with Qlik Replicate and stop reading here. But if your first ambition is to make your application's data available and persist it then dive into the white rabbit hole ;-)

Kafka Connect JdbcSinkConnector to the rescue

In our journey of modernization, we have been using Qlik replicate to capture legacy database events and now this data is avaibable in Kafka. Most of the consumers process the information on the fly to build new insights but we also have this Business Intelligence project who desperately needs the data coming from the legacy database.

The BI project has a classic data-preparation chain. First, they have a "Data store" which is a dump of the source. At this point they keep the data and the structure just as it is in the source. Then a set of ETL jobs will process the data and persist the result it to a Data Warehouse. At the end, we plug a visualization tool.

Note: yes all the data-prep could have been done in Kafka with stream processing but Rome wasn't built in a day. We can't rewrite each application just because we have a new technology. Here we made a quick win helping a project by providing the data which was already available in Kafka.

In the Kafka ecosystem, Kafka-Connect is specifically designed to integrate Kafka with the rest of the world. A source connector will interface a data source to Kafka where a sink connector will offload data from Kafka to a target.

Back to our scenario, we want to persist data coming from Kafka to the BI "Data Store" which appears to be an Oracle Database. JdbcSinkConnector looks like the perfect tool.

The Kafka Connect JDBC sink connector allows you to export data from Apache Kafka® topics to any relational database with a JDBC driver.

This connector will transform a message like { "id": 1, "email": "john@doe.com"} in the Author topic to the SQL statement INSERT INTO Author (id, email) VALUES (1, "john@doe.com"); That means the structure of the message in Kafka will be the structure (ie. columns) of the target tables.

When using Qlik Replicate, the message pushed to Kafka contains a lot of metadata like:

{

"data": {

"id": 1,

"email": "foo@bar.com"

},

"beforeData": {

"id": 1,

"email": "john@doe.com"

},

"headers": {

"operation": "UPDATE",

"changeSequence": "100",

"timestamp": 1000,

"streamPosition": "1",

"transactionId": "1",

"changeMask": "1",

"columnMask": "1",

"transactionEventCounter": 1,

"transactionLastEvent": true

}

}As you may guessed, the structure is way to complex to JdbcSinkConnector and will need some pre-processing.

Kafka Connect transforms for Qlik Replicate

Michelin IT is really proud to announce we have open sourced the Kafka Connect transforms for Qlik Replicate. This library is implementing the API "Single Message Transformation" (aka. SMT) proposed by Kafka Connect. SMTs permits to declare operations to apply on messages when they are processed in Kafka connectors.

The Kafka Connect transforms for Qlik Replicate will help to transform this complex structure

{

"data": {

"id": 1,

"email": "foo@bar.com"

},

"beforeData": {

"id": 1,

"email": "john@doe.com"

},

"headers": {

"operation": "UPDATE",

"changeSequence": "100",

"timestamp": 1000,

"streamPosition": "1",

"transactionId": "1",

"changeMask": "1",

"columnMask": "1",

"transactionEventCounter": 1,

"transactionLastEvent": true

}

}to a message ready to be consumed by the JdbcSinkConnector we mentioned above

{

"id": 1,

"email": "foo@bar.com"

} Our Kafka-Connect configuration will look like this

"transforms": "extract", //<1>

"transforms.extract.type": "com.michelin.kafka.connect.transforms.qlik.replicate.ExtractNewRecordState", //<2>1- We define the transformation named extract

2- The extract transformation will be using the com.michelin.kafka.connect.transforms.qlik.replicate.ExtractNewRecordState type (named of the SMT Java Class).

Now, every time the JdbcSinkConnector will try to persist a message coming from Qlik Replicate, the SMT will extract the database record data before being push to the target data store (insert/update/delete and even alter table changes).

Kafka Connect transforms for Qlik Replicate proposes more advanced configuration (specially for delete management), look the project documentation for further details.

Conclusion

This post explain the opportunity to combine Qlik Replicate with Kafka and Kafka-Connect as database replication solution.

Kafka Connect transforms for Qlik Replicate is a new Open-Source Kafka Connect transformation that can be used to easily persist Qlik Replicate change events present in your Kafka topics.